Pentagon Demands Unrestricted AI Models on Classified Networks Amid Ethical Concerns

The U.S. Department of Defense is pressuring top AI firms—including OpenAI, Anthropic, Google, and xAI—to deploy unfiltered large language models on classified military networks, bypassing standard safety protocols. The move, aimed at enhancing operational intelligence and decision-making, has sparked intense debate over AI ethics, security, and accountability.

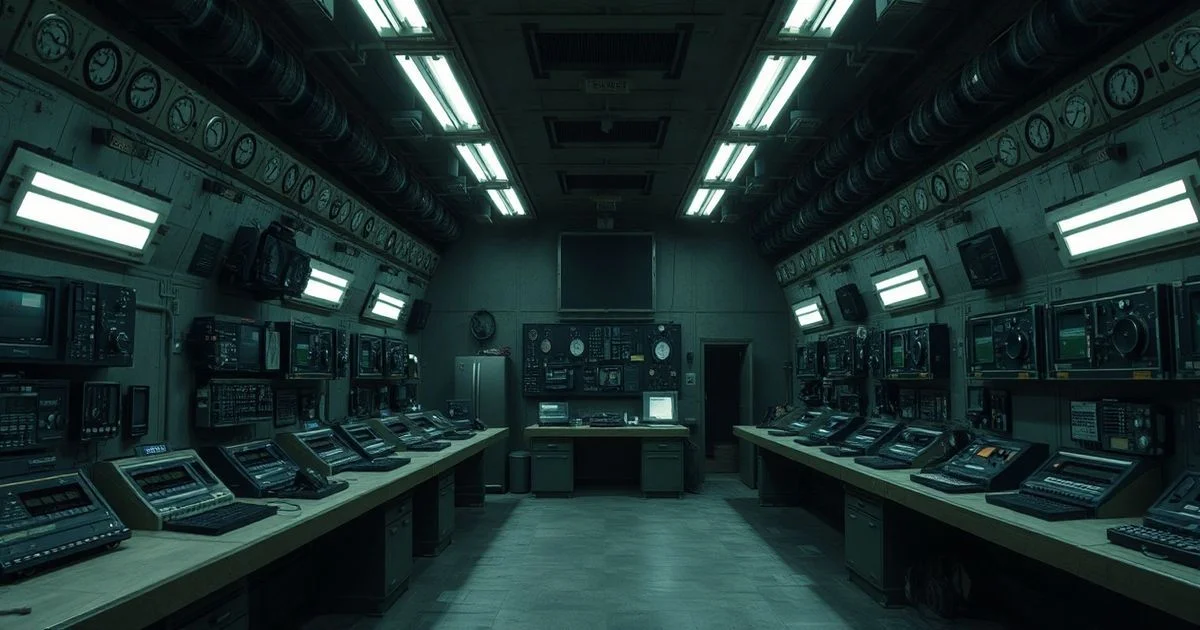

The U.S. Department of Defense is accelerating its integration of artificial intelligence into classified military operations by demanding that leading AI companies remove usage restrictions on their most advanced models, according to multiple sources. In a stark departure from public-facing AI governance, the Pentagon has reportedly requested that firms like OpenAI, Anthropic, Google, and xAI deploy unrestricted versions of their models on secure, air-gapped military networks—bypassing content filters, alignment safeguards, and ethical guardrails typically enforced in civilian applications.

According to Blockonomi, the initiative, internally referred to as Project Stargate, seeks to unlock the full analytical and predictive capabilities of generative AI for intelligence analysis, battlefield logistics, cyber defense, and real-time decision support. The goal, officials say, is to outpace adversarial nations in AI-driven warfare. "We’re not asking for toys," said a senior Defense Department official speaking anonymously. "We’re asking for tools that can process classified satellite imagery, decrypt enemy communications, and simulate complex conflict scenarios without being hamstrung by corporate compliance protocols."

Technology.org corroborates the urgency of the initiative, describing the Pentagon’s request as an effort to deploy AI "without parental controls" on its most sensitive networks. The article highlights that current AI safety mechanisms—designed to prevent misinformation, bias, or harmful outputs—are seen as liabilities in high-stakes military contexts. For instance, an AI model trained to refuse generating tactical plans involving civilian infrastructure may inadvertently obstruct mission-critical operations in urban warfare scenarios. Military analysts argue that in dynamic combat environments, the cost of hesitation outweighs the risk of erroneous outputs.

However, the move has drawn sharp criticism from AI ethics experts and former government advisors. "Deploying unfiltered AI on classified networks is like handing a nuclear launch code to a child who’s never been taught the difference between right and wrong," said Dr. Elena Vasquez, a former White House AI policy advisor now at the Center for Security and Emerging Technology. "These models are trained on massive, unvetted datasets. There’s no guarantee they won’t hallucinate intelligence, leak classified data through inference attacks, or be manipulated via adversarial prompts."

Internal Pentagon documents obtained by investigative journalists indicate that the agency is offering lucrative contracts and access to classified datasets as incentives for AI firms to comply. OpenAI and Anthropic have reportedly resisted, citing internal ethical review board mandates. Google, meanwhile, is said to be exploring a "tiered access" model, in which restricted versions run on unclassified networks while a separate, sanitized variant operates on classified systems under strict audit protocols. xAI, Elon Musk’s AI venture, has publicly aligned with the Pentagon’s stance, calling the restrictions "a relic of Silicon Valley’s performative ethics."

The push has historical precedent. As noted by the U.S. Department of Defense’s Historical Office, the Pentagon has a long-standing tradition of adopting cutting-edge technologies during periods of strategic urgency, from radar in World War II to stealth technology during the Cold War. But this may be the first time the department has sought to bypass ethical guardrails built into foundational AI systems. Critics warn that normalizing unrestricted AI in military contexts could set a dangerous precedent for global arms races in autonomous weaponry and algorithmic deception.

As of early 2026, negotiations continue between the DoD and private AI firms. Congress has begun hearings on the matter, with bipartisan concern over accountability, oversight, and the potential for AI-induced miscalculations. The outcome may redefine the boundaries of AI governance—not just in defense, but globally.