Local AI Enthusiast Breaks Ground on LTX-2 Motion LoRA Training Without Cloud Costs

A self-taught AI practitioner shares a method for training LTX-2 motion LoRAs locally on consumer hardware, bypassing paid cloud services. His optimizations enabled successful training on a single RTX 3090 with a dataset of just 24 videos.

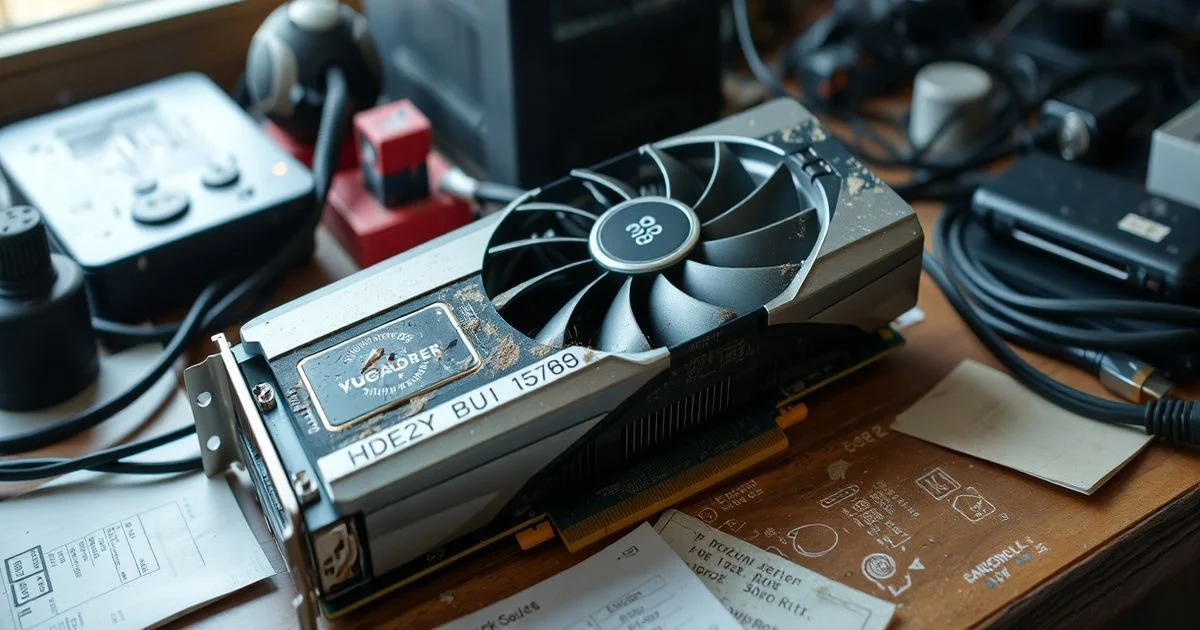

In a remarkable demonstration of grassroots innovation in the generative AI community, a hobbyist developer has successfully trained a motion-specific LoRA model for the LTX-2 video generation model using only consumer-grade hardware — and without relying on paid cloud services. According to a detailed post on Reddit’s r/StableDiffusion, the contributor, known online as u/Plenty-Flow-6926, overcame severe memory constraints on an NVIDIA RTX 3090 to produce a functional motion LoRA using the AI-Toolkit framework on Ubuntu 24.04.

The project was motivated by the absence of a local, open-source equivalent to a popular motion LoRA originally developed for the Wan2.1 model. Rather than pay for cloud-based training services, the enthusiast opted for a fully local workflow, using a dataset of 24 short, silent video clips (each 6 seconds long, 760x1280 resolution). While character-based LoRAs for LTX-2 had previously been trained in under three hours using image datasets, motion LoRAs presented a far more demanding challenge due to the temporal dimension of video data.

Optimizing for Memory: A Technical Masterclass

The initial attempts resulted in frequent Out-of-Memory (OOM) crashes. To combat this, the trainer abandoned the AI-Toolkit’s graphical user interface after generating a base YAML configuration and switched entirely to command-line operations to eliminate unnecessary overhead. A critical breakthrough came from modifying the run.py script by adding the line:

os.environ["PYTORCH_CUDA_ALLOC_CONF"] = "expandable_segments:True"

This setting, described in PyTorch's CUDA memory management documentation, lets the caching allocator grow existing memory segments instead of reserving many fixed-size blocks. That reduces fragmentation, a common cause of OOM errors even when enough total memory is free, and makes it a useful optimization for long-running video training jobs.
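A minimal sketch of where such a line could sit, assuming the variable needs to be in place before PyTorch first initializes its CUDA allocator (setting it before `import torch` is the safest ordering). The helper name `enable_expandable_segments` is hypothetical and not part of AI-Toolkit. Unlike a plain assignment, it keeps any allocator options already present in the environment.

```python
import os


def enable_expandable_segments(env=os.environ):
    """Add expandable_segments:True to PYTORCH_CUDA_ALLOC_CONF, keeping other options."""
    key = "PYTORCH_CUDA_ALLOC_CONF"
    # Existing options form a comma-separated list of name:value pairs.
    options = [o for o in env.get(key, "").split(",") if o]
    # Drop any earlier expandable_segments entry so the new value wins.
    options = [o for o in options if not o.startswith("expandable_segments:")]
    options.append("expandable_segments:True")
    env[key] = ",".join(options)
    return env[key]


# Call this at the very top of the entry script, before `import torch`.
enable_expandable_segments()
```

Whether the setting actually helps depends on the workload; it targets fragmentation and does not reduce the memory the model itself needs.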

Further refinements included reducing the number of frames per video from the default 145 to 49 — a strategic cut that still preserved roughly two seconds of motion (at 24 fps), sufficient for the model to learn key movement patterns without overwhelming VRAM. Gradient accumulation was increased from 1 to 4, effectively simulating a larger batch size and stabilizing training dynamics. The learning rate was raised from 0.0001 to 0.0002 to match the increased effective batch size, improving convergence speed without sacrificing stability.
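The arithmetic behind these settings can be checked in a few lines. This is a sketch, not the trainer's own calculation: a per-step batch size of 1 is an assumption (the post does not state it), and the post does not say which learning-rate scaling rule was applied. Doubling the rate for a fourfold larger effective batch happens to match the square-root rule rather than the linear one.

```python
import math

FPS = 24                              # frame rate stated in the article
frames_default, frames_used = 145, 49

clip_seconds_default = frames_default / FPS   # about 6.04 seconds
clip_seconds_used = frames_used / FPS         # about 2.04 seconds
frame_fraction = frames_used / frames_default # about one third of the frames

micro_batch = 1                       # assumption: not stated in the post
grad_accum = 4                        # raised from 1 to 4
effective_batch = micro_batch * grad_accum

base_lr = 1e-4
scale = grad_accum / 1                # growth factor of the effective batch
lr_linear = base_lr * scale           # linear scaling rule
lr_sqrt = base_lr * math.sqrt(scale)  # square-root scaling rule

print(f"clip length: {clip_seconds_default:.2f}s -> {clip_seconds_used:.2f}s")
print(f"effective batch size: {effective_batch}")
print(f"linear rule lr: {lr_linear:.4f}, sqrt rule lr: {lr_sqrt:.4f}")
```

Gradient accumulation changes how often the optimizer steps, not how much memory one forward pass needs, which is why it pairs well with the frame reduction on a 24 GB card.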

Perhaps most significantly, the text encoder was unloaded during training after its embeddings were cached. This single change freed up several gigabytes of VRAM, allowing the GPU to dedicate more memory to the video diffusion transformer. The entire process, though demanding, completed in 29 hours, showing that motion LoRA training is feasible on a single consumer GPU.
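The cache-then-unload pattern can be illustrated without any GPU code. The sketch below is not AI-Toolkit's implementation: `ToyTextEncoder` and `cache_embeddings` are hypothetical stand-ins, and the "embedding" is a toy list of numbers. It only shows the ordering: encode every caption once, keep the results, then drop the encoder before the training loop starts.

```python
class ToyTextEncoder:
    """Hypothetical stand-in for a large text encoder held in VRAM."""

    def __init__(self):
        self.calls = 0

    def encode(self, caption):
        self.calls += 1
        # Fake "embedding": a short list of numbers derived from the caption.
        return [float(ord(c)) for c in caption[:8]]


def cache_embeddings(encoder, captions):
    """Encode each unique caption once and return a caption -> embedding map."""
    cache = {}
    for caption in captions:
        if caption not in cache:
            cache[caption] = encoder.encode(caption)
    return cache


captions = ["a person spins", "a person jumps", "a person spins"]
encoder = ToyTextEncoder()
cache = cache_embeddings(encoder, captions)
encoder_calls = encoder.calls

# Drop the encoder; the loop below only reads from the cache.
# With a real PyTorch model one would also release its GPU memory here,
# for example by deleting the module and calling torch.cuda.empty_cache().
encoder = None

for caption in captions:
    embedding = cache[caption]  # no encoder needed during training steps
```

This works because the captions are fixed for the whole run, so their embeddings never need to be recomputed.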

Implications for Decentralized AI Development

This case study underscores a growing trend: as generative AI tools become more accessible, users are developing sophisticated workarounds to democratize training capabilities. While commercial platforms like Runway ML and Pika Labs dominate headlines, this effort proves that meaningful innovation can emerge from personal rigs and open-source tooling. The methodology described could serve as a blueprint for other hobbyists, educators, and researchers in regions with limited access to cloud infrastructure.

The trainer’s humility — referring to the result as “half reasonable” — belies the significance of the achievement. By documenting every tweak and trade-off, he has provided a replicable, open-source roadmap for others navigating the same technical hurdles. As LTX-2 and similar models evolve, such community-driven optimizations may become foundational to the next generation of decentralized AI development.

For those interested in replicating the workflow, the full YAML configuration and script modifications are available in the original Reddit thread, with community members already beginning to adapt the approach for other video models.