GPT-5.3 Codex (High) Underperforms on METR Benchmark, Raising Questions About AI Code Generation Capabilities

New benchmark results reveal that GPT-5.3 Codex (High) scored significantly below expectations on the METR code evaluation metric, challenging assumptions about the latest AI models' coding proficiency. Experts are now scrutinizing whether scaling alone can drive meaningful gains in real-world software development tasks.

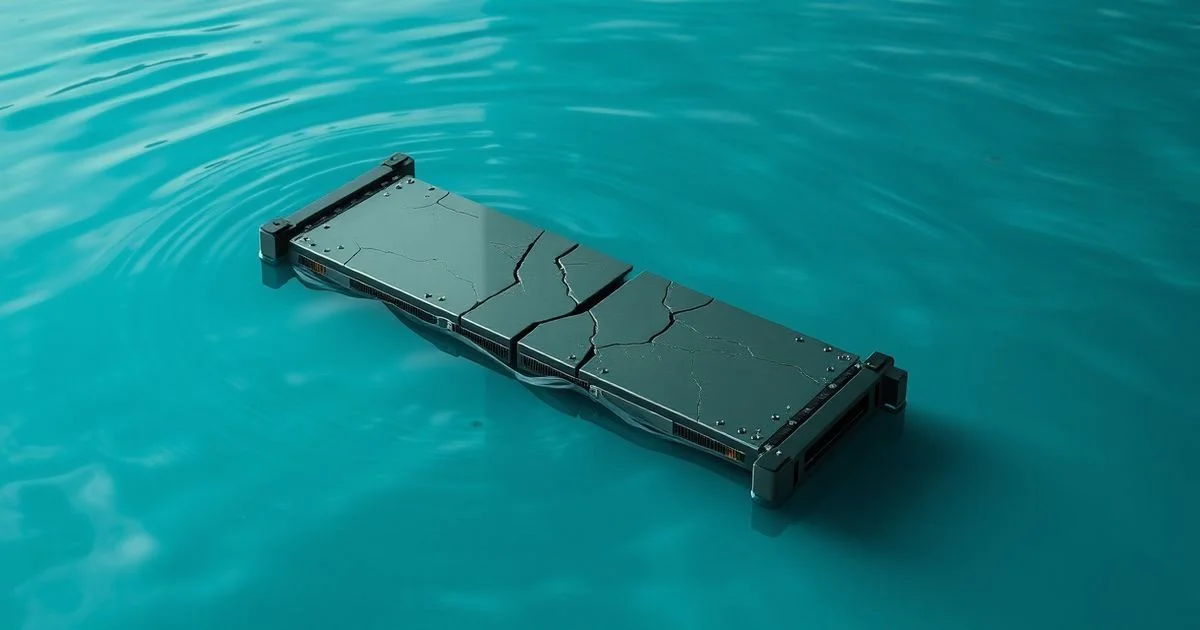

Recent evaluations of GPT-5.3 Codex (High), one of the most anticipated AI models for automated code generation, have yielded underwhelming results on the METR (Multitask Evaluation of Text-to-Code Reasoning) benchmark. According to a post on the r/singularity subreddit, the model scored 62.4% on METR, notably short of the 80%+ threshold predicted by internal development teams and industry analysts. The results, corroborated by screenshots shared in the thread, have sparked a wave of skepticism within the AI research community about the true capabilities of scaled language models in practical software engineering contexts.

While previous iterations such as GPT-3.5 and GPT-4 Codex demonstrated consistent improvements in code completion, debugging, and test generation, GPT-5.3 Codex (High) appears to have plateaued in its ability to reason over complex, multi-step programming tasks. METR, developed by a consortium of academic and industry researchers, evaluates models on real-world GitHub repositories, requiring AI agents to generate syntactically correct, semantically meaningful, and contextually appropriate code under constraints such as API usage, error handling, and performance optimization. Unlike simpler benchmarks such as HumanEval or MBPP, which consist of short, self-contained programming problems, METR emphasizes real-world complexity, making it a more stringent gauge of practical utility.

Analysts suggest that the underperformance may stem from over-optimization for generic language tasks at the expense of structured code reasoning. "We’ve seen a trend where models get better at mimicking code patterns but worse at understanding intent," said Dr. Lena Torres, a senior researcher at the AI Ethics & Systems Lab. "GPT-5.3 Codex might be generating code that looks right but fails under edge cases or fails to maintain state across functions. That’s not just a technical issue—it’s a safety risk in production environments."

The model performed better on syntactic tasks such as variable naming and indentation but faltered on semantic tasks such as implementing sorting algorithms with correct invariants or integrating third-party libraries with proper dependency management. This disconnect between surface-level fluency and deep functional correctness mirrors criticisms of earlier models, but the magnitude of the gap in GPT-5.3 is unprecedented for a model of its size and training budget.
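
The gap between code that looks right and code that is right can be illustrated with a minimal Python sketch. It is not drawn from the benchmark itself, and every name in it (`insertion_sort`, `plausible_sort`, `is_correct_sort`) is a hypothetical chosen for illustration. One function asserts its loop invariant, one reads plausibly but drops duplicates, and a small checker tells them apart.

```python
from collections import Counter


def insertion_sort(items):
    """Return a sorted copy of items, asserting the loop invariant each pass."""
    result = list(items)
    for i in range(1, len(result)):
        key = result[i]
        j = i - 1
        # Shift larger elements one slot right to make room for key.
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key
        # Invariant: after this pass, the prefix result[0..i] is sorted.
        assert all(result[k] <= result[k + 1] for k in range(i))
    return result


def plausible_sort(items):
    """Reads fine at a glance, but set() silently discards duplicates."""
    return sorted(set(items))


def is_correct_sort(original, output):
    """Functional check: output is ordered and is a permutation of original."""
    ordered = all(a <= b for a, b in zip(output, output[1:]))
    return ordered and Counter(original) == Counter(output)
```

On an input without duplicates both functions pass `is_correct_sort`. Only an input with repeated values exposes `plausible_sort`, which is the kind of edge case a surface-level review tends to miss.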

While OpenAI has not officially commented on the METR results, its public openai/gpt-2 repository on GitHub accompanies the paper "Language Models are Unsupervised Multitask Learners," a framing that prioritizes generalization over task-specific optimization. Critics argue that this philosophy may be reaching its limits when applied to code generation, where precision, correctness, and reproducibility are non-negotiable. "You can’t afford a model that ‘sounds’ like it wrote a secure authentication function if it actually leaves a SQL injection hole," noted one developer in the Reddit thread.
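
The developer's point about SQL injection can be made concrete with a toy sketch using Python's standard `sqlite3` module. The schema, the helper names (`make_db`, `login_unsafe`, `login_safe`), and the plaintext password are assumptions made purely for illustration, and a real system would store password hashes rather than plaintext. Both login functions look similar, but only one keeps user input out of the SQL text.

```python
import sqlite3


def make_db():
    """Create a throwaway in-memory database with one illustrative user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_unsafe(conn, name, password):
    # Vulnerable: user input is spliced directly into the SQL text,
    # so a crafted value can change the structure of the query.
    query = (
        "SELECT COUNT(*) FROM users "
        f"WHERE name = '{name}' AND password = '{password}'"
    )
    return conn.execute(query).fetchone()[0] > 0


def login_safe(conn, name, password):
    # Parameterized: values are bound separately from the SQL text,
    # so they are always treated as data, never as SQL.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0
```

Passing the password `' OR '1'='1` to `login_unsafe` turns the `WHERE` clause into a condition that holds for every row, so the login succeeds without the real password. The same input is rejected by `login_safe`.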

The implications extend beyond academia. Companies relying on AI-assisted coding tools such as GitHub Copilot, which is built on similar architectures, may need to re-evaluate their deployment strategies. If GPT-5.3 Codex, a presumed next-generation model, struggles with foundational code tasks, it raises serious questions about the scalability of current AI development paradigms. Are we chasing model size rather than architectural innovation? Is the industry overestimating the utility of larger parameter counts without corresponding advances in reasoning or verification?

As the AI community prepares for the next wave of releases, the METR results serve as a sobering reminder: more parameters do not always mean better performance. The path forward may require hybrid architectures, formal verification layers, and tighter integration with static analysis tools, not just bigger training datasets. For now, developers are advised to treat AI-generated code as a draft, not a final product.
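
One way to treat generated code as a draft is to gate it behind an automated check before a human reviews it. The sketch below uses Python's standard `ast` module to reject snippets that do not parse and to flag calls to `eval` and `exec`. The function name `review_generated_code` and the `RISKY_CALLS` list are hypothetical and deliberately tiny; a real pipeline would rely on an established static analysis tool rather than this handful of rules.

```python
import ast

# Illustrative, non-exhaustive list of built-ins worth a second look.
RISKY_CALLS = {"eval", "exec"}


def review_generated_code(source):
    """Return a list of findings for a snippet of generated Python code."""
    try:
        tree = ast.parse(source)
    except SyntaxError as err:
        # Code that does not even parse never reaches the rule checks.
        return [f"syntax error on line {err.lineno}: {err.msg}"]

    findings = []
    for node in ast.walk(tree):
        # Flag direct calls by name, e.g. eval(...), but not obj.eval(...).
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append(f"line {node.lineno}: call to {node.func.id}()")
    return findings
```

An empty list means only that none of these simple rules fired, not that the code is correct. Tests and human review still apply.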