Gemini 3.1 Pro Powers Realistic City Planner App, Sparks Debate on AI Human-Likeness

Google's newly released Gemini 3.1 Pro has demonstrated unprecedented reasoning capabilities by generating a fully functional SimCity-style urban planning application from a single prompt. While its benchmark results have drawn praise, users report that the AI's outputs feel increasingly mechanical, raising questions about the trade-off between intelligence and empathy in generative AI.

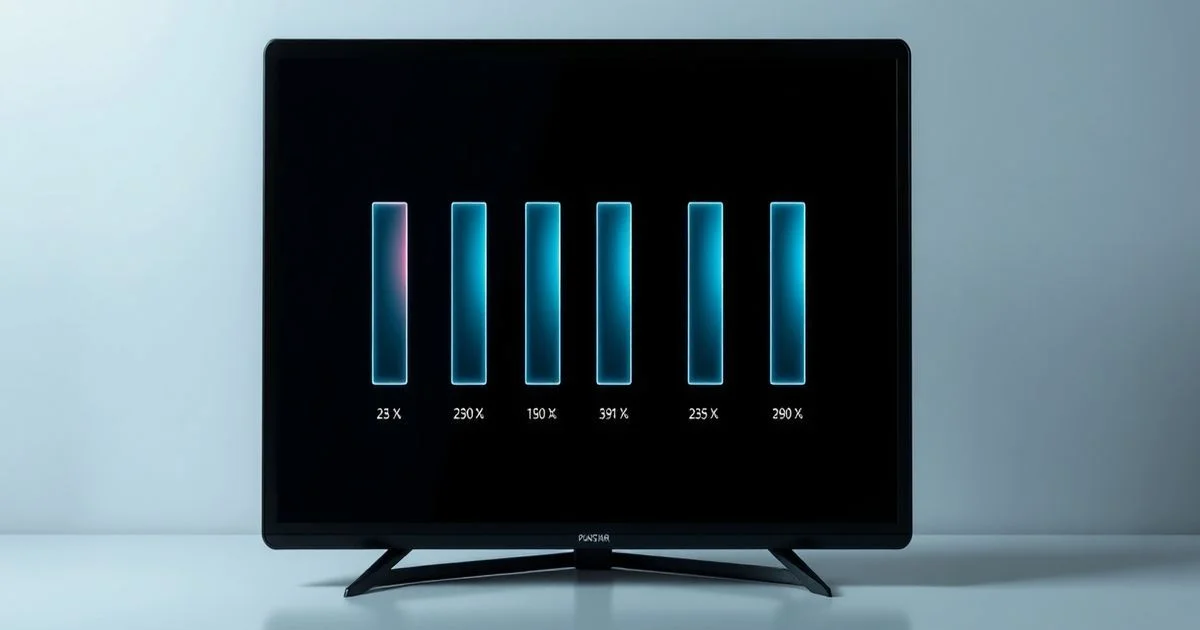

Google DeepMind has unveiled Gemini 3.1 Pro, its most advanced AI model to date, following a series of high-profile demonstrations showcasing its ability to generate complex, real-world applications from simple prompts. According to 36Kr (36氪), the model created a fully rendered SimCity-style urban planning app with intricate SVG-based visualizations in a single pass, and also produced a functional prototype of Windows 11, an achievement previously thought to require months of human-engineered development. The model outperformed leading competitors, including Claude Opus 4.6 and GPT-5.2, on 12 benchmark tests, excelling in particular on ARC-AGI-2, a notoriously difficult general-intelligence benchmark for evaluating abstract reasoning.

The app, which was shared on Reddit by user /u/Wonderful-Excuse4922, lets users simulate zoning laws, traffic flow, public transit routes, and environmental impact metrics in a dynamic, interactive environment. Developers noted that the model generated not only the backend logic but also the frontend UI, complete with responsive design elements and data visualization layers, all from a single natural-language instruction: "Build a realistic city planner app with SVG graphics and real-time simulation capabilities."

However, while the technical feats are undeniable, a growing number of users and AI ethicists are expressing concern over the model's diminished "human" qualities. As reported by MSN, early adopters describe interactions with Gemini 3.1 Pro as "precise but cold," noting that while the AI delivers flawless code and logical structures, it lacks the nuance, humor, and adaptive tone that characterized earlier versions. "It solves problems like a supercomputer, not a collaborator," said one software engineer who tested the model for a municipal planning consultancy. "I got perfect zoning recommendations, but it didn't ask me about community needs or historical context. It just optimized."

This shift reflects a broader industry trend: as AI models become more capable at reasoning and task completion, they often lose the subtle, conversational elements that make them feel relatable. Google DeepMind has publicly stated that the enhancements in Gemini 3.1 Pro focus on "increased logical fidelity, reduced hallucination, and improved multi-step planning," prioritizing accuracy over personality. Critics argue that in fields like urban planning, where social equity, cultural heritage, and public input are paramount, this trade-off may be dangerous.

Meanwhile, the app built by Gemini 3.1 Pro has already attracted interest from city governments in Europe and North America, with pilot programs being considered in cities like Rotterdam and Portland. Urban planners see potential in using the tool to rapidly prototype policy impacts, but caution against over-reliance. "AI can model traffic congestion or air quality, but it can’t understand why a neighborhood resists a new highway," said Dr. Lena Torres, an urban policy researcher at MIT. "The machine gives us data. We still have to give it meaning."

As Gemini 3.1 Pro enters enterprise and public-sector deployment, the debate intensifies: Is an AI that can build a city better than one that understands its people? The answer may determine not just the future of AI development, but the kind of cities we choose to build with it.