Open-Source Tool onWatch Revolutionizes AI API Quota Management Across Major Providers

A new open-source tool called onWatch enables developers to monitor and predict API quota usage across OpenAI, Anthropic, GitHub Copilot, and two other providers—all from a single dashboard. With real-time alerts, historical analytics, and zero telemetry, it addresses critical gaps in provider-native dashboards.


Developers who rely on multiple AI coding APIs have long had a fragmented, reactive view of their usage quotas. Each provider (OpenAI, Anthropic, GitHub Copilot, Synthetic, and Z.ai) offers its own dashboard that shows only a live snapshot, so users often run into unexpected throttling mid-task. onWatch, a new open-source tool written in Go, aggregates, analyzes, and visualizes quota usage across all five providers in one place, giving developers both a current picture and advance warning of upcoming limits.

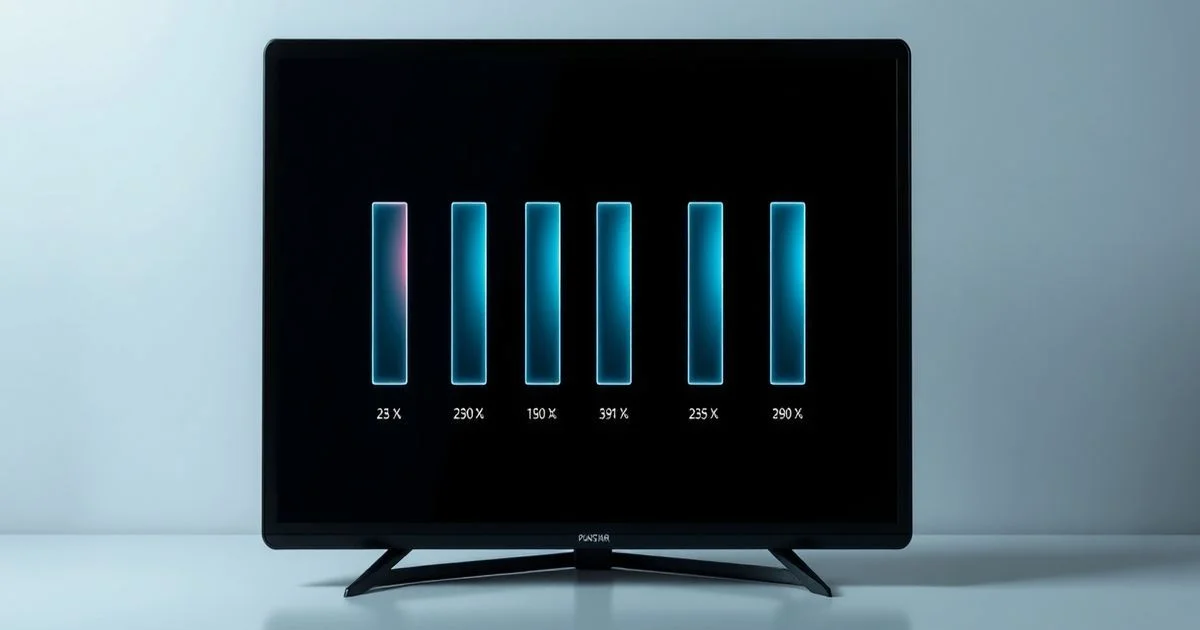

Created by developer prakersh and released under the GPL-3.0 license, onWatch is a lightweight 13 MB binary that runs locally and polls each provider's quota endpoint every 60 seconds. All data is stored in a local SQLite database, and the tool sends no telemetry. onWatch serves a responsive web dashboard that works in any modern browser and can be installed as a Progressive Web App (PWA) for offline access. Unlike the providers' native dashboards, it shows historical usage trends over windows from one hour to 30 days, live countdowns to quota resets, and rate projections that warn users before they hit a limit.

One of the most important features is the configurable alert system. Users can set thresholds, for example triggering an email or push notification once 80% of a quota has been consumed, so they can shift work to another provider before a limit is reached. This is especially valuable in enterprise environments where AI code-generation tools such as GitHub Copilot or Anthropic's Claude Code are mission-critical. According to user reports on Reddit, the tool has helped teams running several models at once avoid mid-development throttling and keep their workflows uninterrupted.
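As an illustration of threshold alerting, and not onWatch's actual code, the sketch below fires once when usage crosses a configured fraction of the limit and re-arms after usage drops back below it (for example, after a quota reset). The `Alert` type and its `Check` method are hypothetical, and email or push delivery is left out and represented by a returned boolean.

```go
package main

import "fmt"

// Alert is a hypothetical threshold rule, e.g. Threshold: 0.80 for 80%.
type Alert struct {
	Threshold float64 // fraction of the quota, between 0 and 1
	fired     bool    // set after firing, cleared when usage drops below the threshold
}

// Check reports whether a notification should be sent for this poll.
// It fires only on the transition from below to at-or-above the threshold,
// so repeated polls above the threshold do not send repeated notifications.
func (a *Alert) Check(used, limit float64) bool {
	if limit <= 0 {
		return false
	}
	over := used/limit >= a.Threshold
	switch {
	case over && !a.fired:
		a.fired = true
		return true
	case !over:
		a.fired = false // re-arm, e.g. after the quota window resets
	}
	return false
}

func main() {
	alert := &Alert{Threshold: 0.80}
	// Successive polls against a hypothetical 1000-unit quota; 100 follows a reset.
	for _, used := range []float64{700, 790, 800, 850, 100, 820} {
		if alert.Check(used, 1000) {
			fmt.Printf("alert: %.0f of 1000 used\n", used)
		}
	}
}
```

Here the alert fires at 800 and again at 820 after the reset, but not at 850, which keeps a busy quota from flooding the user with duplicate notifications.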

Cloud platforms such as Google Cloud Platform offer their own API monitoring, as described in OneUptime's guide, but these tools are usually tied to a single cloud ecosystem and do not track usage across AI providers. onWatch fills this gap as a provider-neutral aggregator, letting developers compare usage of Anthropic's Claude, OpenAI's GPT models, and GitHub Copilot side by side without switching between provider dashboards.
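A common way to build such an aggregator, offered here as a hypothetical sketch rather than onWatch's real design, is to hide each provider behind a small interface that returns a normalized snapshot, so the dashboard can compare providers uniformly. The `QuotaSource` interface, the `Snapshot` type, and the in-memory `fakeSource` are all illustrative; a real adapter would call the provider's quota endpoint over HTTP.

```go
package main

import (
	"fmt"
	"sort"
	"time"
)

// Snapshot is a hypothetical provider-neutral view of one quota.
type Snapshot struct {
	Provider string
	Used     float64
	Limit    float64
	ResetsAt time.Time
}

// Fraction returns the share of the quota consumed, or 0 if the limit is unknown.
func (s Snapshot) Fraction() float64 {
	if s.Limit <= 0 {
		return 0
	}
	return s.Used / s.Limit
}

// QuotaSource is a hypothetical per-provider adapter.
type QuotaSource interface {
	Name() string
	Fetch() (Snapshot, error)
}

// fakeSource stands in for a real HTTP client to one provider's quota endpoint.
type fakeSource struct {
	name        string
	used, limit float64
}

func (f fakeSource) Name() string { return f.name }

func (f fakeSource) Fetch() (Snapshot, error) {
	return Snapshot{Provider: f.name, Used: f.used, Limit: f.limit,
		ResetsAt: time.Now().Add(time.Hour)}, nil
}

// pollAll gathers one snapshot per source, skipping providers that fail,
// and sorts the result so the most heavily used quota comes first.
func pollAll(sources []QuotaSource) []Snapshot {
	var out []Snapshot
	for _, src := range sources {
		snap, err := src.Fetch()
		if err != nil {
			fmt.Printf("%s: %v\n", src.Name(), err)
			continue
		}
		out = append(out, snap)
	}
	sort.Slice(out, func(i, j int) bool { return out[i].Fraction() > out[j].Fraction() })
	return out
}

func main() {
	sources := []QuotaSource{
		fakeSource{"openai", 300, 1000},
		fakeSource{"anthropic", 900, 1000},
		fakeSource{"copilot", 50, 300},
	}
	for _, s := range pollAll(sources) {
		fmt.Printf("%-10s %5.1f%%\n", s.Provider, 100*s.Fraction())
	}
}
```

Normalizing to a used-over-limit fraction is what makes quotas with different units comparable on one screen; skipping a failed provider, rather than aborting the whole poll, keeps one outage from blanking the dashboard.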

The tool’s architecture is deliberately minimal: it requires no external server, no cloud dependency, and no API key storage beyond the user’s local machine. This makes it ideal for security-conscious teams and individuals working with sensitive codebases. The entire codebase is publicly available on GitHub, allowing for full auditability—a rare and commendable feature in the AI tooling space, where opaque services often dominate.

Much of the online discussion, such as threads on Zhihu, centers on model releases like GPT-5 or theoretical comparisons between open-source AI frameworks and overlooks the infrastructure problems developers deal with every day. onWatch addresses one of those practical problems, turning quota management from a reactive scramble into a planned, data-driven process.

With Docker support and plans to add more providers, onWatch could become a go-to tool for AI API observability. As organizations scale their use of generative AI, tools like this will matter not for building models but for keeping the models developers depend on reliably available when they are needed most.