DDR5 RDIMM Prices Surpass NVIDIA 3090 per GB, Sparking Hardware Dilemma

As DDR5 RDIMM memory prices surge beyond the cost per gigabyte of NVIDIA RTX 3090 GPUs, tech builders face a startling choice: invest in raw memory or compute-capable graphics cards. The anomaly highlights broader supply chain distortions in the post-pandemic semiconductor market.

Across the global hardware ecosystem, an unusual economic inversion has emerged: the cost of DDR5 Registered DIMMs (RDIMMs) is reported to exceed that of NVIDIA RTX 3090 GPUs on a per-gigabyte basis. According to user reports on the r/LocalLLaMA subreddit, stacking high-capacity RDIMMs to reach terabytes of system memory has become as expensive as, or more expensive than, assembling multiple RTX 3090s, even though RDIMMs provide no computational power whatsoever.

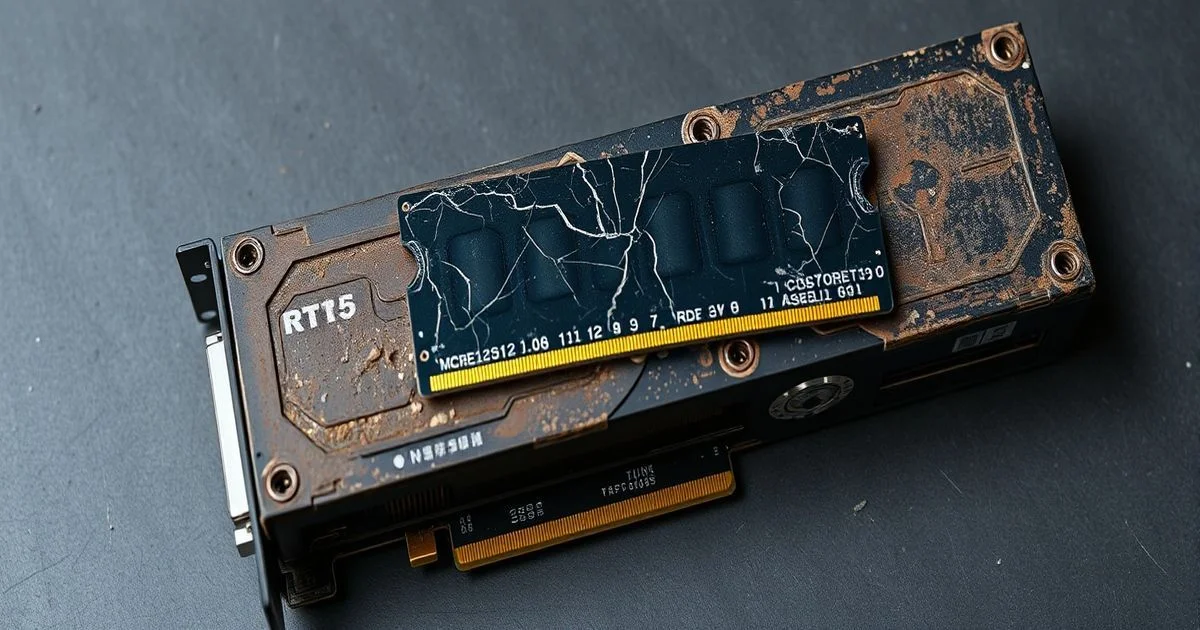

This anomaly, first flagged by a user identifying as /u/No_Afternoon_4260, has sparked heated debate among AI researchers, data center engineers, and high-end PC builders. The RTX 3090, a consumer-grade GPU released in 2020 with 24GB of GDDR6X memory, currently trades on the secondary market at roughly $800–$1,000 per unit. Meanwhile, a single 128GB DDR5 RDIMM, the type used in servers and high-end workstations, now retails for upwards of $1,200, meaning that one module can cost more than an entire 3090 GPU. When scaled to the multi-terabyte memory configurations required for large language model training or in-memory databases, the cost becomes economically jarring.
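To make the per-gigabyte comparison concrete, the following minimal Python sketch works only from the figures quoted above. The function names `price_per_gb` and `break_even_price` are illustrative, and the RDIMM price is treated as a floor because the quoted figure is "upwards of $1,200". It computes the cost per gigabyte of each part and the module price at which a 128GB RDIMM would match a used 3090 per gigabyte.

```python
def price_per_gb(price_usd: float, capacity_gb: float) -> float:
    """Return the cost of one gigabyte in US dollars."""
    if capacity_gb <= 0:
        raise ValueError("capacity_gb must be positive")
    return price_usd / capacity_gb


def break_even_price(ref_price_usd: float, ref_capacity_gb: float,
                     target_capacity_gb: float) -> float:
    """Price at which the target part costs the same per GB as the reference part."""
    return price_per_gb(ref_price_usd, ref_capacity_gb) * target_capacity_gb


# Figures quoted in the article; the RDIMM price is a lower bound.
RTX_3090_VRAM_GB = 24
RTX_3090_PRICE_RANGE_USD = (800.0, 1000.0)
RDIMM_CAPACITY_GB = 128
RDIMM_FLOOR_PRICE_USD = 1200.0

rdimm_per_gb = price_per_gb(RDIMM_FLOOR_PRICE_USD, RDIMM_CAPACITY_GB)
print(f"RDIMM at floor price: ${rdimm_per_gb:.2f}/GB")

for gpu_price in RTX_3090_PRICE_RANGE_USD:
    gpu_per_gb = price_per_gb(gpu_price, RTX_3090_VRAM_GB)
    parity = break_even_price(gpu_price, RTX_3090_VRAM_GB, RDIMM_CAPACITY_GB)
    print(f"3090 at ${gpu_price:.0f}: ${gpu_per_gb:.2f}/GB, "
          f"128GB RDIMM parity price ${parity:,.2f}")
```

Under these assumptions the RDIMM comes to about $9.38 per gigabyte at its floor price, against roughly $33 to $42 per gigabyte of VRAM on a used 3090, so per-gigabyte parity would require a 128GB module priced at roughly $4,300 to $5,300.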

The root cause lies in a confluence of supply chain disruptions, speculative hoarding, and manufacturing bottlenecks. DDR5 RDIMMs are primarily produced for enterprise and server markets, where demand surged during the AI boom of 2022–2024. Unlike consumer GDDR6X, which benefits from economies of scale and mass production for gaming GPUs, RDIMMs require a registering clock driver, ECC circuitry, and rigorous validation, all of which inflate costs. Meanwhile, NVIDIA’s 3090s, though discontinued, remain abundant on the used market because many owners have upgraded to the 40-series, and that secondary-market saturation has driven prices down.

For AI practitioners, this inversion raises a fundamental question: Is it more efficient to buy memory and rely on CPU-based inference, or to invest in GPUs and optimize for parallel compute? One user noted that a 1TB DDR5 RDIMM stack could cost over $10,000, while three RTX 3090s (72GB total VRAM) cost roughly $2,700 and offer not just storage but roughly 36 TFLOPS of FP16 compute per card. "You’re paying for a warehouse, not a factory," remarked one data scientist in the Reddit thread. "The RDIMMs are just shelves. The 3090s are shelves that also have robots on them."
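The capacity side of this trade-off can be sketched the same way. The snippet below is an illustration, not a sizing tool: it assumes 1TB means 1024GB (eight 128GB modules), takes $900 per card from the $2,700 quoted for three, and ignores motherboards, power, and interconnect. `cards_for_capacity` and `build_cost` are hypothetical helper names.

```python
import math


def cards_for_capacity(target_gb: int, vram_per_card_gb: int) -> int:
    """Smallest number of cards whose combined VRAM reaches target_gb."""
    if target_gb <= 0 or vram_per_card_gb <= 0:
        raise ValueError("capacities must be positive")
    return math.ceil(target_gb / vram_per_card_gb)


def build_cost(units: int, unit_price_usd: float) -> float:
    """Total hardware cost for a number of identical parts."""
    return units * unit_price_usd


TARGET_GB = 1024             # assumption: 1TB counted as 1024GB
VRAM_PER_3090_GB = 24        # from the article
PRICE_PER_3090_USD = 900.0   # assumption: $2,700 / 3 cards
RDIMM_STACK_FLOOR_USD = 10_000.0  # "over $10,000" for 1TB, treated as a floor

cards = cards_for_capacity(TARGET_GB, VRAM_PER_3090_GB)
gpu_total = build_cost(cards, PRICE_PER_3090_USD)

print(f"3090s needed for {TARGET_GB}GB of VRAM: {cards}")
print(f"GPU-only cost at ${PRICE_PER_3090_USD:.0f} each: ${gpu_total:,.0f}")
print(f"1TB RDIMM stack floor price: ${RDIMM_STACK_FLOOR_USD:,.0f}")
```

With these inputs, matching 1TB of capacity purely in VRAM takes 43 cards at about $38,700, which is why the thread frames the decision as capacity versus compute rather than a like-for-like swap.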

Industry analysts suggest this trend may be temporary. As DDR5 production ramps up and enterprise demand stabilizes, RDIMM prices are expected to normalize by late 2025. However, the incident underscores a broader fragility in the hardware supply chain: when memory and compute become misaligned in pricing, innovation stalls. Startups and academic labs, already constrained by budgets, may be forced to abandon memory-intensive workloads or pivot to cloud-based solutions.

Meanwhile, hardware vendors are quietly adjusting their product roadmaps. Companies like AMD and Intel are accelerating development of HBM3E-integrated CPUs and next-gen memory controllers to reduce reliance on discrete RDIMMs. The market, in effect, is signaling that raw memory bandwidth alone is no longer sufficient — the future belongs to integrated compute-memory architectures.

For now, the choice remains stark: pay for storage without intelligence, or pay for intelligence that happens to come with storage. In a world increasingly defined by data, the answer may not be about what’s cheaper — but what’s smarter.