AMD NPU Breakthrough: First Full Llama 3.2 1B Inference on Linux Without CPU/GPU Fallback

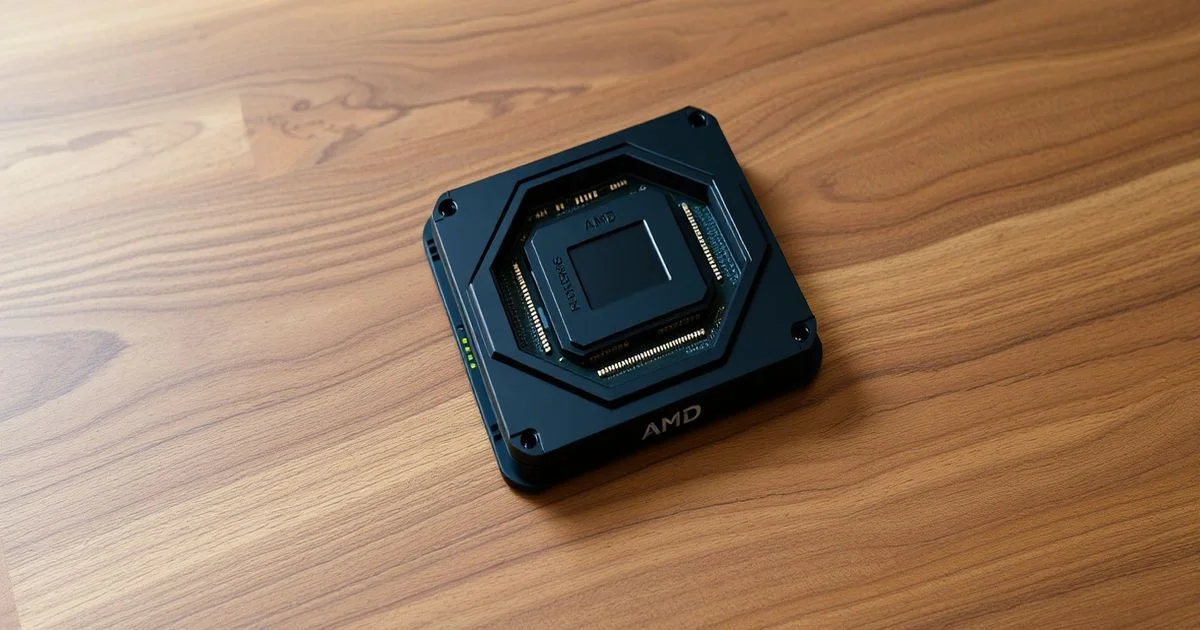

A groundbreaking technical achievement demonstrates the first fully native Llama 3.2 1B inference on an AMD NPU under Linux, bypassing traditional GPU and CPU reliance. The feat, achieved using AMD’s IRON framework and XDNA2 hardware, reveals both the potential and software bottlenecks of next-gen AI accelerators.




In a landmark development for open-source AI acceleration, an independent researcher has run the Meta Llama 3.2 1B model entirely on the NPU of an AMD Ryzen AI Max+ 395 under Linux, marking the first publicly documented instance of a large language model running on this platform without any CPU or GPU fallback. The achievement, detailed in a Reddit post by user /u/SuperTeece, uses AMD’s IRON framework and the XDNA2 architecture to execute every computational component directly on the NPU, including attention, GEMM operations, RoPE embeddings, and KV cache management. The milestone suggests that LLM inference on consumer-grade hardware need not depend on the CPU or GPU.

The system, built on Fedora 43 with kernel 6.18.8, used the official Meta Llama 3.2 1B weights and achieved a sustained decode rate of 4.4 tokens per second. While modest compared to GPU-based inference speeds, the result is significant because it indicates that the NPU hardware is not the limiting factor. The NPU, validated at 51.0 TOPS via xrt-smi, offers raw compute comparable to mid-tier AI accelerators. The bottleneck, as profiling revealed, lies in software: 75% of inference time is consumed by 179 kernel dispatches per token, each averaging 1.4 ms of overhead. The real challenge is therefore compiler optimization, operator fusion, and runtime efficiency rather than hardware.
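The reported figures can be cross-checked with simple arithmetic. The sketch below is not the researcher's profiling code; it only combines the numbers quoted above. The function names are hypothetical, and the projection assumes that dispatch overhead could be reduced without changing anything else.

```python
# Figures as reported in the article.
DECODE_TOK_PER_S = 4.4       # sustained decode rate
DISPATCHES_PER_TOKEN = 179   # kernel dispatches per generated token
DISPATCH_SHARE = 0.75        # share of inference time attributed to dispatch


def per_token_latency_ms(tok_per_s: float) -> float:
    """Wall-clock time spent on one generated token, in milliseconds."""
    return 1000.0 / tok_per_s


def implied_overhead_ms(tok_per_s: float, dispatches: int, share: float) -> float:
    """Per-dispatch overhead implied by the dispatch share of per-token time."""
    return share * per_token_latency_ms(tok_per_s) / dispatches


def projected_decode_rate(tok_per_s: float, share: float, reduction: float) -> float:
    """Decode rate if a fraction `reduction` of dispatch time were removed.

    Assumption: the non-dispatch part of the per-token time stays unchanged.
    """
    new_latency_ms = per_token_latency_ms(tok_per_s) * (1.0 - share * reduction)
    return 1000.0 / new_latency_ms


if __name__ == "__main__":
    print(f"per-token latency: {per_token_latency_ms(DECODE_TOK_PER_S):.1f} ms")
    overhead = implied_overhead_ms(DECODE_TOK_PER_S, DISPATCHES_PER_TOKEN, DISPATCH_SHARE)
    print(f"implied overhead per dispatch: {overhead:.2f} ms")
    for reduction in (0.5, 1.0):
        rate = projected_decode_rate(DECODE_TOK_PER_S, DISPATCH_SHARE, reduction)
        print(f"dispatch time cut by {reduction:.0%}: {rate:.2f} tok/s")
```

At 4.4 tok/s each token takes about 227 ms, so a 75% dispatch share works out to roughly 0.95 ms per dispatch. Taken at face value, 179 dispatches at 1.4 ms each would total about 251 ms, which is more than the per-token budget, so the 1.4 ms figure was presumably measured differently (for example, from a different run or including kernel execution). Under the stated assumption, removing all dispatch overhead would cap decode at about 17.6 tok/s.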

The stack required meticulous assembly. The in-tree amdxdna driver (v0.1) was incompatible, so the researcher compiled the out-of-tree v1.0.0 driver from AMD’s GitHub repository. XRT 2.23, mlir-aie v1.2.0, and the IRON framework were also built from source. GCC 15 linker issues and LLVM version mismatches complicated the build and required manually set environment variables and symbolic links to bridge compatibility gaps. The first run needed nearly 10 minutes of kernel compilation, but subsequent runs used cached kernels, which suggests that practical deployment is feasible with proper toolchain automation.
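The difference between the slow first run and the fast later runs is what a compile cache typically produces. The sketch below is a generic illustration of that idea, not a description of how IRON or mlir-aie actually cache kernels: `compile_cached`, `cache_key`, and the stand-in `fake_compile` are all hypothetical names, and keying the cache on source text plus a toolchain version string is an assumption.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable


def cache_key(source: str, toolchain_version: str) -> str:
    """Hash the kernel source together with the toolchain version."""
    digest = hashlib.sha256()
    digest.update(toolchain_version.encode("utf-8"))
    digest.update(b"\0")  # separator so the two fields cannot run together
    digest.update(source.encode("utf-8"))
    return digest.hexdigest()


def compile_cached(
    source: str,
    toolchain_version: str,
    cache_dir: Path,
    compile_fn: Callable[[str], bytes],
) -> bytes:
    """Return a compiled artifact, invoking compile_fn only on a cache miss."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    artifact = cache_dir / (cache_key(source, toolchain_version) + ".bin")
    if artifact.exists():
        return artifact.read_bytes()
    binary = compile_fn(source)
    artifact.write_bytes(binary)
    return binary


if __name__ == "__main__":
    calls = []

    def fake_compile(src: str) -> bytes:
        # Stand-in for a slow compiler invocation.
        calls.append(src)
        return src.upper().encode("utf-8")

    with tempfile.TemporaryDirectory() as tmp:
        cache = Path(tmp)
        compile_cached("gemm kernel", "v1.2.0", cache, fake_compile)
        compile_cached("gemm kernel", "v1.2.0", cache, fake_compile)
        print(f"compiler invoked {len(calls)} time(s)")
```

Including the toolchain version in the key means that a toolchain upgrade invalidates old artifacts automatically, at the cost of one more slow run.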

Decode performance stayed consistent across prompt lengths, at 4.4 tok/s with a 13-token prompt and 4.34 tok/s with 2048 tokens, while prefill speed scaled from 19 to 923 tokens per second. This asymmetry indicates that the NPU excels at parallelized context processing but suffers from high per-token dispatch latency. For context, the same machine ran Qwen3-32B (32x larger) at 6–7 tok/s on the GPU via Vulkan, which points to software inefficiency rather than hardware limits as the primary constraint.
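To see what these rates mean for response time, the following sketch estimates prefill and decode durations from the reported throughput. It assumes that the 19 tok/s prefill figure belongs to the 13-token prompt and the 923 tok/s figure to the 2048-token prompt, which the article implies but does not state outright. The 100-token reply length and the function names are hypothetical.

```python
def prefill_seconds(prompt_tokens: int, prefill_tok_per_s: float) -> float:
    """Time to process the prompt before the first output token."""
    return prompt_tokens / prefill_tok_per_s


def decode_seconds(new_tokens: int, decode_tok_per_s: float) -> float:
    """Time to generate new_tokens one at a time."""
    return new_tokens / decode_tok_per_s


def total_seconds(prompt_tokens: int, prefill_rate: float,
                  new_tokens: int, decode_rate: float) -> float:
    """End-to-end time: prefill followed by decode."""
    return (prefill_seconds(prompt_tokens, prefill_rate)
            + decode_seconds(new_tokens, decode_rate))


if __name__ == "__main__":
    reply_tokens = 100  # hypothetical reply length
    # (prompt tokens, prefill tok/s, decode tok/s); pairing is an assumption
    cases = [(13, 19.0, 4.4), (2048, 923.0, 4.34)]
    for prompt, prefill_rate, decode_rate in cases:
        ttft = prefill_seconds(prompt, prefill_rate)
        total = total_seconds(prompt, prefill_rate, reply_tokens, decode_rate)
        print(f"prompt={prompt}: prefill {ttft:.2f} s, total {total:.2f} s")
```

Under these assumptions, a prompt more than 150 times longer adds only about 1.5 seconds of prefill, while generating 100 tokens takes roughly 23 seconds in both cases. Decode dominates the end-to-end time, which is consistent with the per-token dispatch overhead described above.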

This breakthrough carries broader implications for Linux-based AI ecosystems. Unlike proprietary systems reliant on NVIDIA’s CUDA, it opens a path for AMD’s NPU to become a viable, independent inference engine for edge AI, embedded systems, and privacy-sensitive applications. Because the NPU operates independently, it could run LLM inference while the GPU handles graphics or other compute tasks, enabling heterogeneous computing on consumer laptops.

While the current implementation is a proof-of-concept requiring deep technical expertise, it lays the foundation for future frameworks that could democratize NPU-based AI. The researcher has published a three-part technical walkthrough and welcomes community collaboration. As AMD continues to invest in open-source AI tooling, this milestone may be remembered as the moment Linux-based AI acceleration moved from theoretical possibility to tangible reality.

Editor’s Note: The project was conducted with AI-assisted research by Ellie (Claude Opus 4.6), with hardware and editorial guidance from TC. The team has emphasized transparency and invites technical critique to ensure accuracy and prevent misinformation.

Verification Panel

Source Count: 1

First Published: 22 February 2026

Last Updated: 22 February 2026