AI Scaling on Multiple GPUs: Host-Device Dynamics and Distributed Operations

As AI workloads demand unprecedented computational power, understanding host-device paradigms and PyTorch’s distributed operations is critical for scaling deep learning models. This investigation synthesizes technical insights from leading AI education platforms to decode the infrastructure behind multi-GPU training.


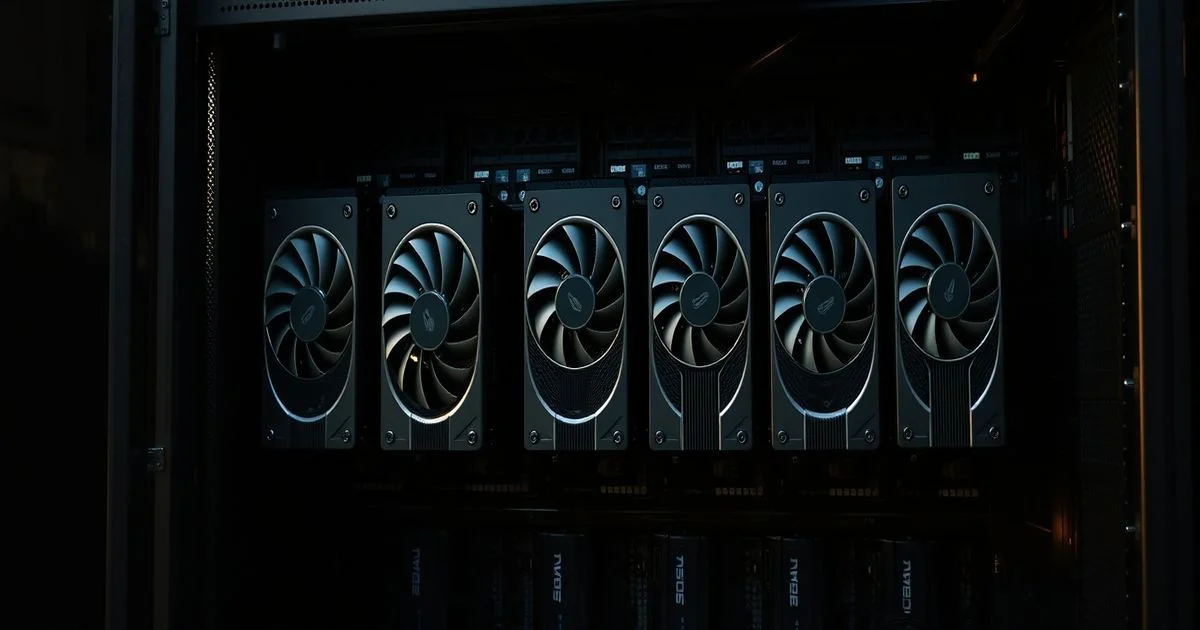

The rapid evolution of artificial intelligence has pushed the boundaries of hardware scalability, with multi-GPU systems now serving as the backbone for training large language models and complex neural networks. At the heart of this infrastructure lies the interplay between the host (CPU) and device (GPU) memory spaces, together with the point-to-point and collective operations that move data between GPUs. According to Towards Data Science, mastering these paradigms is no longer optional for AI engineers; it is fundamental to efficient, high-throughput model training.

The host-device paradigm, as detailed in a February 2026 article on Towards Data Science, establishes a clear division of labor: the CPU (host) manages control flow, data preprocessing, and orchestration, while the GPUs (devices) execute parallelized tensor computations. Because host and device have separate memory spaces, data must be copied explicitly between them, typically over the PCIe bus, and every copy adds latency that stalls the GPU unless it overlaps with computation. Poorly synchronized transfers, or an input pipeline that cannot deliver batches fast enough, leave GPUs underutilized, a costly inefficiency in enterprise-scale AI deployments. The article emphasizes that understanding this boundary is the first step toward unlocking the full potential of multi-GPU architectures.
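A minimal PyTorch sketch of the host-device boundary described above. The toy dataset, the single linear layer, and the SGD settings are hypothetical choices for illustration, not taken from the article, and the script falls back to the CPU when no CUDA device is present. It shows three habits: pinning host memory, issuing non-blocking host-to-device copies, and recognizing that `.item()` is a synchronization point.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Use a GPU if one is visible; otherwise the same code runs on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
use_cuda = device.type == "cuda"

# Hypothetical toy regression data held in host (CPU) memory.
torch.manual_seed(0)
dataset = TensorDataset(torch.randn(256, 16), torch.randn(256, 1))
loader = DataLoader(
    dataset,
    batch_size=32,
    shuffle=True,
    pin_memory=use_cuda,  # page-locked host buffers make async host-to-device copies possible
)

# Parameters (and the optimizer's view of them) live in device memory.
model = torch.nn.Linear(16, 1).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)


def train_one_epoch() -> float:
    """Run one pass over the loader and return the mean training loss."""
    total = 0.0
    for x, y in loader:
        # Host -> device copy. From pinned memory, non_blocking=True lets the
        # CPU enqueue the copy and move on instead of waiting for it.
        x = x.to(device, non_blocking=True)
        y = y.to(device, non_blocking=True)

        loss = torch.nn.functional.mse_loss(model(x), y)
        optimizer.zero_grad(set_to_none=True)
        loss.backward()
        optimizer.step()

        # .item() copies the scalar back to the host, so the CPU must wait for
        # the GPU to finish; doing this every step is an easy-to-miss sync point.
        total += loss.item()
    return total / len(loader)


print(f"device={device}, mean loss={train_one_epoch():.4f}")
```

In a real pipeline, setting `num_workers` above zero on the `DataLoader` moves preprocessing into background CPU processes so the next batch is ready while the GPU computes; it is left at the default here to keep the sketch self-contained.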

Building on this foundation, PyTorch's distributed operations let training span multiple GPUs, either within a single machine or across a cluster. They fall into two families. Point-to-point operations (e.g., send() and recv()) exchange data directly between one specific pair of processes, which is useful for custom topologies or for passing activations between pipeline stages in model parallelism. Collective operations (e.g., all_reduce(), broadcast(), gather()), by contrast, involve every process in a group at once, which makes all_reduce() the natural way to aggregate gradients in data-parallel training. These operations run on communication backends such as NCCL (NVIDIA Collective Communications Library), which is optimized for GPU-to-GPU transfers over high-bandwidth interconnects like NVLink, PCIe, and InfiniBand, and Gloo, which is commonly used for CPU tensors.
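The sketch below exercises each operation named above with `torch.distributed` on CPU tensors, so it runs without any GPU. Assumptions: Gloo stands in for NCCL, the processes are started with the Linux `fork` method so the script runs top to bottom without a `__main__` guard, Gloo is pinned to the Linux loopback interface `lo` for a single-machine run, and the helper names `_worker`, `_free_port`, and `run_demo` plus all tensor values are hypothetical.

```python
import datetime
import os
import socket

import torch
import torch.distributed as dist
import torch.multiprocessing as mp


def _free_port() -> int:
    """Ask the OS for an unused TCP port for the rendezvous."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.bind(("127.0.0.1", 0))
        return s.getsockname()[1]


def _worker(rank: int, world_size: int, port: int, results) -> None:
    # Every process joins the same group; rank 0 hosts the TCP rendezvous.
    dist.init_process_group(
        backend="gloo",  # CPU tensors; NCCL is the usual choice for CUDA tensors
        init_method=f"tcp://127.0.0.1:{port}",
        rank=rank,
        world_size=world_size,
        timeout=datetime.timedelta(seconds=60),
    )
    try:
        # Collective: each rank contributes rank + 1; after SUM every rank holds the total.
        grad = torch.tensor([float(rank + 1)])
        dist.all_reduce(grad, op=dist.ReduceOp.SUM)

        # Collective: rank 0's tensor overwrites the buffer on every other rank.
        weights = torch.tensor([42.0]) if rank == 0 else torch.zeros(1)
        dist.broadcast(weights, src=0)

        # Collective: rank 0 receives one tensor from each rank, in rank order.
        bucket = [torch.zeros(1) for _ in range(world_size)] if rank == 0 else None
        dist.gather(torch.tensor([float(rank)]), gather_list=bucket, dst=0)

        # Point-to-point: only ranks 0 and 1 take part; any other rank skips this.
        received = None
        if rank == 0:
            dist.send(torch.tensor([7.0]), dst=1)
        elif rank == 1:
            buf = torch.zeros(1)
            dist.recv(buf, src=0)
            received = buf.item()

        gathered = [t.item() for t in bucket] if rank == 0 else None
        results.put((rank, grad.item(), weights.item(), gathered, received))
    finally:
        dist.destroy_process_group()


def run_demo(world_size: int = 2) -> list:
    """Launch world_size CPU processes and return each rank's results, sorted by rank."""
    if world_size < 2:
        raise ValueError("the send/recv step needs at least two ranks")
    # Single-machine demo: keep Gloo traffic on the Linux loopback interface.
    os.environ.setdefault("GLOO_SOCKET_IFNAME", "lo")
    ctx = mp.get_context("fork")
    results = ctx.SimpleQueue()
    mp.start_processes(
        _worker,
        args=(world_size, _free_port(), results),
        nprocs=world_size,
        join=True,
        start_method="fork",  # Linux-only convenience; use spawn and a __main__ guard with CUDA
    )
    return sorted(results.get() for _ in range(world_size))


rows = run_demo(2)
for row in rows:
    print(row)
```

To move this to GPUs, one would typically switch to `backend="nccl"`, place each rank's tensors on its own CUDA device, and launch one process per GPU with `torchrun` instead of forking.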

While the Towards Data Science article provides a robust theoretical framework, real-world implementation remains challenging. Engineers frequently encounter bottlenecks caused by network congestion, memory fragmentation, or suboptimal batch sizes. Debugging distributed systems also requires deep visibility into GPU memory usage, communication timelines, and synchronization points, and the open-source tooling that provides this visibility is still evolving.
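Within PyTorch, one built-in starting point for this kind of visibility is `torch.profiler`. The sketch below profiles a hypothetical host-to-device copy, a matrix multiply, and a device-to-host read, labeling each phase with `record_function` so it appears as a named row. The tensor size and range names are illustrative assumptions; without a GPU the script still runs, and the "copy" is then a no-op.

```python
import torch
from torch.profiler import ProfilerActivity, profile, record_function

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Always record CPU activity; add GPU kernels and copies when a GPU is present.
activities = [ProfilerActivity.CPU]
if device.type == "cuda":
    activities.append(ProfilerActivity.CUDA)

host_batch = torch.randn(1024, 1024)  # hypothetical input in host memory

with profile(activities=activities, profile_memory=True, record_shapes=True) as prof:
    # Named ranges make each phase easy to find in the summary and the timeline.
    with record_function("h2d_copy"):
        x = host_batch.to(device)  # no-op when device is the CPU
    with record_function("matmul"):
        y = x @ x
    with record_function("d2h_sync"):
        checksum = y.sum().item()  # forces the host to wait for the device

# Per-operator summary; prof.export_chrome_trace(path) would write the full timeline as JSON.
print(prof.key_averages().table(sort_by="cpu_time_total", row_limit=10))

if device.type == "cuda":
    # Caching-allocator statistics complement the profiler's per-operator memory columns.
    print(f"allocated={torch.cuda.memory_allocated() / 2**20:.1f} MiB, "
          f"peak={torch.cuda.max_memory_allocated() / 2**20:.1f} MiB")
```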

Interestingly, Microsoft’s support forums and the Japanese translation database Weblio, which also surfaced in this research, do not address GPU scaling directly, yet their appearance underscores a broader truth: AI infrastructure is becoming a global, interdisciplinary field. Technical documentation, community troubleshooting, and linguistic clarity all contribute to the accessibility of complex systems. The absence of functional Microsoft support pages for multi-GPU queries (a related forum page returned a 404 error) highlights a gap in mainstream enterprise documentation, leaving developers to rely heavily on community-driven resources like Towards Data Science.

Looking ahead, the convergence of AI model complexity and hardware specialization will demand even tighter integration between software frameworks and hardware architectures. Interconnect technologies such as NVLink, RDMA over Converged Ethernet (RoCE), and their next-generation successors promise to reduce communication overhead further. However, without a deep understanding of the host-device paradigm and the semantics of collective operations, even the most advanced hardware will remain underutilized.

For AI practitioners, the path forward is clear: invest in foundational knowledge of distributed computing principles. Whether training a billion-parameter model or optimizing inference latency, the ability to orchestrate data flow across multiple GPUs remains one of the most critical skills in modern machine learning. As the field matures, those who master these low-level details will lead the next wave of AI innovation.