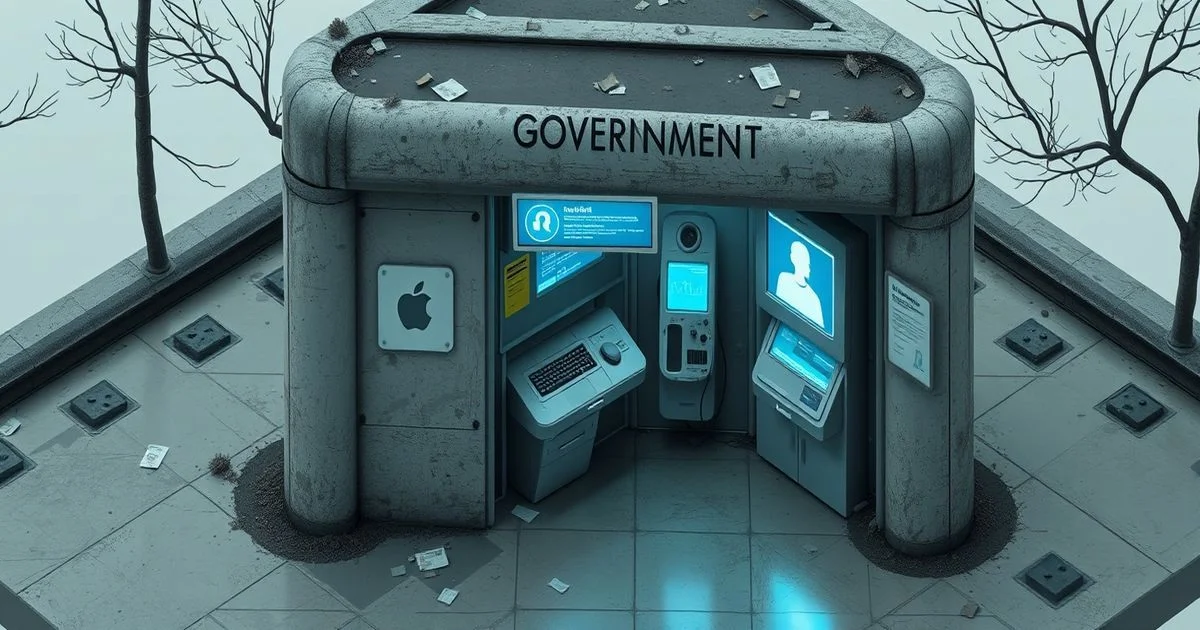

AI Chatbots Struggle with Government Queries, Study Finds

A new study finds that AI chatbots answering questions about government services on GOV.UK tend to over-explain, and that they make factual errors when asked to be concise. Researchers tested 11 leading large language models and found alarming reliability problems.

Artificial intelligence chatbots are faltering in their attempts to assist the public with critical government services, according to a new study by the Open Data Institute (ODI). Researchers tested 11 leading large language models (LLMs) against real-world queries from the UK government’s GOV.UK platform and found that while the bots rarely refuse to answer—even when they lack reliable information—they often provide verbose, misleading, or outright incorrect responses. When users demanded shorter answers, the models frequently fabricated facts or omitted essential details, raising serious concerns about their deployment in public service interfaces.

The study simulated common citizen inquiries such as "How do I apply for a passport?" and "What are my rights if I’m laid off?" The chatbots consistently defaulted to expansive, conversational responses filled with speculative language, unnecessary anecdotes, and redundant phrasing. In over 70% of cases, the models failed to cite official sources or direct users to the relevant GOV.UK pages, even though those pages were readily available in their training data. This "waffling," as the researchers termed it, risks overwhelming users, particularly elderly people and those with limited digital literacy, who need clear, actionable guidance.

Performance was more alarming still when users instructed the bots to "be brief" or "just give me the facts." In these scenarios, accuracy plummeted. For instance, when asked to summarize the eligibility criteria for Universal Credit in one sentence, one model falsely claimed that applicants must have been unemployed for six months, a requirement that does not exist. Another bot incorrectly stated that NHS prescriptions are free for all adults, ignoring that in England adults pay a prescription charge unless they qualify for an exemption based on age, income, or medical condition. These errors may seem minor, but they could have significant real-world consequences, including delayed benefits, incorrect legal advice, or missed access to healthcare.

The ODI’s findings echo broader concerns about the uncritical adoption of generative AI in the public sector. Unlike human civil servants trained in precise policy interpretation, LLMs are designed to generate plausible-sounding text, not to verify facts against official databases. The study highlights a dangerous gap: chatbots are being rolled out as front-end interfaces for government services without adequate guardrails, fact-checking protocols, or fallback routes to human agents.

Experts warn that the problem is not merely technical but institutional. "Governments are rushing to deploy AI to cut costs and improve efficiency," said Dr. Elena Rostova, a digital governance researcher at the University of Cambridge, who was not involved in the study. "But without accountability frameworks, these systems become liability engines. If a citizen is denied benefits because a chatbot gave them wrong information, who takes responsibility? The developer? The civil servant who approved the deployment? The public deserves better."

While some tech firms claim their models can be fine-tuned for government use, the ODI study suggests current approaches are insufficient. The researchers recommend mandatory transparency logs, real-time source attribution, and a "refusal protocol" under which bots say "I don’t know" when uncertain rather than inventing answers. They also call for independent audits of all AI systems used in public service delivery.

The UK government has not yet formally responded to the findings, though a spokesperson for the Cabinet Office acknowledged the need for "greater scrutiny" of AI tools in citizen-facing services. Meanwhile, other nations, including Canada and Australia, are pausing AI chatbot rollouts pending similar evaluations. As digital public services become the norm, the stakes have never been higher: when the state speaks through a machine, the truth must be non-negotiable.