AI and Multi-GPU Computing: Decoding the Host-Device Paradigm

As artificial intelligence systems demand unprecedented computational power, the host-device paradigm has become the backbone of modern GPU-accelerated computing. This article explains how a CPU and multiple GPUs divide and coordinate the work between them.

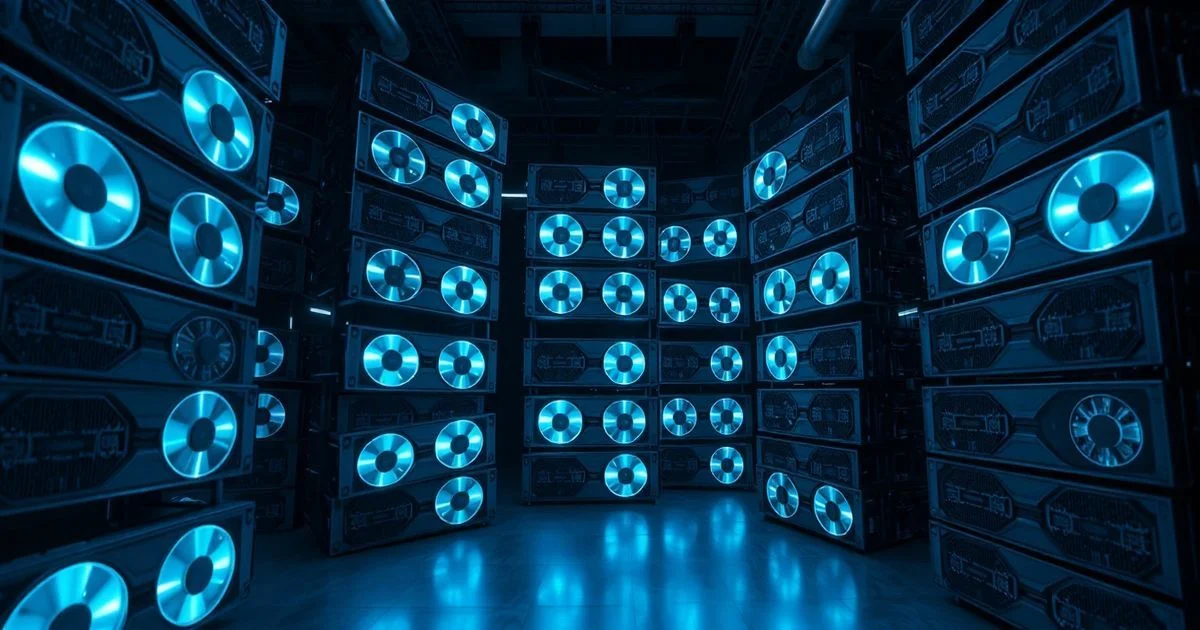

In the rapidly evolving landscape of artificial intelligence, the ability to harness the parallel processing power of multiple GPUs has become indispensable. At the core of this capability lies the host-device paradigm, the architectural model that governs how central processing units (CPUs) and graphics processing units (GPUs) communicate and coordinate work in high-performance computing environments. Whether a system has one GPU or several, the mechanism rests on a precise division of labor between the host (the CPU) and the devices (the GPUs). That model is increasingly critical for training large language models, running complex simulations, and accelerating deep learning workflows.

The host-device paradigm rests on a clear separation of responsibilities. The host, typically a CPU, handles high-level orchestration: loading data, launching kernels, managing memory transfers, and scheduling tasks across one or more GPU devices. The devices, each a GPU, execute the computationally intensive operations, using thousands of cores to process large datasets in parallel. This division is not merely a performance optimization. It follows from the architectural differences between general-purpose, latency-oriented CPUs and specialized, data-parallel GPUs. As an explainer published on Towards Data Science notes, understanding this paradigm is essential for developers who want to scale AI applications beyond the limits of a single GPU.
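To make the division of labor concrete, here is a minimal Python sketch that simulates it without any GPU. `ToyDevice`, `host_to_device`, `device_to_host`, and `orchestrate` are hypothetical names invented for illustration, not part of CUDA or any library; each toy device simply owns a separate dictionary standing in for device memory. The host partitions the data, copies each shard in, launches a "kernel" (here, squaring each element), and gathers the results.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ToyDevice:
    """Hypothetical stand-in for a GPU: it owns a memory space separate from the host's."""
    name: str
    memory: dict = field(default_factory=dict)

    def launch(self, kernel: Callable[[int], int], src: str, dst: str) -> None:
        # A "kernel" can only touch buffers that are already resident on this device.
        if src not in self.memory:
            raise RuntimeError(f"{src!r} is not in {self.name} memory; copy it there first")
        self.memory[dst] = [kernel(x) for x in self.memory[src]]


def host_to_device(host_data: List[int], device: ToyDevice, key: str) -> None:
    # Explicit copy: the device gets its own list, not a reference to the host's.
    device.memory[key] = list(host_data)


def device_to_host(device: ToyDevice, key: str) -> List[int]:
    # Explicit copy back: the host receives a fresh list.
    return list(device.memory[key])


def orchestrate(data: List[int], devices: List[ToyDevice]) -> List[int]:
    """Host-side orchestration: partition, copy in, launch, copy out, gather."""
    n = len(devices)
    chunk = (len(data) + n - 1) // n  # ceiling division so no element is dropped
    results: List[int] = []
    for i, dev in enumerate(devices):
        shard = data[i * chunk:(i + 1) * chunk]
        host_to_device(shard, dev, "in")
        dev.launch(lambda x: x * x, "in", "out")  # the "kernel": square each element
        results.extend(device_to_host(dev, "out"))
    return results


if __name__ == "__main__":
    gpus = [ToyDevice("gpu0"), ToyDevice("gpu1"), ToyDevice("gpu2")]
    print(orchestrate(list(range(10)), gpus))
```

The loop runs sequentially for readability. In CUDA, kernel launches and `cudaMemcpyAsync` copies return control to the host without waiting for the device, which is what lets a single CPU thread keep several GPUs busy at once.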

Adding more GPUs increases the complexity significantly. Developers must manage memory allocation across device boundaries, synchronize operations between GPUs, and avoid bottlenecks caused by inefficient data transfers. Programming platforms such as CUDA and OpenCL provide the tools to address these challenges, but using them effectively requires a solid grasp of how the host and the devices communicate. In the classic model, for instance, data must be explicitly copied from host memory to device memory before a computation, and the results must be explicitly copied back. Managing these transfers poorly can degrade performance enough to cancel out the benefit of the added hardware.
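A back-of-the-envelope model shows how communication can erase the gain from extra GPUs. The sketch below assumes, purely for illustration, that compute divides perfectly across GPUs, that a single GPU needs no communication, and that multi-GPU communication does not overlap with compute. Under those assumptions the per-step time on `n` GPUs is `compute_s / n + comm_bytes / bandwidth`. The function names and the input numbers are hypothetical, not measurements of any real system.

```python
def step_time(compute_s: float, num_gpus: int, comm_bytes: float, bandwidth_gb_s: float) -> float:
    """Seconds per training step under a deliberately simple model.

    Assumptions: compute splits perfectly across GPUs, one GPU needs no
    communication, and communication does not overlap with compute.
    """
    comm_s = 0.0 if num_gpus == 1 else comm_bytes / (bandwidth_gb_s * 1e9)
    return compute_s / num_gpus + comm_s


def speedup(compute_s: float, num_gpus: int, comm_bytes: float, bandwidth_gb_s: float) -> float:
    """Ratio of single-GPU step time to num_gpus step time (below 1.0 means slower)."""
    single = step_time(compute_s, 1, comm_bytes, bandwidth_gb_s)
    return single / step_time(compute_s, num_gpus, comm_bytes, bandwidth_gb_s)


if __name__ == "__main__":
    # Illustrative inputs only: 0.1 s of compute and 1 GB exchanged per step.
    for bw in (100.0, 10.0):
        print(f"{bw:>5.0f} GB/s link, 4 GPUs: {speedup(0.1, 4, 1e9, bw):.2f}x")
```

With these inputs, four GPUs on a 100 GB/s link take 0.025 s + 0.01 s per step, roughly a 2.9x speedup, while the same four GPUs on a 10 GB/s link take 0.125 s per step and end up slower than one GPU. Overlapping communication with compute, which production frameworks try to do, would soften this penalty.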

Interestingly, the idea of "multiple" extends beyond hardware. In software ecosystems such as Angular and TYPO3, "multiple content" refers to aggregating discrete content elements into a unified interface, a loose parallel to how multiple GPUs pool their computational power behind a single program. These use cases are distinct from GPU computing, but they reflect a broader trend in system design: the need to coordinate many components within a unified, scalable architecture. The TYPO3 Extension Repository, for example, describes arranging multiple content elements into tabs, sliders, or accordions, which is loosely analogous to the way AI frameworks distribute workloads across multiple GPUs.

Recent advances in NVLink and PCIe 5.0 have substantially increased the bandwidth available for moving data between GPUs and between host and device, easing the communication bottleneck that once held multi-GPU systems back. Modern AI libraries such as PyTorch and TensorFlow also offer built-in support for multi-GPU training through data parallelism and model parallelism, hiding much of the low-level complexity from developers. Beneath these abstractions, however, the host-device paradigm is unchanged: the CPU still acts as the conductor, orchestrating parallel computation across the GPU ensemble.
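Data parallelism gives every GPU a full copy of the model and a different slice of each batch. After the backward pass, the gradients are averaged across GPUs with an all-reduce so that every replica applies the same update; PyTorch's `DistributedDataParallel` is built on this principle. The pure-Python sketch below, with hypothetical function names and no real GPUs or communication library, checks the key property on a one-parameter linear model trained with mean squared error: averaging per-shard gradients over equal-sized shards reproduces the full-batch gradient.

```python
from typing import List


def local_gradient(w: float, xs: List[float], ys: List[float]) -> float:
    """d/dw of mean squared error for the 1-D model y_hat = w * x on one shard."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def all_reduce_mean(values: List[float]) -> List[float]:
    """Stand-in for an all-reduce: every replica ends up holding the same average."""
    avg = sum(values) / len(values)
    return [avg] * len(values)


def data_parallel_step(w: float, xs: List[float], ys: List[float],
                       num_replicas: int, lr: float) -> List[float]:
    """One synchronous data-parallel SGD step; returns each replica's updated weight."""
    if len(xs) % num_replicas != 0:
        raise ValueError("this sketch assumes the batch splits into equal-sized shards")
    size = len(xs) // num_replicas
    # Each replica holds the same w but computes a gradient on its own shard.
    grads = [local_gradient(w, xs[i * size:(i + 1) * size], ys[i * size:(i + 1) * size])
             for i in range(num_replicas)]
    synced = all_reduce_mean(grads)
    # Identical averaged gradients mean identical updates, so replicas stay in sync.
    return [w - lr * g for g in synced]


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
    print(local_gradient(0.0, xs, ys))               # full-batch gradient
    print(data_parallel_step(0.0, xs, ys, 2, 0.01))  # two replicas, same update
```

Because the shards are equal in size, the mean of the shard gradients equals the full-batch gradient (-30 here for `w = 0`), so two or four replicas take exactly the step a single GPU would. With unequal shards, a plain mean would over-weight samples in the smaller shards.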

For enterprises deploying AI at scale, from autonomous vehicle manufacturers to financial institutions running risk simulations, mastering the host-device paradigm is no longer optional. Misconfigurations can leave hardware underutilized, raise energy consumption, and delay model deployment. Well-optimized implementations, by contrast, can shorten training times dramatically, in favorable cases from weeks to hours, opening new possibilities in AI research and commercial applications.

As AI continues to push the boundaries of computational demand, the host-device model will remain foundational. Future designs may blur the line between CPU and GPU architectures, a direction already visible in unified memory systems and emerging AI accelerators. For now, though, the clear delineation between host and device remains the bedrock of scalable, efficient AI computing.