A11yShape Empowers Low-Vision Programmers in 3D Design

A new prototype tool, A11yShape, lets blind and low-vision programmers create and refine 3D models independently. The software combines AI-generated descriptions with a semantic organization of the model to close the accessibility gap in a field traditionally reliant on visual interaction.

For years, 3D modeling has remained largely out of reach for blind and low-vision programmers. Its reliance on visual interaction, such as dragging and rotating 3D objects, has been a significant hurdle, effectively barring interested individuals from fields such as hardware design, robotics, and advanced coding. A new prototype program named A11yShape aims to change this by offering a path to independent 3D design for people with visual impairments.

Existing text-based tools like OpenSCAD, along with recent large-language-model-driven tools that generate 3D code from natural language prompts, offer partial solutions, but they often still require sighted feedback to reconcile the code with its visual output. As reported by IEEE Spectrum, blind and low-vision programmers previously had to depend on colleagues to verify every iteration of a model, which created a bottleneck and limited their autonomy. A11yShape aims to eliminate this dependency by providing accessible model descriptions, organizing designs into a semantic hierarchy, and integrating with screen readers.

The genesis of A11yShape lies in a direct conversation between Liang He, an assistant professor of computer science at the University of Texas at Dallas, and a low-vision classmate studying 3D modeling. Recognizing the challenges his classmate faced, He was inspired to transform coding strategies learned in a specialized 3D modeling course for blind programmers at the University of Washington into a streamlined, accessible tool. "I want to design something useful and practical for the group," He stated, emphasizing his commitment to creating a solution grounded in real-world needs rather than abstract concepts.

Reimagining Assistive 3D Design with OpenSCAD Integration

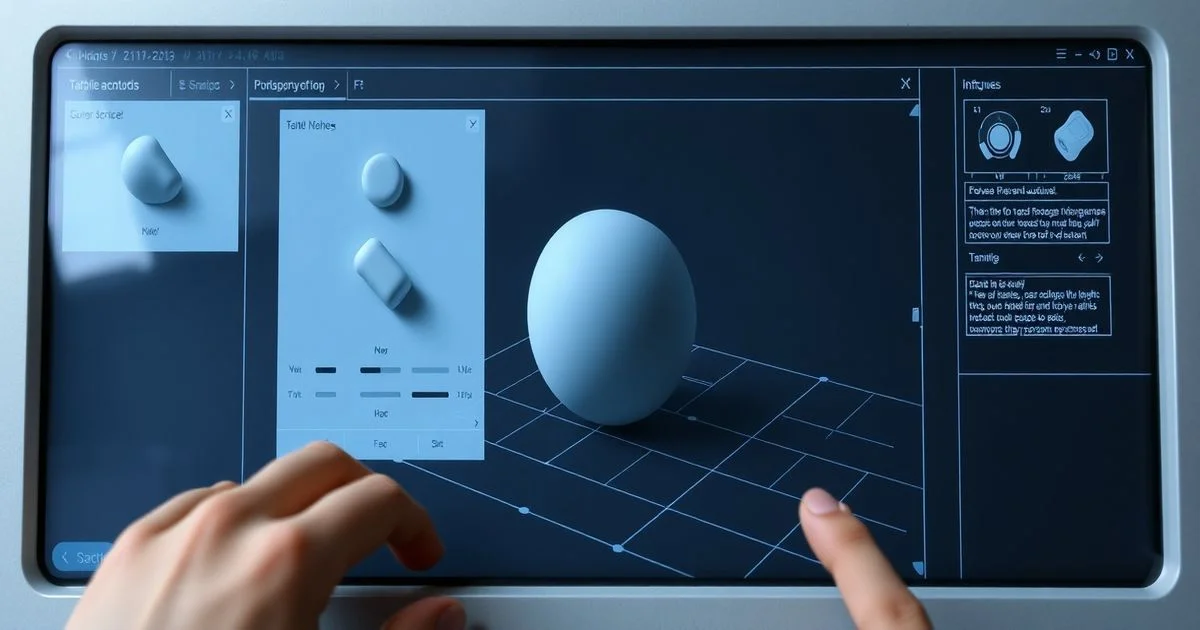

A11yShape builds on OpenSCAD, a popular script-based 3D modeling editor. Because models in OpenSCAD are defined in code rather than by dragging and rotating objects with a mouse, it is a more suitable foundation for non-visual interaction. The new program adds three synchronized panels designed to give users comprehensive context: a code editor, an AI Assistance Panel, and a Model Panel.
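To illustrate what "script-based" means here, the following minimal sketch uses Python to emit a short OpenSCAD script for a plate with a peg on top. The function name `peg_on_plate` and its parameters are hypothetical and chosen only for illustration; they are not part of A11yShape. Only the emitted primitives (`union`, `cube`, `translate`, `cylinder`) are OpenSCAD built-ins.

```python
# Illustrative sketch: the helper name and parameters are assumptions.
# The emitted text uses only standard OpenSCAD primitives.

def peg_on_plate(plate_size=(20, 20, 5), peg_radius=4, peg_height=15):
    """Return OpenSCAD source for a flat plate with a centered peg on top."""
    width, depth, thickness = plate_size
    lines = [
        "union() {",
        f"    cube([{width}, {depth}, {thickness}]);  // base plate",
        # Move the peg to the center of the plate and onto its top face.
        f"    translate([{width / 2}, {depth / 2}, {thickness}])",
        f"        cylinder(h={peg_height}, r={peg_radius});  // peg",
        "}",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(peg_on_plate())
```

Every dimension and position in the model is a number in the text, so the whole design can be read and edited with a screen reader, with no pointing device involved.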

The AI Assistance Panel is a particularly significant addition. Here, users can query an AI model, such as OpenAI's GPT-4o, to validate design decisions in real time and debug existing OpenSCAD scripts. This gives programmers an immediate, interactive layer of support for troubleshooting and refining their designs without external assistance.

A key feature of A11yShape is its ability to synchronize information across all three panels. When a user selects a piece of code or a specific component within the model, the corresponding element is highlighted in all panels, and the descriptive text is updated accordingly. This cross-referencing ensures that blind and low-vision users maintain a clear understanding of their work and how code modifications impact the 3D design at every step.
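The article does not describe how this synchronization is implemented. One plausible way to picture it is a shared selection object that notifies every panel when the selection changes. The sketch below assumes that design; the names `Component`, `SelectionHub`, `subscribe`, and `select_by_line` are hypothetical, and the three "panels" are stand-in callbacks that record what each would do.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Component:
    """One node in a hypothetical semantic hierarchy of the model."""
    name: str
    description: str
    code_lines: range  # script lines that define this part
    children: List["Component"] = field(default_factory=list)


class SelectionHub:
    """Broadcasts the selected component to every registered panel."""

    def __init__(self, root: Component) -> None:
        self._by_name: Dict[str, Component] = {}
        self._listeners: List[Callable[[Component], None]] = []
        self._index(root)

    def _index(self, node: Component) -> None:
        # Walk the hierarchy once so lookups by name are direct.
        self._by_name[node.name] = node
        for child in node.children:
            self._index(child)

    def subscribe(self, listener: Callable[[Component], None]) -> None:
        self._listeners.append(listener)

    def select_by_name(self, name: str) -> None:
        self._notify(self._by_name[name])

    def select_by_line(self, line: int) -> None:
        """Pick the most specific component whose code covers the line."""
        matches = [c for c in self._by_name.values() if line in c.code_lines]
        if matches:
            self._notify(min(matches, key=lambda c: len(c.code_lines)))

    def _notify(self, component: Component) -> None:
        for listener in self._listeners:
            listener(component)


# Example hierarchy (made-up line numbers for a five-line script).
plate = Component("plate", "A flat square base plate.", range(2, 3))
peg = Component("peg", "A cylinder rising from the center of the plate.", range(3, 5))
model = Component("model", "A plate with a peg on top.", range(1, 6), [plate, peg])

hub = SelectionHub(model)
log: List[str] = []
hub.subscribe(lambda c: log.append(
    f"editor: highlight lines {c.code_lines.start}-{c.code_lines.stop - 1}"))
hub.subscribe(lambda c: log.append(f"model: highlight {c.name}"))
hub.subscribe(lambda c: log.append(f"description: {c.description}"))

hub.select_by_line(4)  # cursor lands on a line inside the peg's code
```

Selecting by code line or by component name goes through the same notification path, so in this sketch the editor, the model view, and the description always refer to the same part.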

User Feedback Shapes the Accessible Interface

To assess A11yShape's efficacy, the research team conducted a study involving four participants with varying degrees of visual impairment and programming experience. The feedback gathered was instrumental in refining the tool. One participant, new to 3D modeling, remarked that the tool offered "a new perspective on 3D modeling, demonstrating that we can indeed create relatively simple structures." This sentiment highlights the program's potential to democratize access to 3D design.

However, the user feedback also revealed areas for future development. Participants noted that extensive text descriptions, while helpful, could still make grasping complex shapes challenging. Furthermore, the desire for tactile feedback or physical models to fully conceptualize designs was frequently expressed, suggesting that future iterations might benefit from integrating tactile displays or advanced 3D printing capabilities.

The accuracy of the AI-generated descriptions was evaluated by 15 sighted participants. On a scale of 1 to 5, the descriptions scored between 4.1 and 5 for geometric accuracy, clarity, and absence of fabricated information. This suggests the descriptions are reliable enough for everyday use, a crucial factor for building user trust.

Looking ahead, Liang He and his team are planning future enhancements based on this feedback. These may include real-time 3D printing integration and more concise AI-generated audio descriptions. Beyond its impact on professional programming communities, A11yShape is also seen as a vital tool for lowering the barrier to entry for aspiring blind and low-vision computer science learners. Stephanie Ludi, director of the DiscoverABILITY Lab at the University of North Texas, commented on the program's broader implications for the maker community, stating that "Persons who are blind and visually impaired share that interest, with A11yShape serving as a model to support accessibility in the maker community."

The research team presented A11yShape at the ASSETS conference in Denver in October, a step forward for assistive technology in the design and engineering fields.