Wan 2.2 SVI Pro with HuMo Revolutionizes AI-Generated Talking Avatars

A new AI workflow combining Wan 2.2 SVI Pro and HuMo enables long-form, non-repetitive talking animations from static images and audio, overcoming key limitations of previous tools like Infinite Talk. The system allows users to extend existing videos with synchronized speech sequences, opening new possibilities for digital storytelling and virtual presenters.

A new approach to AI-driven video synthesis is changing how digital avatars are animated with speech. According to a detailed post on Reddit’s r/StableDiffusion, a workflow combining Wan 2.2 SVI Pro and HuMo creates extended, naturalistic talking sequences without the repetitive motion artifacts that have plagued earlier tools such as Infinite Talk. Users can generate an animation from a single image and an audio file, which makes it possible to produce long-form video of AI-generated characters speaking with fluid, non-repeating facial and head movements.

The system, accessible via a model hosted on CivitAI, is designed for both standalone animation and video extension. Users can load a static image—such as a portrait or character design—and pair it with an audio track containing speech. The HuMo component then drives dynamic facial animation synchronized precisely with the voice, while Wan 2.2 SVI Pro ensures high-fidelity temporal consistency across frames. Unlike previous solutions that recycled short animation loops, this combination produces unique motion patterns throughout the entire duration of the audio, significantly enhancing realism.

One of the most compelling features of this workflow is its ability to extend pre-existing videos. For example, if a user has a 20-second video generated with SVI (Stable Video Infinity) that ends with a silent character, they can append a speaking sequence by providing an audio file that contains 20 seconds of silence (or ambient music) followed by the desired speech. The system then continues the video from the 20-second mark onward, animating the character to speak without altering the original footage. However, as the original poster cautions, the workflow cannot currently synchronize speech with footage that already exists: it can only append new content after the original video ends.
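The audio preparation described above can be done with a few lines of Python. The following is a minimal sketch, not part of the Reddit workflow itself: it assumes the speech is an uncompressed PCM WAV file, and the function name `prepend_silence` and the file names are hypothetical. It writes a copy of the speech track with a block of silence in front, so that the speech begins where the existing video ends.

```python
import wave


def prepend_silence(speech_path, out_path, silence_seconds):
    """Write a copy of a PCM WAV file with leading silence (hypothetical helper).

    Returns the number of silent audio frames that were added.
    """
    # Read the format and raw samples of the original speech track.
    with wave.open(speech_path, "rb") as src:
        params = src.getparams()
        speech_frames = src.readframes(src.getnframes())

    # One audio frame holds one sample per channel.
    n_silent_frames = int(round(silence_seconds * params.framerate))

    # 8-bit PCM WAV is unsigned (silence is 0x80); wider samples are signed (0x00).
    silent_byte = b"\x80" if params.sampwidth == 1 else b"\x00"
    silence = silent_byte * (n_silent_frames * params.nchannels * params.sampwidth)

    # Write silence followed by the untouched speech, in the same format.
    with wave.open(out_path, "wb") as dst:
        dst.setnchannels(params.nchannels)
        dst.setsampwidth(params.sampwidth)
        dst.setframerate(params.framerate)
        dst.writeframes(silence + speech_frames)

    return n_silent_frames
```

For the 20-second example, a call such as `prepend_silence("speech.wav", "padded.wav", 20)` would produce the padded track to feed into the workflow. Replacing the silence with ambient music, as the poster suggests, would require mixing in an audio editor or a dedicated audio library instead.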

This limitation means users must manually prepare audio tracks to align with their desired timing, but the trade-off is substantial: the resulting animations are far more natural and less cyclical than those produced by tools that rely on looping motion data. The technique has already drawn attention from digital content creators, educators, and developers working on virtual influencers and AI-driven customer service avatars.

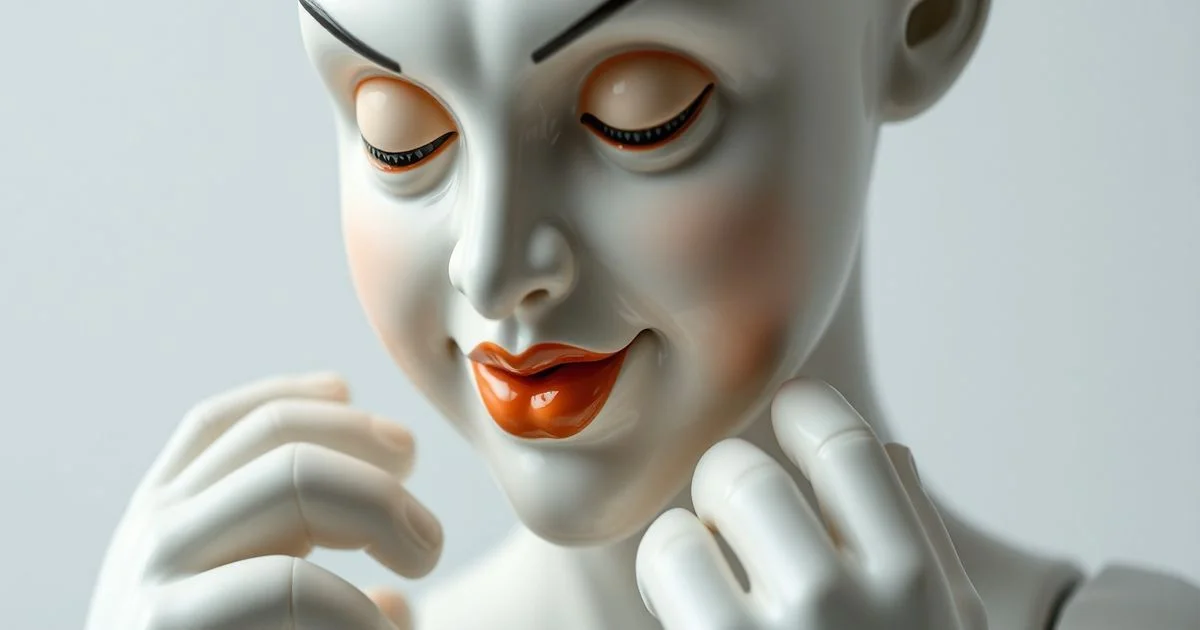

The example shared in the Reddit post, which features a static portrait animated to speak over a background of ambient music generated by ACE-Step 1.5, demonstrates the potential for hybrid media production. By combining image-to-video (i2v) models with advanced audio-driven animation systems, creators can now produce multi-layered, emotionally engaging content with minimal manual animation.

While the workflow requires technical familiarity with Stable Diffusion ecosystems and local inference environments, its open availability on CivitAI lowers the barrier to entry for hobbyists and professionals alike. Future iterations may integrate temporal synchronization capabilities, but for now, this represents one of the most sophisticated approaches to AI-powered talking head generation available outside proprietary platforms.

As AI-generated media continues to evolve, tools like Wan 2.2 SVI Pro with HuMo signal a shift from static image generation toward dynamic, speech-responsive digital personas. The implications span entertainment, education, and accessibility—offering new ways to bring digital characters to life with authentic, extended speech.