Self-Hosted Claude Swarm Survives Cloud Restarts: Breakthrough in Decentralized AI Coordination

A developer has successfully deployed a self-hosted Claude swarm on cloud infrastructure that persists across restarts, marking a significant step toward resilient, decentralized AI agent networks. The system leverages Docker Swarm and persistent storage to maintain state, challenging the notion that proprietary AI models cannot be reliably self-hosted at scale.


A notable project in decentralized artificial intelligence has emerged from the open-source community: a self-hosted Claude swarm that can survive cloud provider restarts and maintain persistent state across failures. Shared on Reddit by user /u/rushuk, it combines multi-agent AI coordination with container orchestration to build a resilient, autonomous AI network that, according to the post, does not rely on proprietary cloud APIs.

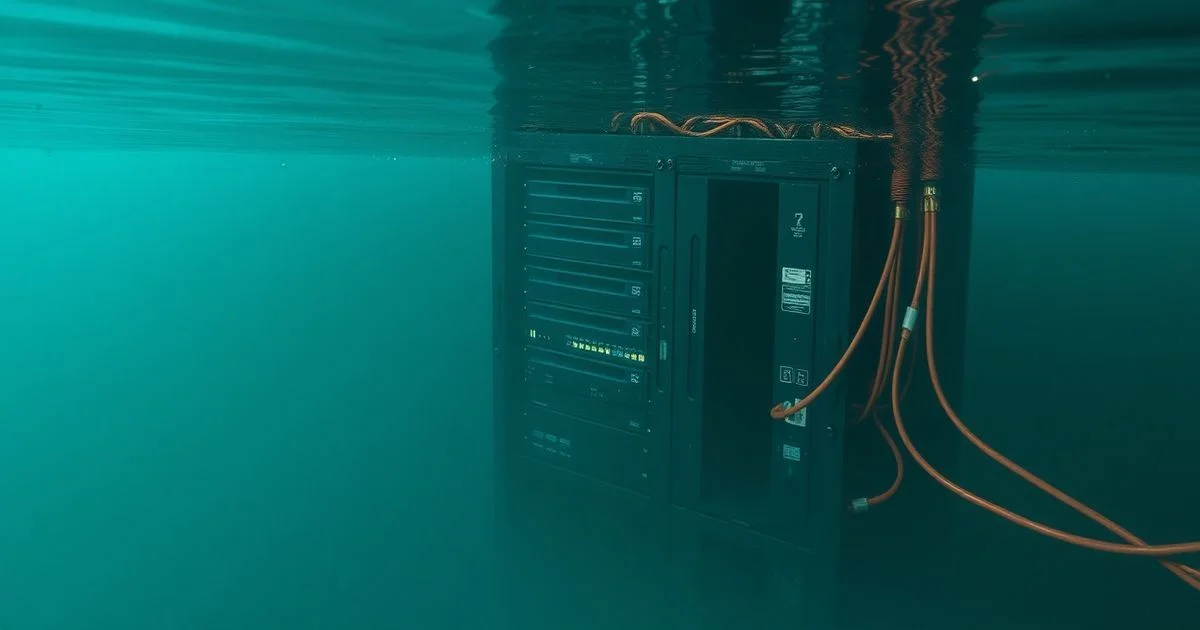

Unlike traditional AI agent systems that require constant connectivity to centralized services like Anthropic’s API, this implementation runs Claude instances locally or on private cloud nodes, using Docker Swarm to manage deployment, scaling, and recovery. The swarm consists of multiple Claude-powered agents, each assigned distinct roles—researcher, critic, synthesizer—that communicate via structured prompts and shared memory stores. Crucially, the system persists conversation history, task queues, and agent states using encrypted, volume-mounted storage, ensuring continuity even after server reboots or cloud provider outages.
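The post does not publish its message format, so the following is only a minimal Python sketch of how the three roles might hand work to one another through a shared memory store. The `Message` schema, the `SharedMemory` class, the instruction strings, and the `call_model` callable are all hypothetical; in a real deployment `call_model` would wrap whatever inference endpoint the agents use, and the in-process list would be replaced by Redis, SQLite, or a similar store.

```python
"""Hypothetical researcher -> critic -> synthesizer hand-off.

Role names come from the article; the message schema, SharedMemory class,
instructions, and call_model stub are illustrative assumptions.
"""
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Message:
    """A structured prompt passed from one role to the next (hypothetical schema)."""
    sender: str
    recipient: str
    content: str


@dataclass
class SharedMemory:
    """In-process stand-in for a shared store such as Redis or SQLite."""
    log: list[Message] = field(default_factory=list)

    def post(self, msg: Message) -> None:
        self.log.append(msg)

    def history_for(self, role: str) -> list[Message]:
        """All messages addressed to a given role."""
        return [m for m in self.log if m.recipient == role]


ROLES = ["researcher", "critic", "synthesizer"]

# Per-role instructions prepended to each prompt (illustrative wording).
INSTRUCTIONS = {
    "researcher": "Gather relevant facts for the task.",
    "critic": "Point out gaps or errors in the findings.",
    "synthesizer": "Combine findings and critique into a final answer.",
}


def run_pipeline(task: str, call_model: Callable[[str], str], memory: SharedMemory) -> str:
    """Pass the task through each role in order, recording every hand-off."""
    content = task
    for i, role in enumerate(ROLES):
        prompt = f"[{role}] {INSTRUCTIONS[role]}\n\n{content}"
        content = call_model(prompt)
        # The last role answers the user; every other role feeds the next one.
        recipient = ROLES[i + 1] if i + 1 < len(ROLES) else "user"
        memory.post(Message(sender=role, recipient=recipient, content=content))
    return content


if __name__ == "__main__":
    # Stub model: returns the prompt's first line so the flow is visible.
    def fake_model(prompt: str) -> str:
        return prompt.splitlines()[0] + " done"

    mem = SharedMemory()
    print(run_pipeline("Summarize Docker Swarm failover.", fake_model, mem))
    print([(m.sender, m.recipient) for m in mem.log])
```

Passing the model call in as a function keeps the coordination logic testable with a stub and lets each role's container change inference backends without touching the pipeline.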

According to the project’s GitHub repository, the architecture draws inspiration from distributed systems best practices. Each agent is containerized, with health checks and auto-restart policies configured via Docker Swarm’s built-in orchestration tools. When a node fails or is restarted, Swarm automatically reschedules the affected containers on healthy nodes, restoring agent roles and retrieving state from persistent storage. This eliminates the need for cloud-based session persistence, which is often costly and vendor-locked.
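Swarm performs this rescheduling itself; the toy Python model below only illustrates the behavior the paragraph describes and is not Docker's actual scheduling algorithm. The node names, the least-loaded placement rule, and the function names are assumptions. One practical caveat: recovering state this way requires the persistent volume to be reachable from whichever node the task lands on (for example, a network-backed volume), because a plain local Docker volume stays behind on the failed node.

```python
"""Toy model of Swarm-style rescheduling (not Docker's real scheduler).

Each agent role is a one-replica service. When a node fails, its tasks move
to the healthy node with the fewest tasks. Names are hypothetical.
"""


def place(services: list[str], nodes: list[str]) -> dict[str, str]:
    """Assign each service to the least-loaded node (spread-style placement)."""
    load = {n: 0 for n in nodes}
    placement: dict[str, str] = {}
    for svc in services:
        target = min(nodes, key=lambda n: (load[n], n))  # tie-break by node name
        placement[svc] = target
        load[target] += 1
    return placement


def reschedule(placement: dict[str, str], failed: str, nodes: list[str]) -> dict[str, str]:
    """Move tasks off a failed node onto the remaining healthy nodes."""
    healthy = [n for n in nodes if n != failed]
    if not healthy:
        raise RuntimeError("no healthy nodes left")
    load = {n: 0 for n in healthy}
    new: dict[str, str] = {}
    # Tasks on healthy nodes stay where they are.
    for svc, node in placement.items():
        if node != failed:
            new[svc] = node
            load[node] += 1
    # Orphaned tasks go to the least-loaded healthy node; in the real system
    # the restarted agent would then reload its state from persistent storage.
    for svc, node in placement.items():
        if node == failed:
            target = min(healthy, key=lambda n: (load[n], n))
            new[svc] = target
            load[target] += 1
    return new
```

With three single-replica roles spread over three nodes, losing `node-b` moves only the critic; the researcher and synthesizer keep running where they were.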

The original Reddit post does not say which Claude model variant is used (e.g., Claude 3 Opus or Haiku), but it states that the model is loaded through local inference engines such as vLLM or llama.cpp, enabling offline operation. That claim is hard to square with how Claude is distributed: Anthropic has not publicly released Claude's weights, and vLLM and llama.cpp serve open-weight models. If the setup does run fully offline, it would avoid rate limits and the privacy concerns of sending sensitive data to external APIs, a growing worry for enterprises and researchers handling confidential or regulated information.
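vLLM and llama.cpp's bundled server both expose an OpenAI-compatible `POST /v1/chat/completions` endpoint, so an agent container can talk to either over plain HTTP. The sketch below builds such a request with Python's standard library but does not send it. `BASE_URL`, `MODEL_NAME`, the temperature, and the helper names are placeholders; because Claude's weights are not publicly available, the model name would in practice be whatever open-weight model the local engine has loaded.

```python
"""Build (but do not send) a chat request for a local OpenAI-compatible server.

BASE_URL and MODEL_NAME are hypothetical placeholders, not project values.
"""
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # hypothetical address of the local engine
MODEL_NAME = "local-model"          # hypothetical; whatever the engine has loaded


def build_chat_request(role_prompt: str, user_text: str,
                       base_url: str = BASE_URL,
                       model: str = MODEL_NAME) -> urllib.request.Request:
    """Return a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": role_prompt},  # the agent's role instructions
            {"role": "user", "content": user_text},
        ],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_reply(body: bytes) -> str:
    """Extract the assistant text from a chat-completions response body."""
    return json.loads(body)["choices"][0]["message"]["content"]
```

To actually send the request, pass it to `urllib.request.urlopen` and feed the response body to `parse_reply`.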

Technically, the design plays to Docker Swarm's strengths on small clusters, where its simplicity and reliability can outweigh the added complexity of Kubernetes for deployments of fewer than about ten nodes. The project's creator uses Swarm's service replication, rolling updates, and secret management (to protect credentials and API keys), with Redis or SQLite handling inter-agent communication and state tracking.
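The article names Redis or SQLite for inter-agent communication and state tracking without giving details. Below is a minimal SQLite-backed task queue in Python, assuming a single `tasks` table; the schema and every function name are hypothetical. `BEGIN IMMEDIATE` takes SQLite's write lock up front so two agents cannot claim the same row, and `release_stale` shows how a crashed agent's unfinished work could be returned to the queue once its container is rescheduled.

```python
"""Hypothetical SQLite task queue shared by the agents (illustrative schema)."""
import sqlite3
from typing import Optional, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS tasks (
    id         INTEGER PRIMARY KEY AUTOINCREMENT,
    role       TEXT NOT NULL,                    -- agent role that should handle it
    payload    TEXT NOT NULL,                    -- structured prompt or job description
    status     TEXT NOT NULL DEFAULT 'pending',  -- pending | claimed | done
    claimed_by TEXT                              -- worker name while claimed
)
"""


def open_queue(path: str) -> sqlite3.Connection:
    # isolation_level=None: autocommit, so transactions below are explicit.
    conn = sqlite3.connect(path, isolation_level=None)
    conn.execute(SCHEMA)
    return conn


def enqueue(conn: sqlite3.Connection, role: str, payload: str) -> int:
    cur = conn.execute("INSERT INTO tasks (role, payload) VALUES (?, ?)", (role, payload))
    return cur.lastrowid


def claim(conn: sqlite3.Connection, role: str, worker: str) -> Optional[Tuple[int, str]]:
    """Atomically claim the oldest pending task for a role, or return None."""
    conn.execute("BEGIN IMMEDIATE")  # acquire the write lock before reading
    try:
        row = conn.execute(
            "SELECT id, payload FROM tasks WHERE role = ? AND status = 'pending' "
            "ORDER BY id LIMIT 1",
            (role,),
        ).fetchone()
        if row is not None:
            conn.execute(
                "UPDATE tasks SET status = 'claimed', claimed_by = ? WHERE id = ?",
                (worker, row[0]),
            )
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    return row


def complete(conn: sqlite3.Connection, task_id: int) -> None:
    conn.execute("UPDATE tasks SET status = 'done' WHERE id = ?", (task_id,))


def release_stale(conn: sqlite3.Connection, worker: str) -> int:
    """Return the named (crashed) worker's claimed tasks to 'pending'."""
    cur = conn.execute(
        "UPDATE tasks SET status = 'pending', claimed_by = NULL "
        "WHERE status = 'claimed' AND claimed_by = ?",
        (worker,),
    )
    return cur.rowcount
```

SQLite's file locking is unreliable on many network filesystems, so in a multi-node swarm a queue like this would more plausibly live in Redis or behind a single service that owns the database file.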

Notably, the implementation does not require the model itself to hold state: the LLM can remain a stateless endpoint while each agent runs as a long-running process with checkpointed memory. This gives the swarm a degree of continuity more often associated with enterprise-grade AI platforms. The system has reportedly been tested on AWS, DigitalOcean, and Hetzner cloud instances, consistently recovering from simulated node failures within 30 seconds.
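A minimal Python sketch of the checkpointing pattern, assuming each agent's working memory fits in one JSON document on the mounted volume (the file path and the `Agent` class are hypothetical, not taken from the project). Writing to a temporary file and swapping it into place with `os.replace` means a crash mid-write leaves the previous checkpoint intact rather than a half-written file.

```python
"""Hypothetical checkpoint/restore for one agent's working memory."""
import copy
import json
import os
import tempfile
from typing import Any


def save_checkpoint(path: str, state: dict[str, Any]) -> None:
    """Write state to a temp file in the same directory, then swap it in."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # push bytes to disk before the swap
        os.replace(tmp_path, path)  # atomic rename on POSIX, same filesystem
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path: str, default: dict[str, Any]) -> dict[str, Any]:
    """Return the last saved state, or a deep copy of default on first start."""
    try:
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    except FileNotFoundError:
        return copy.deepcopy(default)


class Agent:
    """Minimal stateful agent that checkpoints after every step (hypothetical)."""

    def __init__(self, role: str, checkpoint_path: str) -> None:
        self.role = role
        self.path = checkpoint_path
        self.state = load_checkpoint(checkpoint_path, {"role": role, "step": 0, "history": []})

    def step(self, observation: str) -> None:
        self.state["history"].append(observation)
        self.state["step"] += 1
        save_checkpoint(self.path, self.state)
```

Constructing a second `Agent` with the same path stands in for a container restart: it resumes at the saved step instead of starting over.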

While still in early stages, the project has sparked interest among AI ethicists and infrastructure engineers. Critics caution that running closed-source models locally may violate Anthropic’s terms of service, though the developer claims to use only publicly available inference methods and no reverse-engineered weights. The broader implication, however, is clear: with sufficient engineering, even proprietary models can be integrated into open, resilient, and self-sovereign systems.

This innovation may pave the way for decentralized AI collectives—autonomous swarms that operate independently of corporate platforms—offering a viable alternative to cloud-dependent AI assistants. As open-source tooling matures, such architectures could become standard for privacy-sensitive applications in healthcare, legal tech, and government sectors.