Runway Raises $315M for AI World Models, Challenging Video Search Paradigms

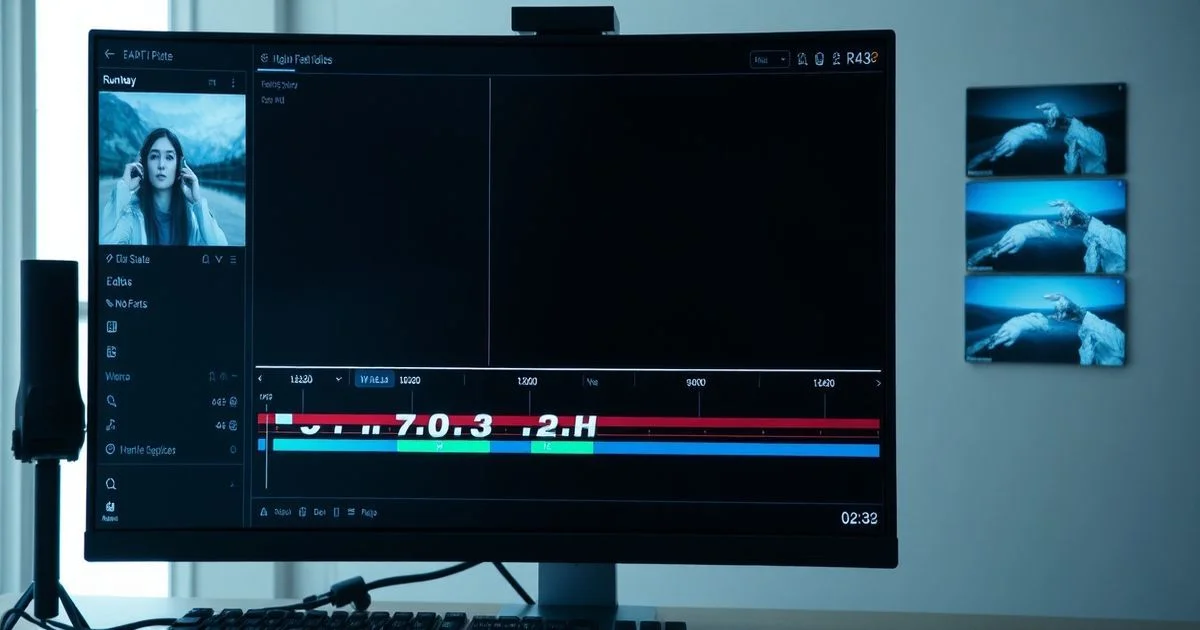

AI video generation pioneer Runway has secured $315 million in new funding at a $5.3 billion valuation. The capital will fuel its ambitious expansion beyond video creation into developing comprehensive 'world models,' a move that could fundamentally reshape how video content is discovered, analyzed, and understood online.

Byline: Investigative Tech Journalist

New York, NY – In a landmark funding round that underscores the escalating arms race in generative artificial intelligence, Runway, the startup renowned for its AI video creation tools, has raised $315 million at a staggering $5.3 billion valuation. While the company first gained prominence for turning text prompts into short video clips, its stated ambition now is far grander: to build expansive "world models." This technological pivot, industry analysts suggest, could redefine the infrastructure of digital video, from its creation to its discovery and contextual understanding.

Beyond Generation: The Quest for Contextual Understanding

The term "world model" in AI refers to systems that develop an internal, coherent understanding of how environments and objects interact over time, a foundational step toward more generalized intelligence. For Runway, this means evolving from a tool that generates video frames to one that comprehends the physics, depth, and narrative logic within visual sequences. This ambition aligns with current research in the field. For instance, a recent project on GitHub, "Video Depth Anything," focuses on consistent depth estimation across "super-long videos," a critical challenge for keeping scenes stable and realistic, whether an AI is generating them or analyzing them. According to the project's documentation, consistent 3D understanding is a cornerstone for advanced video applications, a principle Runway's new direction appears to embrace.

Implications for Search and Discovery

The development of sophisticated world models has direct and profound implications for how users find and interact with video content online. Currently, platforms like Google Search rely on metadata, transcripts, and user engagement signals to surface video results. As noted in Google's own support documentation, users "can find video results for most searches," but this process is largely dependent on surface-level tagging and keyword matching.

An AI with a deep, contextual understanding of video content could revolutionize this process. Instead of searching for a video about "how to change a tire" based on its title and description, a future search engine integrated with a world model could identify specific scenes, tools, and actions within the video itself. It could understand the procedural steps, recognize the parts involved, and even answer nuanced questions about the process by analyzing the visual data directly. This moves search from a metadata-dependent system to one based on true visual comprehension.
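To make the contrast concrete, here is a minimal sketch in Python of the two search styles described above. It is an illustration under stated assumptions, not a description of how Google, Runway, or any real system works: the `Scene` and `Video` types, the per-scene `labels`, and both search functions are hypothetical, and the labels are simply typed in by hand where a world model would presumably infer them from the footage.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    start_s: float
    end_s: float
    labels: set[str]  # hypothetical annotations a world model might emit


@dataclass
class Video:
    title: str
    description: str
    scenes: list[Scene] = field(default_factory=list)


def metadata_search(videos, query):
    """Keyword matching against title and description only."""
    terms = query.lower().split()
    return [
        v for v in videos
        if all(t in f"{v.title} {v.description}".lower() for t in terms)
    ]


def scene_search(videos, required_labels):
    """Return (title, start, end) for every scene containing all labels."""
    return [
        (v.title, s.start_s, s.end_s)
        for v in videos
        for s in v.scenes
        if required_labels <= s.labels
    ]


# Hand-written sample data (assumed, for illustration only).
videos = [
    Video(
        "How to change a tire",
        "Step-by-step roadside guide",
        [Scene(0, 40, {"car", "jack"}),
         Scene(40, 95, {"lug wrench", "wheel"})],
    ),
    Video(
        "Weekend road trip vlog",
        "We got a flat on day two",
        [Scene(300, 360, {"car", "jack"}),
         Scene(360, 420, {"lug wrench", "wheel"})],
    ),
]

by_metadata = metadata_search(videos, "change tire")
by_scene = scene_search(videos, {"lug wrench", "wheel"})
```

In this toy data, the keyword search surfaces only the tutorial, because the vlog's title and description never mention changing a tire. The scene-level search finds the relevant segment in both videos and reports where it starts and ends, which is the kind of in-video answer the paragraph above anticipates.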

The Technical Hurdles and Infrastructure Demands

Building such systems is not merely a software challenge; it is an immense computational undertaking. The $315 million war chest will likely be deployed to secure vast amounts of processing power and specialized talent. Runway's move also highlights a growing need for more robust video infrastructure. As AI-generated and AI-analyzed video becomes more complex and prevalent, the underlying platforms must evolve. Issues that currently plague video platforms, such as playback errors, format incompatibilities, and streaming failures (all common troubleshooting topics in YouTube's help guides), would be magnified under the load of AI-intensive processing and generation. Delivering AI-native video reliably, and letting users interact with it, will be a challenge parallel to creating the content itself.

A New Competitive Landscape

Runway's funding round signals its intention to compete at the highest tier of AI research, potentially going head-to-head with giants like OpenAI, Google DeepMind, and Meta in the race to build multimodal foundation models. By starting with a strong foothold in the creative video market, Runway has a unique dataset and user base from which to learn. However, the pivot to world models carries risk: it shifts Runway from a focused product company toward a foundational AI research lab. The capital provides a runway for this ambitious transition, but the technical and market obstacles are formidable.

The Future of Video as Data

Ultimately, Runway's bet is that the future of video is not just as entertainment or communication, but as a rich, structured data format that machines can parse, reason about, and generate autonomously. This vision sees every frame, scene, and sequence as a point of analysis. From automating complex video editing and visual effects to powering next-generation search engines and interactive media, the applications are vast. The success of this vision hinges on solving the very problems that research like "Video Depth Anything" addresses: achieving consistency, accuracy, and scalability in machine perception.

As the digital world becomes increasingly visual, the company that masters the underlying model of that world may well command the next era of human-computer interaction. Runway's massive funding round is a bold wager that it can be that company.