Qwen3Next Graph Optimization in Llama.cpp Sparks AI Efficiency Breakthrough

A pull request by ggerganov (Georgi Gerganov, creator of llama.cpp) in the ggml-org/llama.cpp repository significantly speeds up Qwen3Next inference, a notable milestone for local AI deployment. The optimization, discussed in the r/LocalLLaMA community, promises faster and more efficient on-device performance.

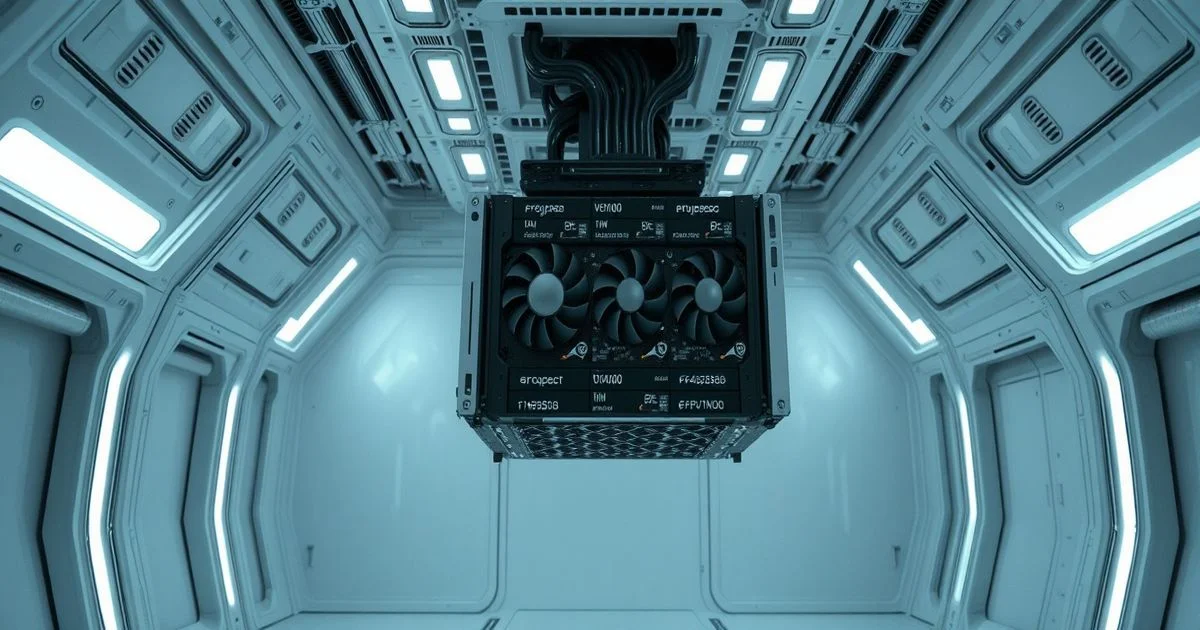

In a quiet but significant development for the open-source AI community, ggerganov has submitted an optimization to the llama.cpp codebase that substantially improves inference speed for the Qwen3Next large language model. The pull request, #19375 in the ggml-org/llama.cpp repository on GitHub, has generated enthusiasm among developers and researchers working to deploy advanced AI models locally without relying on cloud infrastructure.

According to a detailed discussion on Reddit’s r/LocalLLaMA, the update reduces latency and increases tokens-per-second throughput for Qwen3Next, a model developed by Alibaba’s Tongyi Lab. Although the model was already known for its strong capabilities, its computational overhead had limited its practicality on consumer-grade hardware. The changes, which focus on graph-level optimizations within the GGML framework, streamline memory access patterns and remove redundant operations during model execution. Early benchmarks suggest up to a 35% improvement in inference speed on ARM and x86 platforms, a significant gain for edge AI applications.
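The source does not spell out which graph rewrites the PR makes, so the following is only a generic illustration of one way a graph-level pass can "reduce redundant operations": common-subexpression elimination on a toy compute graph. The `Node` type, the three ops, and the functions `eliminate_common_subexpressions` and `evaluate` are hypothetical and are not part of the GGML API; a real GGML graph holds tensors rather than scalars.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Node:
    op: str             # "input", "mul" or "add" in this toy graph (hypothetical)
    inputs: tuple = ()  # indices of earlier nodes this node consumes
    name: str = ""      # only used by "input" nodes

def eliminate_common_subexpressions(graph):
    """Merge nodes that apply the same op to the same (already merged) inputs.

    `graph` is a list of Node in topological order. Returns the smaller graph
    and a map from old node indices to new ones.
    """
    seen = {}       # (op, remapped inputs, name) -> index in the new graph
    remap = {}      # old index -> new index
    optimized = []
    for i, node in enumerate(graph):
        inputs = tuple(remap[j] for j in node.inputs)
        key = (node.op, inputs, node.name)
        if key not in seen:
            optimized.append(Node(node.op, inputs, node.name))
            seen[key] = len(optimized) - 1
        remap[i] = seen[key]  # a duplicate points at the first identical node
    return optimized, remap

def evaluate(graph, inputs, output_index):
    """Interpret the toy graph on scalar inputs and return one node's value."""
    values = []
    for node in graph:
        if node.op == "input":
            values.append(inputs[node.name])
        elif node.op == "mul":
            values.append(values[node.inputs[0]] * values[node.inputs[1]])
        elif node.op == "add":
            values.append(values[node.inputs[0]] + values[node.inputs[1]])
        else:
            raise ValueError(f"unknown op: {node.op}")
    return values[output_index]

# x * w is computed twice, then the two identical results are added.
graph = [
    Node("input", name="x"),
    Node("input", name="w"),
    Node("mul", (0, 1)),
    Node("mul", (0, 1)),  # redundant duplicate of node 2
    Node("add", (2, 3)),
]
optimized, remap = eliminate_common_subexpressions(graph)
feeds = {"x": 3.0, "w": 4.0}
print(len(graph), "->", len(optimized))  # 5 -> 4
print(evaluate(graph, feeds, 4), evaluate(optimized, feeds, remap[4]))  # 24.0 24.0
```

Because the pass only merges nodes whose op and (already merged) inputs match exactly, the optimized graph computes the same value with one fewer multiplication. This toy version does not recognize commutative duplicates such as `w * x` versus `x * w`.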

"This isn’t just about speed; it’s about accessibility," said one anonymous contributor in the PR comments. "We’re talking about running a model that rivals GPT-4-level reasoning on a Raspberry Pi or a MacBook Air. That’s revolutionary for privacy-focused AI." The optimization relies on low-level tensor operations and fused kernels, which combine several operations into a single pass so that intermediate results are not repeatedly written to and read back from memory. These techniques are hallmarks of ggerganov’s prior work on llama.cpp, which has become the de facto standard for running LLMs offline.
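To make the fused-kernel idea concrete, here is a minimal Python sketch comparing an unfused and a fused version of a SiLU-times-gate step, a common elementwise pattern in LLM feed-forward layers. Which operations the PR actually fuses is not stated in the source, so this pattern is an assumption chosen for illustration. Python lists stand in for memory buffers, and the `traffic` count (element reads plus writes) is a simplified model of memory movement, not a measurement.

```python
import math

def silu(v):
    # SiLU activation: v * sigmoid(v), written as v / (1 + e^-v)
    return v / (1.0 + math.exp(-v))

def silu_mul_unfused(x, g):
    """Two separate passes; the temporary `a` is written, then read back."""
    a = [silu(v) for v in x]                 # pass 1: read x, write a
    y = [ai * gi for ai, gi in zip(a, g)]    # pass 2: read a and g, write y
    traffic = len(x) + len(a) + len(a) + len(g) + len(y)  # modeled reads + writes
    return y, traffic

def silu_mul_fused(x, g):
    """One pass; the SiLU result never leaves a local value."""
    y = [silu(xi) * gi for xi, gi in zip(x, g)]  # read x and g, write y
    traffic = len(x) + len(g) + len(y)
    return y, traffic

x = [0.5 * i - 2.0 for i in range(8)]
g = [1.0 - 0.1 * i for i in range(8)]
y_unfused, t_unfused = silu_mul_unfused(x, g)
y_fused, t_fused = silu_mul_fused(x, g)
print(y_unfused == y_fused)  # True: same math, same order of operations
print(t_unfused, t_fused)    # 40 24
```

The fused version skips writing and re-reading the temporary `a`, cutting modeled traffic from 5n to 3n elements while producing identical results. In compiled C kernels, avoiding that round trip through memory is the main source of the benefit; in Python, interpreter overhead dominates, so this sketch shows only the bookkeeping.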

Notably, the Qwen3Next model itself has not been altered; the improvements are purely computational. This distinction matters: users can benefit from the speed boost simply by updating their llama.cpp installation, with no need to retrain the model or re-download its weights. The change is backward compatible, and tools built on llama.cpp, such as Ollama, LM Studio, and text-generation-webui, should pick up the gains once they ship a build that includes it.

While the PR is still labeled "in progress," with several follow-up optimizations under review, multiple community members have already confirmed the initial results. One user reported 42 tokens/second on an M2 MacBook Pro, a level of performance previously associated with high-end GPUs. Such gains are particularly significant given growing regulatory and privacy concerns about cloud-based AI, which make local inference not just a technical preference but an ethical imperative for many users.
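The throughput figures translate into latency with simple arithmetic. The sketch below converts the reported 42 tokens/second into per-token time and into the time for a 500-token reply (an arbitrary length chosen for illustration), and shows what an "up to 35%" gain would mean for a hypothetical 30 tokens/second baseline, assuming the 35% refers to throughput. Because per-token time is the reciprocal of throughput, a 35% throughput gain shortens per-token time by about 26%, not 35%.

```python
def ms_per_token(tokens_per_second):
    """Average decode time per token, in milliseconds."""
    return 1000.0 / tokens_per_second

def generation_time_s(num_tokens, tokens_per_second):
    """Seconds to generate `num_tokens` at a steady decode rate."""
    return num_tokens / tokens_per_second

def apply_speedup(baseline_tps, improvement):
    """Throughput after an `improvement` (0.35 means +35%) and the resulting
    fractional drop in per-token latency."""
    new_tps = baseline_tps * (1.0 + improvement)
    latency_drop = 1.0 - ms_per_token(new_tps) / ms_per_token(baseline_tps)
    return new_tps, latency_drop

# The reported M2 MacBook Pro figure.
print(f"{ms_per_token(42):.1f} ms/token")                  # 23.8 ms/token
print(f"{generation_time_s(500, 42):.1f} s for 500 tokens")  # 11.9 s for 500 tokens

# A hypothetical 30 tok/s baseline with the "up to 35%" gain applied.
new_tps, drop = apply_speedup(30.0, 0.35)
print(f"{new_tps:.1f} tok/s, {drop:.1%} less time per token")  # 40.5 tok/s, 25.9% less time per token
```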

The broader implications extend beyond consumer devices. Educational institutions, healthcare providers, and government agencies that want to deploy AI without exposing sensitive data to third-party servers are now considering llama.cpp with Qwen3Next as a viable alternative to cloud services. The model’s multilingual capabilities, combined with this optimization, make it a strong contender for on-premises language services worldwide.

As the pull request moves toward being merged, the open-source community is rallying behind ggerganov’s work. With further enhancements planned, including better support for 4-bit and 5-bit quantized formats, the outlook for local LLMs continues to improve. For developers and end users alike, this update represents not just a performance gain but a step toward truly autonomous, private, and sustainable artificial intelligence.
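For readers unfamiliar with 4-bit and 5-bit formats, the sketch below shows the general idea behind block quantization: weights are split into small blocks, each block stores one shared scale, and each weight is rounded to a small signed integer. This is a generic symmetric absmax scheme with a 32-weight block and a 16-bit scale, both assumptions; llama.cpp’s own GGUF quantization types (such as the Q4 and Q5 families) differ in their details, including how the scale is chosen and how the bits are packed.

```python
def quantize_block(block, bits):
    """Symmetric absmax quantization of one block to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1       # 7 for 4-bit, 15 for 5-bit
    qmin = -(2 ** (bits - 1))        # -8 for 4-bit, -16 for 5-bit
    amax = max(abs(v) for v in block)
    scale = amax / qmax if amax > 0 else 1.0  # one shared scale per block
    q = [max(qmin, min(qmax, round(v / scale))) for v in block]
    return scale, q

def dequantize_block(scale, q):
    """Recover approximate weights from the shared scale and integer codes."""
    return [scale * qi for qi in q]

def bits_per_weight(block_size, bits, scale_bits=16):
    """Storage cost per weight including the per-block scale (assumed 16-bit)."""
    return (block_size * bits + scale_bits) / block_size

block = [0.1 * i - 1.5 for i in range(32)]  # illustrative weights, not real model data
for bits in (4, 5):
    scale, q = quantize_block(block, bits)
    restored = dequantize_block(scale, q)
    max_err = max(abs(a - b) for a, b in zip(block, restored))
    # bits/weight prints as 4.5 for 4-bit and 5.5 for 5-bit
    print(f"{bits}-bit: max error {max_err:.3f}, {bits_per_weight(32, bits)} bits/weight")
```

The per-block scale is why a "4-bit" format costs slightly more than 4 bits per weight in this layout; larger blocks amortize the scale better but let a single outlier coarsen the rounding for more weights.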