PaddleOCR-VL Integrated into llama.cpp, Boosting Open-Source Multilingual OCR Capabilities

The PaddleOCR-VL model, a lightweight 0.9B-parameter multilingual OCR system, has been ported to llama.cpp, enabling on-device text extraction across dozens of languages. Developers and researchers are praising its accuracy and low hardware demands, and many see the port as a milestone for decentralized AI applications.

In a significant development for open-source artificial intelligence, the PaddleOCR-VL model has been integrated into the llama.cpp framework, enabling efficient, on-device optical character recognition (OCR) across a wide array of languages. The integration, which shipped in a recent llama.cpp release, allows users to run a state-of-the-art vision-language OCR model without relying on cloud services, enhancing privacy and accessibility for developers, researchers, and end-users alike.

PaddleOCR-VL, developed by Baidu’s PaddlePaddle team, is a multimodal model trained to recognize and extract text from images using both visual and linguistic context. Its integration into llama.cpp, previously known primarily for running large language models (LLMs) locally, extends the framework’s capabilities beyond text generation into multimodal perception. With a compact size of just 0.9 billion parameters, the model is designed to operate efficiently on consumer-grade hardware, including laptops and single-board computers like the Raspberry Pi 5, without requiring high-end GPUs.

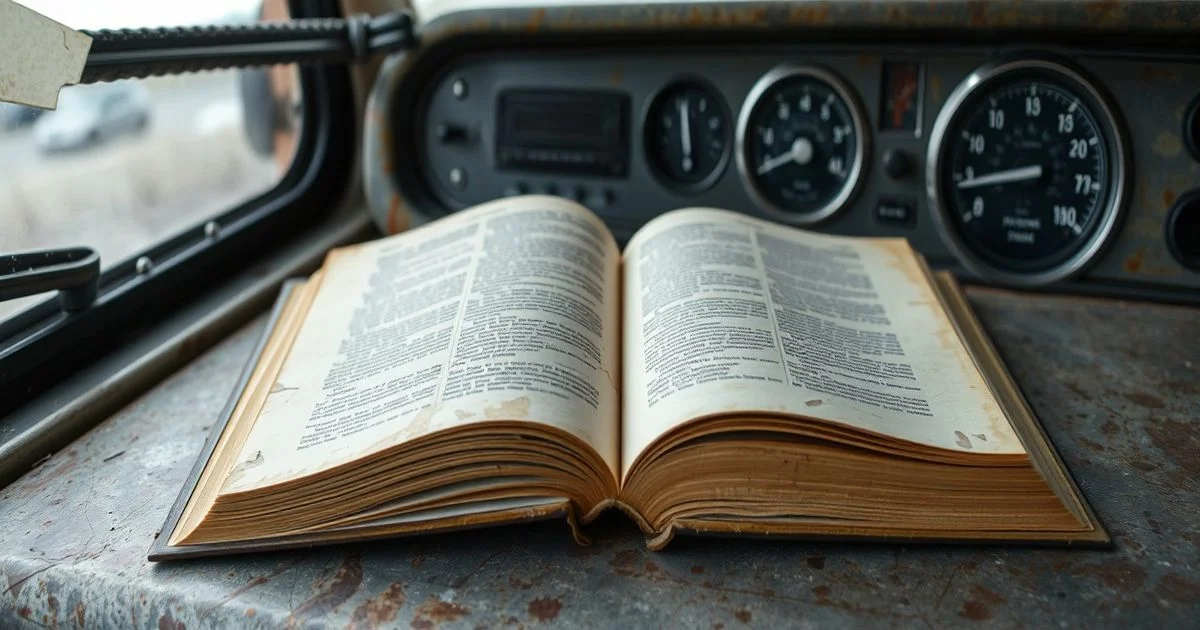

According to a post on the r/LocalLLaMA subreddit by user /u/PerfectLaw5776, PaddleOCR-VL is the most accurate open-source multilingual OCR system they have tested to date. The user reports strong performance in recognizing Latin, Cyrillic, Arabic, Chinese, Japanese, and Korean scripts, even in low-resolution or skewed images. The user also shared a collection of pre-converted GGUF model files on Hugging Face, making deployment significantly easier for non-experts. GGUF, the model file format used by llama.cpp, can store weights in quantized form, which reduces memory usage while largely preserving model accuracy, a critical factor for edge computing applications.
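To put the memory savings in concrete terms, the short sketch below estimates the weight storage for a 0.9-billion-parameter model under three common GGUF tensor types. The bits-per-weight figures follow from the block layouts of those types. Which quantizations the shared Hugging Face files actually use is not stated in the post, so the choice of types here is an assumption, and real files will differ somewhat because of metadata, the vision projector, and tensors kept at higher precision.

```python
# Rough weight-storage estimate for a 0.9B-parameter model.
# Bits per weight (bpw) for three common GGUF tensor types:
#   F16  : 16 bits per weight
#   Q8_0 : 32 int8 weights + one fp16 scale = 34 bytes per 32 weights = 8.5 bpw
#   Q4_0 : 32 4-bit weights + one fp16 scale = 18 bytes per 32 weights = 4.5 bpw
PARAMS = 0.9e9  # parameter count quoted in the article

BITS_PER_WEIGHT = {"F16": 16.0, "Q8_0": 8.5, "Q4_0": 4.5}


def weight_bytes(params: float, bits_per_weight: float) -> float:
    """Bytes needed to store `params` weights at the given bits per weight."""
    return params * bits_per_weight / 8.0


def estimate_sizes(params: float = PARAMS) -> dict:
    """Return estimated weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return {
        name: weight_bytes(params, bpw) / 1e9
        for name, bpw in BITS_PER_WEIGHT.items()
    }


if __name__ == "__main__":
    for name, gb in estimate_sizes().items():
        print(f"{name:5s} ~{gb:.2f} GB")
```

Under these assumptions the weights shrink from roughly 1.8 GB at 16-bit precision to about half a gigabyte at 4-bit, which is the kind of reduction that makes a single-board computer a plausible target.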

This development holds particular importance for industries reliant on document digitization, such as legal, financial, and archival services. Law firms, for instance, can now process scanned contracts or handwritten notes locally, avoiding the privacy risks associated with uploading sensitive documents to third-party cloud OCR services. Similarly, researchers working in multilingual environments—such as those analyzing historical manuscripts or public signage in diverse regions—can now perform automated text extraction without internet connectivity.

Community feedback has been overwhelmingly positive. Early adopters report that the model outperforms other open-source alternatives like Tesseract OCR in multilingual contexts, particularly with mixed-script images. Unlike Tesseract, which relies on separate per-language trained data files and often needs image preprocessing to perform well, PaddleOCR-VL handles context-aware recognition natively, leveraging its vision-language architecture to infer text from layout and semantic cues. One user noted that the model correctly interpreted a mixed English-Chinese street sign where other tools failed entirely.

While the integration is still in its early stages, the open nature of both PaddleOCR-VL and llama.cpp encourages rapid iteration. Developers are already exploring ways to combine this OCR capability with LLMs in the same framework, enabling end-to-end document understanding: extract text, then analyze or summarize it—all locally. Such a pipeline could revolutionize mobile applications for real-time translation, accessibility tools for the visually impaired, and automated data entry systems.
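The extract-then-summarize pipeline described above could look roughly like the following sketch. It assumes two local llama.cpp servers exposing the OpenAI-compatible chat completions endpoint: one loaded with the OCR model and one with a general-purpose LLM. The ports, the `"OCR:"` prompt, the summarization instruction, and all function names are illustrative assumptions, not details taken from the Reddit post or either project.

```python
import base64
import json
import urllib.request

# Hypothetical local endpoints; adjust to wherever your servers are listening.
OCR_URL = "http://127.0.0.1:8080/v1/chat/completions"
LLM_URL = "http://127.0.0.1:8081/v1/chat/completions"


def build_ocr_request(image_bytes: bytes, prompt: str = "OCR:",
                      mime: str = "image/png") -> dict:
    """Build a chat request carrying the image as a base64 data URI."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url",
                 "image_url": {"url": f"data:{mime};base64,{b64}"}},
            ],
        }],
        "temperature": 0,
    }


def build_summary_request(extracted_text: str) -> dict:
    """Build a text-only chat request asking for a short summary."""
    return {
        "messages": [
            {"role": "system",
             "content": "Summarize the document in three sentences."},
            {"role": "user", "content": extracted_text},
        ],
        "temperature": 0.2,
    }


def post_chat(payload: dict, url: str) -> str:
    """POST a chat payload and return the first choice's message text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


def ocr_then_summarize(image_path: str) -> str:
    """Step 1: extract text from the image. Step 2: summarize it. All local."""
    with open(image_path, "rb") as f:
        text = post_chat(build_ocr_request(f.read()), OCR_URL)
    return post_chat(build_summary_request(text), LLM_URL)
```

Splitting the work across two models reflects the division of labor the article describes: a small OCR model handles perception, while a separate language model handles analysis. Nothing in this sketch leaves the local machine.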

Looking ahead, the success of this integration may catalyze further collaborations between the vision and language AI communities. As more models are ported to GGUF and llama.cpp, the ecosystem is evolving into a unified platform for on-device multimodal AI. For now, the combination of PaddleOCR-VL and llama.cpp represents a major leap forward in democratizing high-performance OCR technology, proving that powerful AI need not be centralized—or expensive—to be effective.