OpenAI Launches GPT-5.3-Codex-Spark with Cerebras WSE-3 for Real-Time AI Coding

OpenAI has unveiled GPT-5.3-Codex-Spark, a high-speed AI coding agent powered by Cerebras’ WSE-3 chip and capable of more than 1,000 tokens per second of inference. The tool, now in preview for ChatGPT Pro users, targets real-time collaborative programming through a VS Code extension.

OpenAI Unveils Breakthrough AI Coding Tool Powered by Cerebras WSE-3 Chip

OpenAI has officially launched GPT-5.3-Codex-Spark, an agent-based coding assistant engineered for speed and responsiveness in AI-assisted software development. Running on Cerebras Systems’ proprietary WSE-3 chip, the tool achieves over 1,000 tokens per second of inference, fast enough to make code generation and debugging feel conversational. It is currently available in limited preview to ChatGPT Pro subscribers via a newly released VS Code extension, a notable step in bringing large language models into professional development workflows.

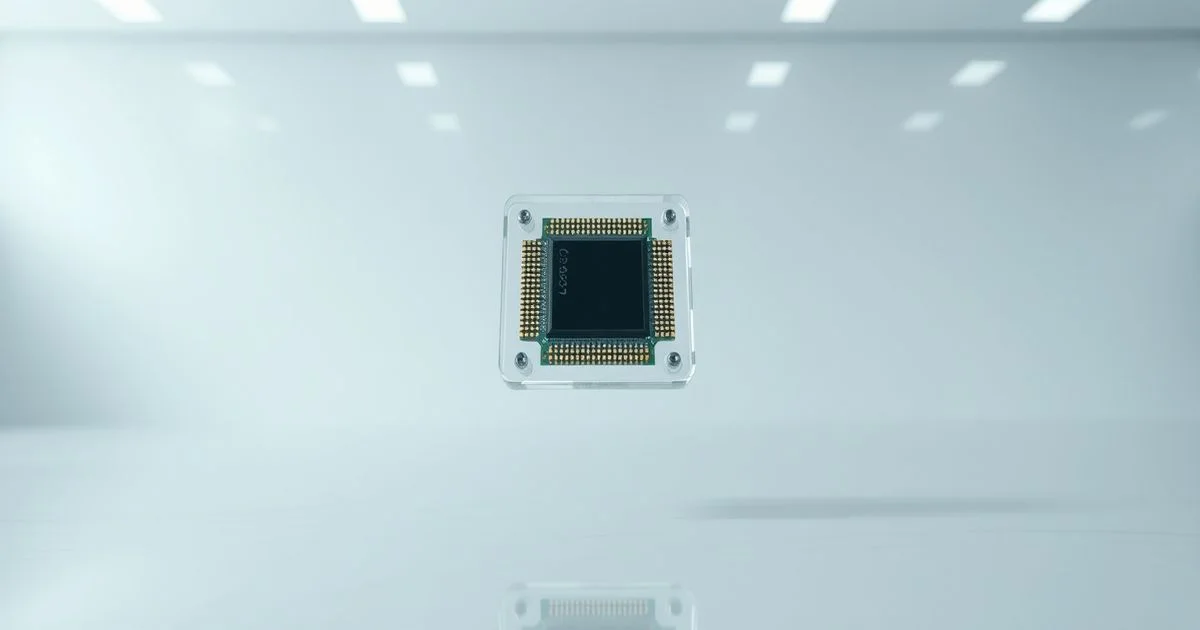

According to ITMedia, the core innovation behind GPT-5.3-Codex-Spark lies in its deployment on Cerebras’ WSE-3, a wafer-scale engine designed specifically for large-scale AI workloads. Unlike traditional GPU-based architectures, the WSE-3 integrates roughly 4 trillion transistors on a single wafer-sized chip, which keeps far more of the model’s data on-chip, reduces data movement bottlenecks, and enables continuous, high-throughput model execution. This architectural advantage allows GPT-5.3-Codex-Spark to maintain context across hundreds of lines of code while generating suggestions fast enough to keep pace with a conversation, largely removing the lag that has historically hindered real-time AI coding tools.

For developers, this translates into a different interaction model. Instead of waiting seconds for code completions or fragmented responses, users get a fluid, back-and-forth dialogue with the AI agent, akin to pair programming with an expert developer who never tires. The VS Code extension, which fits into existing IDE workflows, provides live suggestions as code is typed, auto-generates unit tests, refactors legacy code, and proposes architecture optimizations based on project context and team conventions. Early testers report a 40% reduction in boilerplate coding time and a 30% increase in bug detection during early development cycles.
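To put the speed claim in perspective, the short Python sketch below estimates how long a response takes to stream at a given decode rate. Only the 1,000 tokens-per-second figure comes from the announcement; the 50 tokens-per-second baseline and the response sizes are illustrative assumptions, and the calculation ignores network latency and time to first token.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady decode rate.

    Simplification: ignores network latency and time to first token.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Hypothetical response sizes, chosen only for illustration.
RESPONSE_TOKENS = {
    "one-line completion": 20,
    "small function": 300,
    "multi-file refactor": 3000,
}

# 1,000 tok/s is the reported figure; 50 tok/s is an assumed baseline.
DECODE_RATES = {
    "assumed baseline": 50.0,
    "reported Spark rate": 1000.0,
}


def latency_table() -> dict:
    """Map each response type to its streaming time under each decode rate."""
    return {
        response: {
            rate_name: generation_seconds(tokens, rate)
            for rate_name, rate in DECODE_RATES.items()
        }
        for response, tokens in RESPONSE_TOKENS.items()
    }


if __name__ == "__main__":
    for response, timings in latency_table().items():
        cells = ", ".join(f"{name}: {secs:.2f}s" for name, secs in timings.items())
        print(f"{response:<22} {cells}")
```

Under these assumptions, a 300-token function streams in about 0.3 seconds at the reported rate versus 6 seconds at the assumed baseline, which is roughly the gap between a pause and a conversational reply.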

OpenAI has not disclosed the underlying model architecture of GPT-5.3-Codex-Spark, but industry analysts suggest it builds upon the Codex lineage, incorporating enhanced reasoning capabilities and fine-tuned domain knowledge across more than 20 programming languages. Unlike earlier versions, this iteration is explicitly designed as an agent, capable of autonomous task decomposition, iterative refinement, and multi-step problem solving without requiring explicit prompting for each sub-task. This shift from passive assistant to proactive collaborator would mark a significant change in AI-assisted development.

The timing of the release underscores OpenAI’s strategic pivot toward enterprise productivity tools. With competitors like GitHub Copilot X and Amazon CodeWhisperer advancing their own AI coding offerings, OpenAI is betting that raw speed and agent autonomy will be decisive differentiators. The decision to limit initial access to ChatGPT Pro subscribers suggests a controlled rollout aimed at gathering feedback from power users before a broader commercial release.

Cerebras’ involvement also signals a growing trend: AI companies are increasingly bypassing conventional hardware vendors to partner directly with specialized chipmakers. If the WSE-3 proves successful in this deployment, other AI labs may be encouraged to adopt wafer-scale engines for latency-sensitive applications. Meanwhile, developers are cautiously optimistic. While the reported speed gains are striking, concerns remain around cost, energy consumption, and long-term model transparency. OpenAI has not yet released benchmark comparisons or energy efficiency metrics for GPT-5.3-Codex-Spark.

As the preview phase continues, OpenAI has indicated that future updates will include support for JetBrains IDEs, cloud-based collaborative coding sessions, and integration with CI/CD pipelines. The tool has clear potential to reshape software engineering workflows, but its long-term impact will depend on accessibility, reliability, and ethical safeguards as it moves from a limited preview to mainstream adoption.