NVIDIA’s DMS Technique Slashes LLM Inference Costs by 8x with No Accuracy Loss

NVIDIA has unveiled Dynamic Memory Sparsification (DMS), a breakthrough software technique that reduces LLM KV cache memory usage by up to 8x without compromising model accuracy. Combined with Blackwell hardware advancements, inference costs are now dropping by as much as 10x across major AI providers.

In a landmark development for large language model (LLM) inference, NVIDIA has introduced Dynamic Memory Sparsification (DMS), a novel software-based technique that cuts memory consumption during reasoning by up to a factor of eight, with no measurable loss in model accuracy. Announced on February 12, 2026, DMS redefines how LLMs manage their key-value (KV) cache, the memory-intensive component responsible for storing contextual information as models process long prompts. According to VentureBeat, the innovation enables models to think longer, respond faster, and serve more concurrent requests, making high-performance AI accessible to a broader range of hardware, including self-hosted systems.

DMS operates by introducing a learned "keep or evict" signal at each attention layer, allowing the model to dynamically determine which tokens in the KV cache retain relevance and which can be safely discarded. Unlike traditional approaches that evict tokens based on fixed heuristics or recency, DMS trains the model itself to recognize semantic importance, optimizing memory allocation in real time. Crucially, NVIDIA has augmented this with a "delayed eviction" mechanism: tokens flagged as low-importance are not immediately purged. Instead, they remain temporarily accessible, allowing the model to extract and compress their value into newer, more compact representations before final deletion. This subtle but powerful delay prevents the loss of latent context that often degrades reasoning quality in memory-constrained environments.
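The delayed-eviction idea can be illustrated with a toy cache. The following is a minimal sketch under stated assumptions, not NVIDIA's implementation: the class name `DelayedEvictionCache`, the `delay` parameter, and the boolean `keep` flag (standing in for the learned keep-or-evict signal) are all hypothetical, and the keys and values are placeholders rather than real attention tensors.

```python
from dataclasses import dataclass, field


@dataclass
class DelayedEvictionCache:
    """Toy KV cache in which flagged tokens survive for `delay` more tokens."""

    delay: int                                     # hypothetical grace period, in tokens
    entries: dict = field(default_factory=dict)    # position -> (key, value)
    deadlines: dict = field(default_factory=dict)  # position -> last position that may read it
    next_pos: int = 0

    def append(self, key, value, keep: bool) -> None:
        """Add one token; `keep` stands in for the learned keep-or-evict signal."""
        pos = self.next_pos
        self.entries[pos] = (key, value)
        if not keep:
            # Schedule the eviction instead of deleting immediately.
            self.deadlines[pos] = pos + self.delay
        self.next_pos += 1
        self._purge(newest=pos)

    def _purge(self, newest: int) -> None:
        """Drop flagged tokens whose grace period ended before `newest`."""
        expired = [p for p, last in self.deadlines.items() if last < newest]
        for p in expired:
            del self.entries[p]
            del self.deadlines[p]

    def positions(self) -> list:
        """Token positions currently held in the cache, oldest first."""
        return sorted(self.entries)


# Assumed keep/evict decisions for six tokens; positions 1 and 4 are flagged.
cache = DelayedEvictionCache(delay=2)
history = []
for flag in [True, False, True, True, False, True]:
    cache.append(key=None, value=None, keep=flag)
    history.append(cache.positions())
```

In this sketch the token at position 1 is flagged when it arrives but stays readable while positions 2 and 3 are processed, and it is dropped only once position 4 is appended. That grace period is the window in which, per the description above, later tokens can absorb the flagged token's information.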

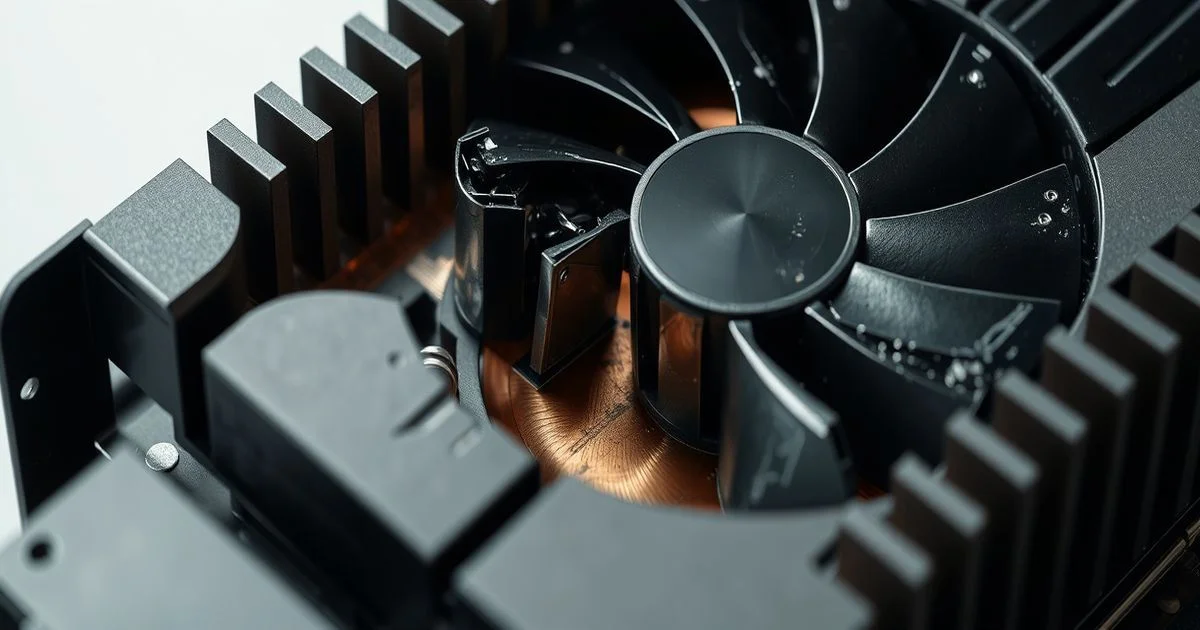

The implications are profound. KV cache memory has long been a bottleneck in LLM deployment, especially for models with context lengths exceeding 32K tokens, because the cache grows linearly with both context length and the number of concurrent requests. By reducing the KV cache footprint by up to 8x, DMS enables existing models like Llama 3, Mistral, and Gemma to run on hardware with half the VRAM, dramatically lowering the barrier to entry for enterprises and developers deploying AI locally. "This isn’t just about efficiency," said a senior researcher at NVIDIA’s AI Infrastructure Group, speaking on condition of anonymity. "It’s about sustainability. Reducing memory pressure means less power consumption, less heat, and longer hardware life cycles."
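A back-of-the-envelope calculation shows why the KV cache dominates at long context. This is a rough sketch using the standard sizing formula for a transformer KV cache; the model shape (32 layers, 8 KV heads, head dimension 128, 16-bit values) and the 16 GiB cache budget are illustrative assumptions, not figures from the report.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_value=2, batch=1):
    """Uncompressed KV cache size: one key and one value vector per layer, head, and token."""
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value * batch


GIB = 2**30

# Assumed model shape at a 32K-token context, 16-bit values, one sequence.
baseline = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=32 * 1024)
compressed = baseline // 8           # idealized 8x reduction in cache size

budget = 16 * GIB                    # hypothetical VRAM left over for the cache
seqs_before = budget // baseline     # sequences that fit without compression
seqs_after = budget // compressed    # sequences that fit with 8x compression

print(baseline / GIB, compressed / GIB, seqs_before, seqs_after)
```

Under these assumptions a single 32K-token sequence needs 4 GiB of cache, which falls to 0.5 GiB at 8x compression, so the same budget holds 32 sequences instead of 4. This is an idealized upper bound: model weights and activations are unaffected, so end-to-end gains in practice will be smaller.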

These software gains are synergistic with NVIDIA’s Blackwell architecture, which, according to a separate VentureBeat report published the same day, has enabled inference providers to reduce cost-per-token by 4x to 10x. While Blackwell’s enhanced tensor cores and memory bandwidth deliver raw performance gains, DMS represents the critical software counterpart that unlocks those gains at scale. "Hardware alone can’t solve the inference cost crisis," noted Sean Michael Kerner, author of the Blackwell analysis. "You need both: the engine and the fuel efficiency system. DMS is the latter."

Industry adoption is already accelerating. Major cloud providers, including AWS, Google Cloud, and Microsoft Azure, are testing DMS-enabled models in their inference pipelines. Early benchmarks show that a 70B-parameter LLM running on a single NVIDIA H100 with DMS can handle 5x more concurrent requests than the same model without the technique. For startups and open-source communities, this means running powerful models on consumer-grade GPUs like the RTX 4090 is now feasible, opening the door to truly decentralized AI.

Though DMS is currently being rolled out as a retrofit for existing transformer models, NVIDIA has indicated that future architectures will integrate the technique natively. The company has also open-sourced the core algorithm under a permissive license, encouraging community contributions. As AI becomes more ubiquitous, the marriage of intelligent memory management with powerful hardware may well define the next era of scalable, sustainable artificial intelligence.