New Quantized Builds of Devstral and Qwen3-Coder Deliver Power and Portability for Every Developer

ByteShape has released optimized GGUF quantizations of two open-source coding models, Mistral AI's Devstral-Small-2-24B and Alibaba's Qwen3-Coder-30B, tuned for hardware ranging from high-end GPUs down to the Raspberry Pi 5. Developers get a clear choice: maximum capability on powerful systems, or practical deployment on resource-constrained devices.

Devstral and Qwen3 Coder: A Dual-Strategy Approach to On-Device AI Coding

In a significant development for the local AI and open-source community, ByteShape has published quantized builds of two instruct-tuned coding models aimed at on-device AI programming. The Devstral-Small-2-24B-Instruct-2512 and Qwen3-Coder-30B-A3B-Instruct releases, both in GGUF format for llama.cpp compatibility, offer developers a strategic choice: top performance on high-end hardware, or strong efficiency on low-power devices like the Raspberry Pi 5.

According to the original release post on r/LocalLLaMA, the Devstral-Small-2-24B build targets NVIDIA RTX 40 and 50 series GPUs and uses ByteShape's ShapeLearn optimization to avoid the sharp quality drop, or "quality cliff," that appears around 2.30 bits per weight (bpw). On systems with enough VRAM, Devstral is the stronger option for code generation, context retention, and multi-step reasoning, which makes it a good fit for professional software engineers running complex local LLM workflows.
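To put the 2.30 bpw figure in perspective, the sketch below converts bits per weight into an approximate weight footprint. It assumes size ≈ parameters × bpw / 8 and ignores GGUF metadata, tensors kept at higher precision, and runtime buffers, so real files will be somewhat larger. `weight_gb` is a hypothetical helper for illustration, not part of any ByteShape tool.

```python
def weight_gb(params_billion: float, bpw: float) -> float:
    """Approximate quantized weight size in GB (10**9 bytes).

    Assumes every weight is stored at `bpw` bits; ignores metadata,
    higher-precision embedding/output tensors, and runtime buffers.
    """
    return params_billion * 1e9 * bpw / 8 / 1e9


if __name__ == "__main__":
    # A 24B-parameter model at illustrative bit widths, including the
    # ~2.30 bpw region where the quality cliff is reported.
    for bpw in (2.30, 3.0, 4.0, 16.0):
        print(f"24B @ {bpw:5.2f} bpw ~ {weight_gb(24, bpw):5.1f} GB")
```

At 2.30 bpw a 24B model needs roughly 6.9 GB for its weights, versus 48 GB at BF16 (16 bpw). That gap is why the exact bit width at which quality collapses matters so much when fitting a model onto a single consumer GPU.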

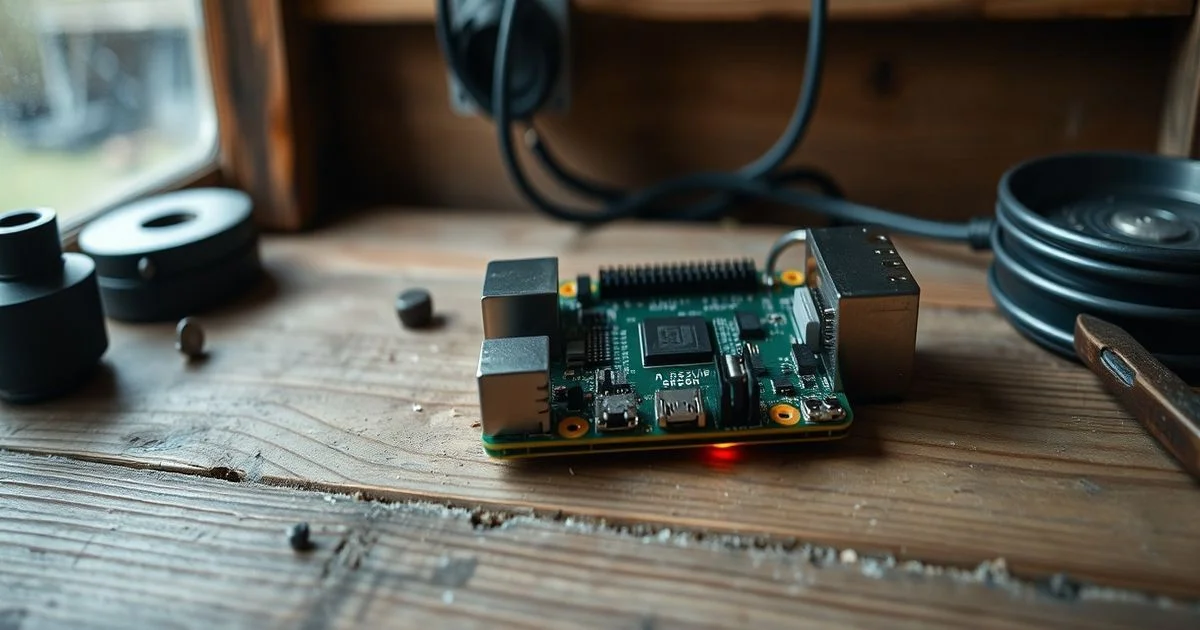

Conversely, Qwen3-Coder-30B is positioned as the universal option. Although it has more total parameters (about 30 billion), it is a mixture-of-experts (MoE) model that activates only about 3 billion of them per token (the "A3B" in its name), and ByteShape's quantization lets it run on minimal hardware. On a Raspberry Pi 5 with 16GB of RAM, it reaches approximately 9 tokens per second (TPS) while retaining around 90% of the BF16 model's quality, a result the developer described as "worthy of a medal" for users who rely on single-board computers for daily coding tasks. That is unusually high throughput for a model of this size on such constrained hardware.
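Why does the MoE design matter so much on a Pi? Single-user token generation is usually limited by memory bandwidth rather than compute, because each new token has to read the active weights from RAM. The sketch below turns that into a rough upper bound on TPS. The bandwidth (about 17 GB/s, roughly the Pi 5's theoretical LPDDR4X peak), the 3.5 bpw average, and the ~3.3B active-parameter count are illustrative assumptions, not figures from ByteShape's release.

```python
# Rough decode-speed ceiling for memory-bandwidth-bound inference:
# each generated token streams the *active* weights from RAM once,
# so tokens/s <= bandwidth / bytes read per token. Compute limits,
# KV-cache reads, and CPU cache effects are ignored.

PI5_BANDWIDTH_GB_S = 17.0  # assumption: approx. theoretical LPDDR4X peak
QUANT_BPW = 3.5            # assumption: illustrative average bit width


def bytes_per_token(active_params_billion: float, bpw: float) -> float:
    """Bytes of weights read to generate one token."""
    return active_params_billion * 1e9 * bpw / 8


def max_tps(active_params_billion: float, bpw: float, bandwidth_gb_s: float) -> float:
    """Upper bound on tokens per second when memory bandwidth is the bottleneck."""
    return bandwidth_gb_s * 1e9 / bytes_per_token(active_params_billion, bpw)


if __name__ == "__main__":
    moe = max_tps(3.3, QUANT_BPW, PI5_BANDWIDTH_GB_S)     # ~3.3B active (A3B)
    dense = max_tps(24.0, QUANT_BPW, PI5_BANDWIDTH_GB_S)  # all 24B active
    print(f"MoE, ~3.3B active: <= {moe:.1f} tok/s")
    print(f"Dense, 24B active: <= {dense:.1f} tok/s")
    # All ~30.5B MoE parameters must still fit in RAM, even if few are active.
    total_gb = 30.5 * QUANT_BPW / 8
    print(f"MoE weights in RAM: ~{total_gb:.1f} GB")
```

Under these assumptions the MoE model's ceiling is roughly seven times the dense model's (24 / 3.3 ≈ 7.3), which is the main reason a ~30B MoE model can be usable on a board where a dense 24B model would crawl. Real throughput lands below this ceiling and depends on achievable bandwidth and the exact quantization mix.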

The distinction between the two models is about design philosophy, not just size. Devstral is a dense model: all of its roughly 24 billion parameters are used for every token. It also carries a larger key-value (KV) cache, so long-context coding sessions demand more VRAM and memory bandwidth. Qwen3-Coder's MoE design and aggressive quantization give up a little peak quality in exchange for broad accessibility. As the Reddit post notes, the decision comes down to one question: does the context window you need fit in memory, and is the resulting TPS acceptable? If yes, choose Devstral. If not, Qwen3-Coder is the pragmatic fallback.
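The rule of thumb above can be written as a small check. This sketch estimates KV-cache memory with the standard per-token formula (2 × layers × KV heads × head dimension × bytes per element), adds the weight size and a fixed overhead, and picks Devstral only if everything fits and your measured TPS clears your threshold. The `ModelProfile` fields, the 1 GB overhead, and all example numbers are hypothetical placeholders; read the real layer and head counts from each GGUF's metadata and measure TPS on your own machine.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    name: str
    weights_gb: float  # size of the quantized GGUF weights
    n_layers: int      # transformer blocks
    n_kv_heads: int    # key/value heads (grouped-query attention)
    head_dim: int      # dimension of each attention head
    tps: float         # decode speed measured on your hardware


def kv_cache_gb(m: ModelProfile, context_tokens: int, bytes_per_elem: float = 2.0) -> float:
    """KV-cache size: K and V tensors for every layer and token (FP16 by default)."""
    per_token = 2 * m.n_layers * m.n_kv_heads * m.head_dim * bytes_per_elem
    return per_token * context_tokens / 1e9


def fits_and_fast(m: ModelProfile, context_tokens: int, mem_gb: float,
                  min_tps: float, overhead_gb: float = 1.0) -> bool:
    """True if weights + KV cache + overhead fit in memory and TPS is acceptable."""
    needed = m.weights_gb + kv_cache_gb(m, context_tokens) + overhead_gb
    return needed <= mem_gb and m.tps >= min_tps


def choose(devstral: ModelProfile, qwen: ModelProfile, context_tokens: int,
           mem_gb: float, min_tps: float) -> str:
    # The article's rule: Devstral if it fits and is fast enough, else Qwen3-Coder.
    # Note: this does not verify that the fallback itself fits.
    if fits_and_fast(devstral, context_tokens, mem_gb, min_tps):
        return devstral.name
    return qwen.name


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    devstral = ModelProfile("Devstral-Small-2-24B", 9.0, 40, 8, 128, tps=40.0)
    qwen = ModelProfile("Qwen3-Coder-30B", 13.0, 48, 4, 128, tps=60.0)
    for ctx in (32_768, 65_536):
        print(ctx, choose(devstral, qwen, ctx, mem_gb=16.0, min_tps=20.0))
```

With these placeholder numbers on a 16 GB card, Devstral's KV cache takes about 5.4 GB at 32K tokens, so it fits; at 64K tokens the cache grows to about 10.7 GB and the check falls back to Qwen3-Coder.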

Both models are distributed via Hugging Face in GGUF format, so they work with popular local inference engines such as llama.cpp, Ollama, and Text Generation WebUI. Notably, the Qwen3-Coder GGUFs ship with a custom prompt template that supports parallel tool calling, an important feature for agentic AI-assisted development environments. ByteShape has tested the template across multiple llama.cpp backends and is asking for community feedback to make sure it holds up in real-world coding workflows.
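For readers wiring the Qwen3-Coder template into an agent, here is a minimal client-side sketch of parallel tool calling. It assumes the model is served by llama.cpp's `llama-server` through its OpenAI-compatible `/v1/chat/completions` endpoint on the default port, with the GGUF's chat template enabled (for example via `--jinja`). The request sets the OpenAI-style `parallel_tool_calls` field, whose support may vary between server builds. The tools `read_file` and `run_tests` and all helper names are hypothetical. The key point is that a single assistant turn may contain several entries in `tool_calls`, and each result goes back as a `tool` message tagged with its `tool_call_id`.

```python
import json
import urllib.request

# Assumption: llama-server on its default port with an OpenAI-compatible API.
SERVER_URL = "http://localhost:8080/v1/chat/completions"


def _tool(name: str, description: str, properties: dict) -> dict:
    """Build an OpenAI-style function tool schema."""
    params = {"type": "object", "properties": properties}
    if properties:
        params["required"] = list(properties)
    return {"type": "function",
            "function": {"name": name, "description": description, "parameters": params}}


# Hypothetical tools for illustration.
TOOLS = [
    _tool("read_file", "Return the contents of a file.", {"path": {"type": "string"}}),
    _tool("run_tests", "Run the test suite and summarize results.", {}),
]


def build_request(prompt: str) -> dict:
    """Chat request that offers the tools and allows several calls per turn."""
    return {"messages": [{"role": "user", "content": prompt}],
            "tools": TOOLS, "parallel_tool_calls": True}


def send(payload: dict) -> dict:
    """POST the request to the server and return the decoded JSON reply."""
    req = urllib.request.Request(SERVER_URL, data=json.dumps(payload).encode(),
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def dispatch_tool_calls(response: dict, handlers: dict) -> list:
    """Execute every tool call in one assistant turn and build the tool replies."""
    message = response["choices"][0]["message"]
    replies = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        args = json.loads(fn.get("arguments") or "{}")
        replies.append({"role": "tool", "tool_call_id": call["id"],
                        "content": str(handlers[fn["name"]](**args))})
    return replies


if __name__ == "__main__":
    # Offline demo with a hand-written response containing two parallel calls.
    fake = {"choices": [{"message": {"role": "assistant", "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "read_file", "arguments": '{"path": "main.py"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "run_tests", "arguments": "{}"}},
    ]}}]}
    handlers = {"read_file": lambda path: f"<contents of {path}>",
                "run_tests": lambda: "3 passed"}
    for reply in dispatch_tool_calls(fake, handlers):
        print(reply)
```

In a real agent loop you would append the assistant message and these tool replies to `messages`, then call `send` again so the model can use the results.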

The release underscores a growing trend in open-source AI: specialization through optimization. Rather than chasing ever-larger models, companies like ByteShape are tuning existing models for specific hardware profiles. That broadens access to capable coding assistants, whether you are a researcher with an RTX 5090 or a hobbyist running AI tools on a Raspberry Pi.

For developers, this means more choice. Instead of compromising between capability and portability, they can pick the build that matches their hardware. With Devstral and Qwen3-Coder, local AI coding is not a single model but a spectrum, calibrated for every desk, lab, and backpack.

Resources:

Devstral GGUF Models

Qwen3-Coder GGUF Models

ByteShape Technical Blog