Local AI Lip Sync Breakthrough Achieved on Consumer GPU with LTX-2 Distilled Model

An independent AI artist has generated a high-resolution lip-sync video using the LTX-2 distilled model on a consumer-grade RTX 3090, demonstrating the growing capability of open-source generative AI for professional-grade video synthesis. The project, though imperfect, showcases advanced LoRA stacking and audio-driven motion techniques.

In a significant demonstration of the evolving power of open-source generative AI, an independent creator has successfully produced a multi-segment lip-sync video using the LTX-2 distilled model on a single consumer-grade NVIDIA RTX 3090 GPU. The project, shared on Reddit’s r/StableDiffusion community, reveals how advanced AI video synthesis is becoming accessible outside high-end research labs, even if the results remain experimental.

The creator, known online as /u/Inevitable_Emu2722, rendered three 30-second lip-sync segments alongside transitional clips, all at 1080p resolution and generated using just eight sampling steps, a notably low number for high-fidelity video generation. Despite the computational constraints, the output shows mouth movements synchronized with the audio input, a result that has historically required significant cloud-based rendering or proprietary software. The artist noted dissatisfaction with the final output, particularly the consistency of full-body shots, yet the technical achievement underscores rapid progress in local AI video generation.

The workflow leveraged a custom-configured pipeline combining audio-driven motion with image-to-video (I2V) techniques. The primary configuration, available on GitHub, integrates a specialized JSON workflow that orchestrates the synchronization of voice with facial and bodily motion. Central to the process was the LTX-2-Image2Vid-Adapter LoRA, hosted on Hugging Face, which enhances the model’s ability to translate static images into dynamic video sequences conditioned on audio input. This adapter, developed by MachineDelusions, is designed to improve temporal coherence and reduce artifacts in short-form video generation.

Additional LoRA models were stacked to refine detail and camera dynamics. The Lightricks-developed LTX-2-19b-IC-LoRA-Detailer was applied to sharpen facial features and improve texture fidelity, while two camera control LoRAs—Jib-Up and Static—enabled dynamic transitions between shot types without manual editing. This stacking strategy, now common among advanced Stable Diffusion practitioners, allows for modular control over multiple aspects of video output: motion, framing, and detail—all within a single inference pipeline.
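To make the stacking idea concrete, here is a minimal sketch of the general LoRA arithmetic: each adapter contributes a low-rank update `B @ A`, scaled by a strength, on top of the same base weight. This is not the creator's workflow or the LTX-2 code. The matrices, strengths, and the names `detail_lora` and `camera_lora` are toy values chosen for illustration, and real pipelines apply the same idea per layer inside the inference framework.

```python
# Minimal LoRA stacking sketch in pure Python (no external libraries).
# Matrices are lists of rows; every name and number here is illustrative.

def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def apply_loras(base, loras):
    """Return base + sum(strength * (B @ A)) over the stacked adapters."""
    merged = [row[:] for row in base]  # copy so the base weights stay untouched
    for strength, b_mat, a_mat in loras:
        delta = matmul(b_mat, a_mat)  # low-rank update for this adapter
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += strength * value
    return merged

# Toy 2x2 base weight and two rank-1 adapters (hypothetical stand-ins for
# a detail adapter and a camera adapter).
base = [[1.0, 0.0], [0.0, 1.0]]
detail_lora = (0.5, [[1.0], [0.0]], [[0.0, 2.0]])
camera_lora = (1.0, [[0.0], [1.0]], [[3.0, 0.0]])

stacked = apply_loras(base, [detail_lora, camera_lora])
print(stacked)  # [[1.0, 1.0], [3.0, 1.0]]
```

Because the updates are simply added, the order in which adapters are stacked does not change the merged weight in this simplified model. In practice, adapters that modify the same layers can still interfere with one another, which is why per-adapter strengths are usually tuned by hand.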

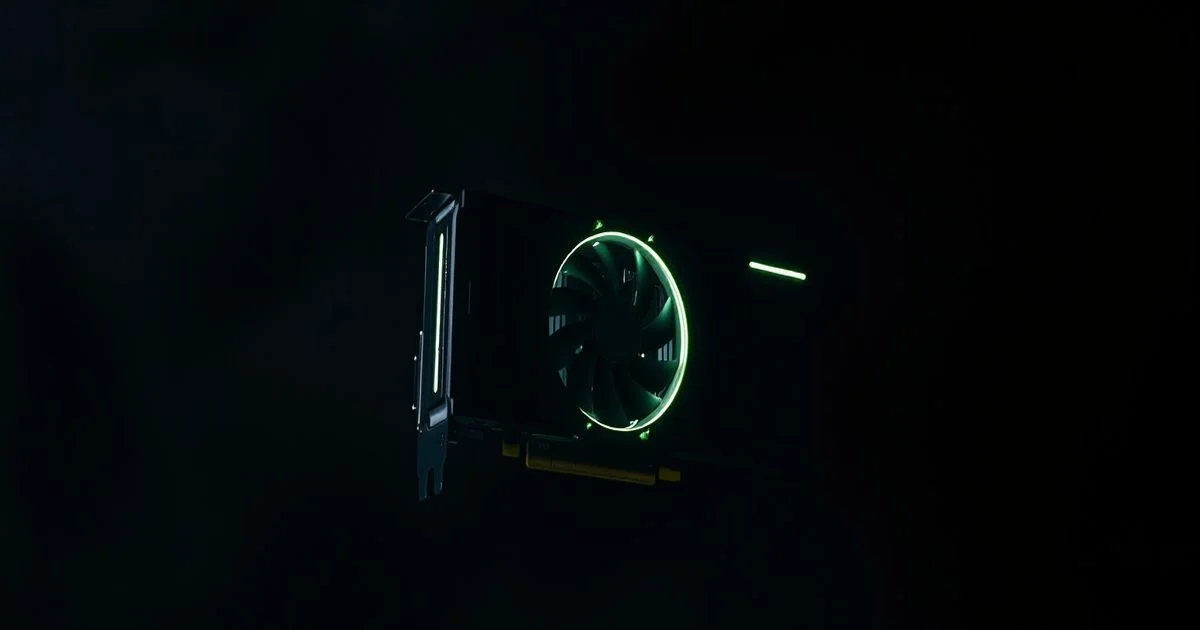

Rendering was performed entirely on a local machine equipped with an RTX 3090 and 96GB of DDR4 RAM, highlighting the feasibility of high-end AI video synthesis without reliance on cloud APIs or data-center hardware. While professional studios often use distributed computing clusters or specialized AI accelerators like NVIDIA H100s, this project suggests that consumer-grade hardware can still produce compelling results when paired with optimized models and workflows.
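A rough calculation shows why both the GPU and the large pool of system RAM matter. The sketch below estimates the memory needed for model weights alone at a few storage precisions and compares it with the RTX 3090's 24 GB of VRAM. The 19-billion parameter count is an assumption inferred from the "19b" in the LoRA name, and the precision the creator actually used is not stated. Activations, the text encoder, and the video decoder are ignored, so real usage would be higher.

```python
# Back-of-the-envelope weight-memory estimate (weights only).
GIB = 1024 ** 3

def weight_gib(params, bytes_per_param):
    """Memory in GiB needed to hold `params` weights at the given width."""
    return params * bytes_per_param / GIB

PARAMS = 19e9   # assumption: taken from "19b" in the LoRA name
VRAM_GIB = 24   # RTX 3090 memory, treated as GiB for simplicity

# Bytes per parameter for a few common storage formats.
formats = [("bf16", 2.0), ("fp8", 1.0), ("4-bit", 0.5)]
estimates = {name: weight_gib(PARAMS, width) for name, width in formats}

for name, gib in estimates.items():
    verdict = "fits in" if gib < VRAM_GIB else "exceeds"
    print(f"{name}: {gib:.1f} GiB of weights, {verdict} {VRAM_GIB} GiB VRAM")
```

Under these assumptions, 16-bit weights alone would not fit on the card, which is one plausible reason a setup like this pairs a 24 GB GPU with ample system RAM for offloading or relies on lower-precision weights.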

Post-production editing was handled in DaVinci Resolve, where the generated clips were stitched, color-corrected, and timed to match the audio waveform. This hybrid approach, AI generation followed by traditional editing, reflects an increasingly common pattern among independent creators seeking to balance creative control with computational efficiency.

As open-source models like LTX-2 become more distilled and efficient, the barrier to entry for AI-powered video production continues to drop. This development could let independent filmmakers, educators, and content creators produce synthetic media with far less infrastructure than before. However, it also raises ethical questions around deepfake proliferation and the authenticity of digital media.

While the creator admits the results are not yet polished enough for commercial use, the technical blueprint is publicly available and has already sparked discussion among AI video researchers. With further refinement, such locally-run pipelines could redefine how synthetic video is produced—not as a service, but as a tool in every creator’s toolkit.