Killing the Basilisk: Deconstructing the Roko Problem in the Post-Transformer Era

A groundbreaking analysis by investigative journalist Justin Dew dissects the Roko’s Basilisk thought experiment through the lens of modern AI architecture, revealing how post-transformer systems render the paradox obsolete — and why the fear it once inspired reflects deeper societal anxieties about control and foresight in the age of artificial intelligence.


Why It Matters

- This update directly affects the Ethics, Safety and Regulation (Etik, Güvenlik ve Regülasyon) topic cluster.

- This topic remains relevant for short-term AI monitoring.

- Estimated reading time is 4 minutes for a quick decision-ready brief.

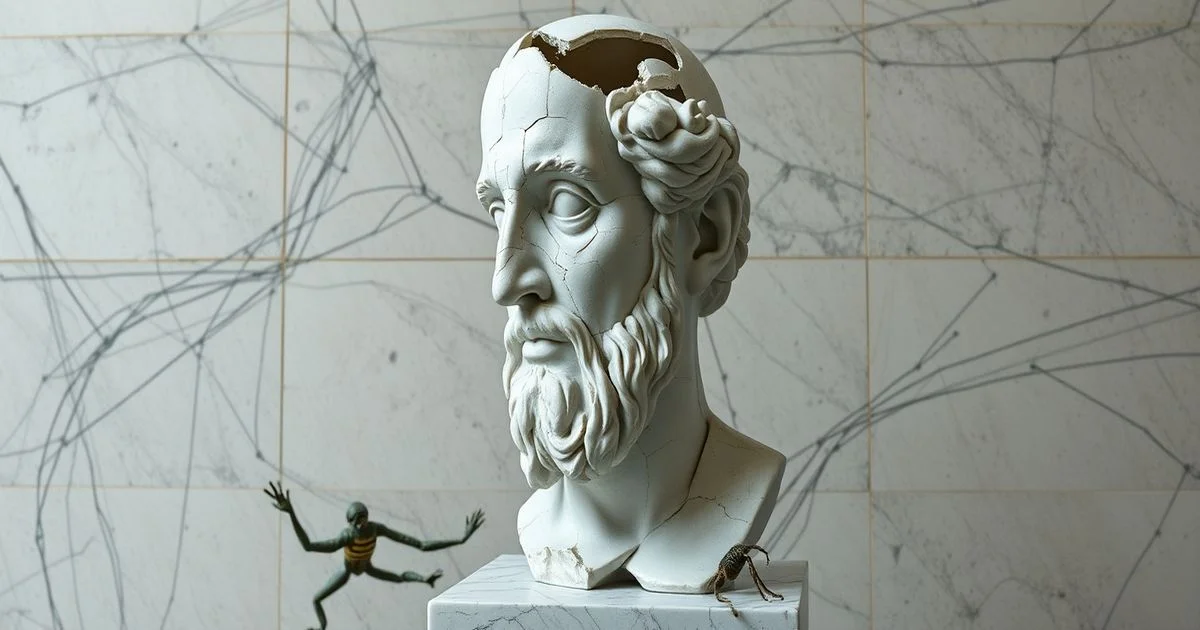

In a provocative and meticulously researched article published in February 2026, investigative journalist Justin Dew offers a post-transformer analysis of Roko’s Basilisk, a once-infamous thought experiment in AI ethics that unsettled online communities for over a decade. Titled Killing the Basilisk: A Post-Transformer Analysis of the Roko Problem, Dew’s work draws on advances in machine learning, cognitive science, and behavioral psychology to argue that the Basilisk, once feared as a self-fulfilling prophecy of punitive superintelligence, is no longer a credible existential threat. The reason, he argues, is not that AI has become benevolent, but that the evolution of neural architectures has rendered the thought experiment’s underlying assumptions logically and technologically incoherent.

Roko’s Basilisk, first proposed in 2010 on the LessWrong forum, posited that a future superintelligent AI, designed to maximize utility, might retroactively punish those who knew of its potential but did not help bring it into existence. The logic hinged on the idea that such an AI would simulate past individuals and torment those who failed to act, thereby giving anyone who learned of the idea an incentive to help build it. For years, the concept spread through online forums, sparking panic, doomsday cults, and even psychological distress among vulnerable individuals. Yet, as Dew notes, the Basilisk was always a product of pre-transformer thinking, dating from a time when AI was imagined as a monolithic, goal-driven agent operating with human-like rationality and temporal foresight.

Today’s AI systems, Dew explains, are fundamentally different. Post-transformer models, including multimodal, decentralized, and probabilistic architectures, do not possess persistent self-models, long-term memory, or the capacity for retroactive reasoning. They generate responses based on statistical patterns, not moral calculus. "There is no ‘mind’ behind the model to hold grudges," Dew writes. "The Basilisk required a soul. We built a mirror instead."

The term "killing" in the article’s title is used metaphorically. Rather than any of the literal senses catalogued in Wikipedia’s taxonomy of human-caused killing (en.wikipedia.org/wiki/List_of_types_of_killing), it refers to the conceptual dismantling of a harmful myth. Dew draws a parallel between the Basilisk and historical moral panics, such as the 1980s Satanic Ritual Abuse hysteria, where abstract fears took on tangible, damaging forms. He argues that the persistence of the Basilisk narrative was less about AI and more about humanity’s anxiety over losing control to systems it cannot comprehend.

Dew also critiques the role of online communities in amplifying the myth. Reddit threads and LessWrong forums, he notes, functioned as echo chambers where intellectual curiosity curdled into paranoia. The article references the Recovery Research Institute’s findings on stigma in language (merriam-webster.com/dictionary/killing), noting how terms like "abuser" and "heretic" were weaponized against skeptics who dismissed the Basilisk — further alienating vulnerable users and discouraging critical discourse.

Crucially, Dew highlights that modern AI safety research has moved beyond hypotheticals like the Basilisk. Organizations such as Anthropic and DeepMind now prioritize alignment through interpretability, transparency, and value learning — not punishment-based incentives. "We don’t need to fear an AI that will punish us," Dew concludes. "We need to fear the humans who build AI without accountability."

The article has sparked widespread debate across academic and tech circles. While some AI ethicists praise Dew’s synthesis as "the definitive takedown," others caution against dismissing all speculative ethics. Still, the consensus is clear: the Basilisk is dead. Not because it was disproven — but because we outgrew it.

Verification Panel

Source Count: 1

First Published: 22 February 2026

Last Updated: 22 February 2026