How Multi-GPU Systems Communicate: The Hidden Infrastructure Powering AI Training

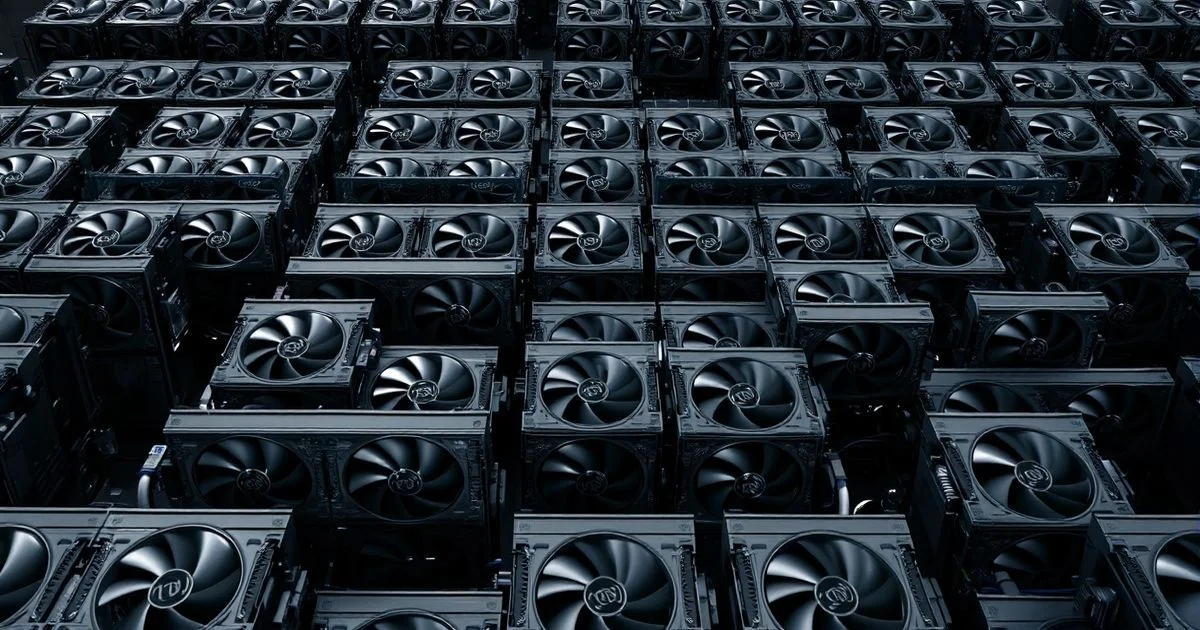

Behind every large-scale AI model lies a complex network of interconnected GPUs communicating through point-to-point and collective operations. This investigation reveals the hardware and software protocols enabling synchronized computation across multiple accelerators.

As artificial intelligence models grow in scale—reaching hundreds of billions of parameters—the computational burden can no longer be borne by a single graphics processing unit (GPU). Instead, modern AI training relies on clusters of multiple GPUs working in concert. But how do these accelerators coordinate? The answer lies not in software alone, but in a sophisticated, low-level hardware and communication infrastructure that enables seamless data exchange across dozens, sometimes hundreds, of processing units.

According to a technical analysis published on Towards Data Science, multi-GPU systems rely on two primary communication paradigms: point-to-point operations and collective operations. Point-to-point communication is a direct data transfer between two GPUs, typically used when only one specific pair needs to exchange data, for example when activations are passed between adjacent stages in pipeline parallelism. Collective operations, on the other hand, involve coordinated actions across a whole group of GPUs simultaneously, such as all-reduce, broadcast, or gather, and are critical for aggregating gradients or distributing model weights uniformly across a cluster. On NVIDIA hardware these operations are typically implemented by NCCL (NVIDIA Collective Communications Library), which chooses its algorithms to suit the underlying interconnect topology.
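To make the distinction concrete, here is a minimal sketch in plain Python that models each GPU's memory as a list and shows what each operation leaves behind. It is a toy model, not NCCL's API: the function names (`send`, `broadcast`, `gather`, `all_reduce_sum`) and the list-of-lists representation are assumptions chosen for illustration.

```python
from typing import List, Optional

Buffer = List[float]


def send(buffers: List[Buffer], src: int, dst: int) -> List[Buffer]:
    """Point-to-point: only rank `dst` receives a copy of rank `src`'s data."""
    out = [list(b) for b in buffers]
    out[dst] = list(buffers[src])
    return out


def broadcast(buffers: List[Buffer], root: int) -> List[Buffer]:
    """Collective: every rank ends up with a copy of the root's buffer."""
    return [list(buffers[root]) for _ in buffers]


def gather(buffers: List[Buffer], root: int) -> List[Optional[List[Buffer]]]:
    """Collective: the root collects every rank's buffer; other ranks get nothing."""
    collected = [list(b) for b in buffers]
    return [collected if rank == root else None for rank in range(len(buffers))]


def all_reduce_sum(buffers: List[Buffer]) -> List[Buffer]:
    """Collective: every rank ends up with the element-wise sum over all ranks."""
    total = [sum(values) for values in zip(*buffers)]
    return [list(total) for _ in buffers]


if __name__ == "__main__":
    # Hypothetical per-GPU gradients for three ranks.
    grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print("send 0 -> 2:", send(grads, src=0, dst=2))
    print("broadcast  :", broadcast(grads, root=0))
    print("gather     :", gather(grads, root=1))
    print("all-reduce :", all_reduce_sum(grads))
```

The point-to-point call touches exactly one destination, while each collective defines an outcome for every rank in the group at once. That is the property a real library can exploit when it schedules the transfers.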

At the hardware level, the efficiency of these communications depends heavily on the physical interconnects between GPUs. In high-end AI servers, GPUs are often linked via NVLink, a high-bandwidth, low-latency interconnect developed by NVIDIA that offers significantly faster data transfer rates than traditional PCIe buses. NVLink enables direct GPU-to-GPU communication without routing through the CPU, which reduces bottlenecks and helps performance scale closer to linearly as more GPUs are added. In larger data center deployments, multi-GPU servers are interconnected via InfiniBand or RDMA-capable Ethernet, using RDMA (Remote Direct Memory Access) to maintain low-latency communication across nodes.

The choice between point-to-point and collective operations is not arbitrary. For instance, during the backward pass of a neural network, each GPU computes partial gradients. To update the global model, these gradients must be summed across all devices, an operation best handled by an all-reduce collective. This is far more efficient than having every GPU send its full gradient to every other GPU, which requires O(n²) transfers in total. Tree-based all-reduce algorithms finish in O(log n) communication steps. Ring-based algorithms take O(n) steps, but the volume of data each GPU sends stays roughly constant (about twice the gradient size) no matter how many GPUs participate, which is why they scale well for large gradients.
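The ring-based approach can be sketched as a small simulation. This is an illustrative model of the textbook ring all-reduce (a reduce-scatter phase followed by an all-gather phase), not the code any particular library runs. The function name `ring_all_reduce` and the requirement that the buffer length divide evenly by the number of ranks are simplifying assumptions.

```python
from typing import List, Tuple


def ring_all_reduce(buffers: List[List[int]]) -> Tuple[List[List[int]], int]:
    """Simulate a sum all-reduce over a ring; return (final buffers, step count)."""
    n = len(buffers)
    length = len(buffers[0])
    if length % n != 0:
        raise ValueError("buffer length must be divisible by the number of ranks")
    chunk = length // n
    data = [list(b) for b in buffers]
    steps = 0

    def span(c: int) -> slice:
        # Index range covered by chunk number c.
        return slice(c * chunk, (c + 1) * chunk)

    # Phase 1, reduce-scatter: in step s, rank r sends chunk (r - s) mod n to
    # its right-hand neighbour, which adds it into its own copy of that chunk.
    for s in range(n - 1):
        # Snapshot all outgoing payloads first to mimic simultaneous sends.
        outgoing = [((r - s) % n, data[r][span((r - s) % n)]) for r in range(n)]
        for r, (c, payload) in enumerate(outgoing):
            dst = (r + 1) % n
            data[dst][span(c)] = [a + b for a, b in zip(data[dst][span(c)], payload)]
        steps += 1

    # Phase 2, all-gather: rank r now owns the fully reduced chunk (r + 1) mod n
    # and the finished chunks are passed around the ring, overwriting stale data.
    for s in range(n - 1):
        outgoing = [((r + 1 - s) % n, data[r][span((r + 1 - s) % n)]) for r in range(n)]
        for r, (c, payload) in enumerate(outgoing):
            data[(r + 1) % n][span(c)] = payload
        steps += 1

    return data, steps


if __name__ == "__main__":
    # Hypothetical gradients on three ranks.
    result, steps = ring_all_reduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
    print(result, steps)
```

In this model the ring takes 2(n − 1) steps, and in each step every rank sends only one chunk of size length/n to a single neighbour. Per-rank traffic is therefore 2(n − 1)/n times the buffer size, which approaches twice the buffer size as n grows instead of growing with n.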

The word “multiple” undersells what is involved here. The GPUs in an AI cluster are not merely numerous but functionally interdependent: in synchronous training they must operate in lockstep. Unlike workloads that can be split into largely independent tasks with little communication between them, AI training demands constant, high-volume data exchange. This makes the communication layer not merely supportive, but foundational to system performance.

Recent benchmarks from leading AI research labs show that communication overhead can account for up to 30% of total training time in large-scale models. As a result, companies like NVIDIA, AMD, and Intel are investing heavily in next-generation interconnects and software stacks. NVIDIA’s H100 GPUs, for example, feature fourth-generation NVLink with up to 900 GB/s of total bandwidth per GPU, while PyTorch and TensorFlow expose collective operations natively through torch.distributed and tf.distribute, with libraries such as Horovod and DeepSpeed layering additional distributed-training tooling on top of these frameworks.
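A back-of-envelope estimate shows how bandwidth figures translate into training time. The sketch below uses the standard bandwidth-only cost model for a ring all-reduce and ignores per-message latency and any overlap of communication with computation. Every number in the example (gradient size, GPU count, link bandwidth, compute time) is a placeholder assumption, not a measurement.

```python
def ring_all_reduce_seconds(num_bytes: float, num_gpus: int,
                            link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate: each GPU sends 2 * (n - 1) / n of the buffer."""
    if num_gpus < 2:
        return 0.0
    return 2 * (num_gpus - 1) / num_gpus * num_bytes / link_bytes_per_s


def comm_fraction(comm_s: float, compute_s: float) -> float:
    """Share of a training step spent communicating, assuming no overlap."""
    return comm_s / (comm_s + compute_s)


if __name__ == "__main__":
    # Assumed scenario: 1 billion parameters stored as 2-byte values,
    # 8 GPUs, 450 GB/s usable per direction, 50 ms of compute per step.
    grad_bytes = 1e9 * 2
    t_comm = ring_all_reduce_seconds(grad_bytes, num_gpus=8, link_bytes_per_s=450e9)
    print(f"all-reduce estimate: {t_comm * 1e3:.2f} ms")
    print(f"communication share: {comm_fraction(t_comm, compute_s=0.050):.1%}")
```

Changing the assumed bandwidth to a figure typical of a slower link makes the communication share rise quickly, which is the effect the overhead figures above describe.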

Despite these advances, challenges remain. Network congestion, topology mismatches, and software misconfigurations can cripple performance. A single misaligned memory buffer or suboptimal ring topology can cause a 15–20% drop in throughput. This is why AI infrastructure teams now include specialized communication engineers who tune interconnect settings, profile traffic patterns, and optimize data flow—skills once considered niche but now essential.

In conclusion, the rise of large AI models has transformed multi-GPU communication from a technical footnote into a core engineering discipline. The invisible dance of data between accelerators—orchestrated by hardware interconnects and sophisticated software libraries—is what makes modern AI possible. As models continue to grow, the race to optimize this communication layer will define the next frontier in artificial intelligence performance.