From Crypto Mining to AI Powerhouse: 800 RX 580s Repurposed for Low-Cost Visual AI

A grassroots tech team has turned 800 retired Ethereum mining GPUs into a massively parallel AI inference cluster that analyzes documents and video at roughly one twenty-fourth the cost of cloud APIs. The project relies on Vulkan and a custom-built Linux stack to make affordable AI work at scale.

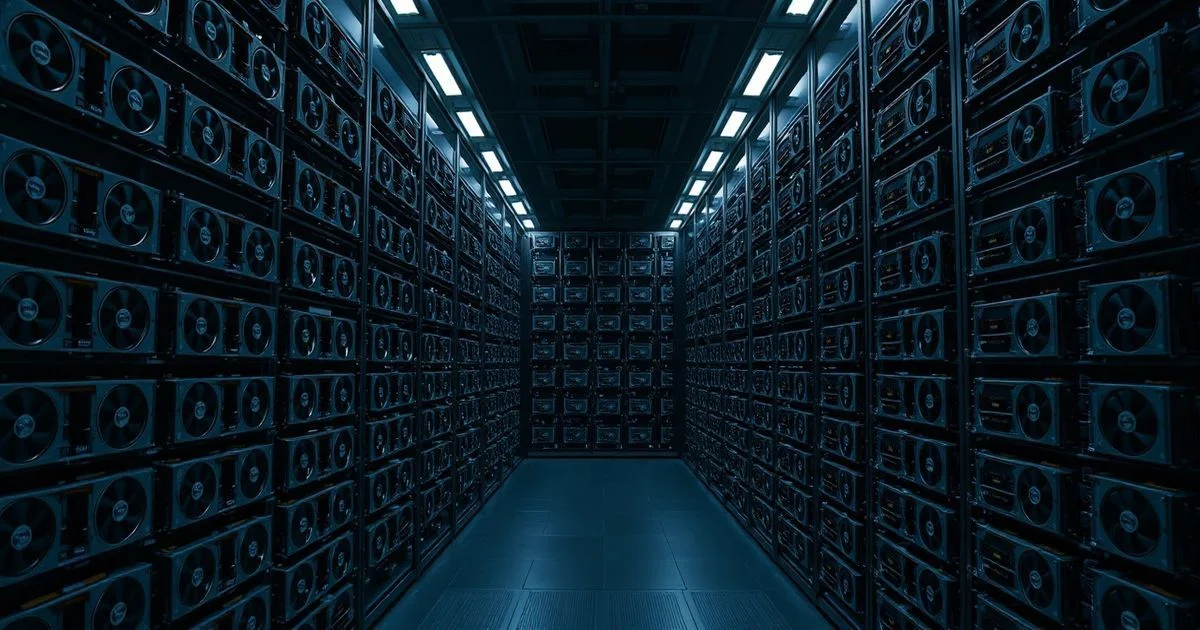

In a notable feat of hardware repurposing, a private tech collective has converted an abandoned Ethereum mining farm into a high-throughput artificial intelligence cluster using 800 AMD Radeon RX 580 graphics cards. Written off as obsolete after crypto mining profitability collapsed, these GPUs were originally purchased for their raw hash rate. They now run a modern vision-language model at a fraction of the cost of commercial cloud alternatives.

The project, spearheaded by a team of engineers and AI researchers, ruled out conventional multi-GPU parallelism because of the RX 580s’ severe PCIe bandwidth limitations and the lack of ROCm multi-GPU support on this hardware. Instead, the team adopted a fully decentralized design: each GPU operates as an independent inference worker, handling one document page or video frame at a time. Because no task depends on another, this "embarrassingly parallel" model lets the cluster process 800 tasks simultaneously with near-linear scalability.
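The one-worker-per-GPU pattern can be sketched in a few lines of Python. This is a minimal illustration, not the team's code: `run_inference` is a hypothetical stand-in for whatever call sends a page or frame to the inference process bound to a given GPU, and the shared queue is just one simple way to hand out independent tasks.

```python
import queue
import threading


def run_inference(gpu_id: int, task: str) -> str:
    """Hypothetical placeholder for a request to the model running on one GPU."""
    return f"gpu{gpu_id}:{task}"


def process_all(tasks, num_gpus):
    """Run every task on whichever GPU worker is free; results keep input order."""
    tasks = list(tasks)
    pending = queue.Queue()
    for index, task in enumerate(tasks):
        pending.put((index, task))
    results = [None] * len(tasks)

    def worker(gpu_id):
        # Each worker owns exactly one GPU and pulls tasks until none remain.
        while True:
            try:
                index, task = pending.get_nowait()
            except queue.Empty:
                return
            results[index] = run_inference(gpu_id, task)

    threads = [threading.Thread(target=worker, args=(g,)) for g in range(num_gpus)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


pages = [f"page-{i}" for i in range(10)]
outputs = process_all(pages, num_gpus=4)
```

Because workers never exchange data, adding GPUs only adds more threads pulling from the same queue, which is why throughput can scale close to linearly.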

According to the team’s technical documentation, the primary challenge was running modern language models on aging Polaris-architecture (GFX803) GPUs under Linux. ROCm, AMD’s official GPU compute stack, failed to recognize more than one GPU per system because of a PCIe atomics incompatibility. The team’s solution was to build a custom Linux graphics stack entirely from source: Mesa 24.2.0 with the RADV Vulkan driver, libdrm 2.4.121, Wayland 1.22, and the Vulkan SDK 1.3.283. With that stack in place, the team could run llama.cpp with its Vulkan backend and sidestep ROCm altogether.

The Celeron G3950 CPUs powering each rig also lack AVX and AVX2 instructions, forcing the team to disable the corresponding CPU optimizations in the llama.cpp build. The build flags were set to `-DGGML_NATIVE=OFF -DGGML_AVX=OFF -DGGML_AVX2=OFF -DGGML_FMA=OFF -DGGML_F16C=OFF -DGGML_SSE42=ON`, with `CXXFLAGS="-march=x86-64 -mtune=generic"`, so that the same binary runs reliably on every node in the cluster.
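As a rough illustration of how such flags could be derived rather than hand-written, the sketch below maps the CPU feature names Linux lists in `/proc/cpuinfo` to the GGML options quoted above. The helper names `parse_cpu_flags` and `ggml_cmake_flags` are hypothetical, and the article does not say the team generated its flags this way.

```python
def parse_cpu_flags(cpuinfo_text: str) -> set:
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()


def ggml_cmake_flags(cpu_flags: set) -> list:
    """Enable a GGML CPU option only when the matching instruction set is present."""
    def toggle(option: str, cpu_flag: str) -> str:
        state = "ON" if cpu_flag in cpu_flags else "OFF"
        return f"-D{option}={state}"

    return [
        "-DGGML_NATIVE=OFF",  # never tune for the build machine's own CPU
        toggle("GGML_AVX", "avx"),
        toggle("GGML_AVX2", "avx2"),
        toggle("GGML_FMA", "fma"),
        toggle("GGML_F16C", "f16c"),
        toggle("GGML_SSE42", "sse4_2"),
    ]


# Illustrative cpuinfo excerpt for a CPU with SSE4.2 but no AVX family support.
sample = "processor\t: 0\nflags\t\t: fpu sse sse2 ssse3 sse4_1 sse4_2\n"
flags = ggml_cmake_flags(parse_cpu_flags(sample))
```

On a real Linux host the input would come from `open("/proc/cpuinfo").read()`. For a fleet, the flags should reflect the least capable CPU in use, since a binary built with AVX2 enabled crashes with an illegal-instruction error on a CPU that lacks it.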

The chosen model, Qwen3-VL-8B-Instruct in q4 quantization, fits comfortably within the RX 580’s 8 GB of VRAM and leaves room for a context of up to 6,000 tokens. The cluster now processes high-resolution scanned documents, including medical textbooks, legal depositions, and technical manuals. In a benchmark on a 966-page ophthalmology text full of cursive annotations, tables, and diagrams, the team reports near-human-level text extraction quality at about $0.50 per run in electricity, compared with $12 via OpenAI’s API, a 24x difference.

The system employs a tiered quality escalation model: q4 (175 DPI) for standard pages, q8 (200 DPI) for complex layouts, bf16 (250 DPI) for high-fidelity needs, and bf16 across six GPUs (300 DPI) for archival-grade scans. Only 15% of pages require escalation beyond tier one, making the system both efficient and economical.
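The escalation logic can be pictured as a loop that tries the cheapest tier first and moves up only when the output fails a quality check. The sketch below uses the four tiers described above; the `run_tier` and `is_good_enough` callbacks are hypothetical, since the article does not describe how the team decides that a page needs escalation.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class Tier:
    name: str   # label used here for illustration only
    quant: str  # model precision
    dpi: int    # scan resolution fed to the model


# Tiers as listed in the article, cheapest first.
TIERS = [
    Tier("standard", "q4", 175),
    Tier("complex-layout", "q8", 200),
    Tier("high-fidelity", "bf16", 250),
    Tier("archival-6gpu", "bf16", 300),
]


def extract_with_escalation(
    page: str,
    run_tier: Callable[[str, Tier], str],
    is_good_enough: Callable[[str], bool],
) -> Tuple[Tier, str]:
    """Try each tier in order and stop at the first acceptable result."""
    text = ""
    for tier in TIERS:
        text = run_tier(page, tier)
        if is_good_enough(text):
            return tier, text
    # Nothing passed the check: return the highest tier's output anyway.
    return TIERS[-1], text


# Toy stand-ins: pretend extraction only succeeds at 200 DPI or above.
fake_run = lambda page, tier: f"{page}@{tier.dpi}"
fake_check = lambda text: int(text.split("@")[1]) >= 200
tier_used, text = extract_with_escalation("page-7", fake_run, fake_check)
```

With only 15% of pages leaving the first tier, most work stays on the cheapest configuration and the expensive tiers act as a fallback.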

A parallel video frame analysis pipeline is currently in development. A 60-second video sampled at 13 fps yields 780 frames, so each frame can be assigned to its own GPU for visual description with 20 GPUs to spare. The team is now building temporal clustering algorithms to track entities and events across frames, a precursor to automated surveillance, quality assurance, and content moderation systems. If successful, the team believes this could disrupt the $20B+ video analytics market by offering inference at $0.01 per frame.
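The frame arithmetic is easy to check. The following sketch computes the sample timestamps for a clip and assigns each frame to a GPU index; the round-robin assignment is an assumption made for illustration, not a description of the team's scheduler.

```python
def frame_timestamps(duration_s: int, fps: int) -> list:
    """Timestamps (in seconds) of the frames sampled from a clip."""
    return [i / fps for i in range(duration_s * fps)]


def assign_frames(num_frames: int, num_gpus: int) -> dict:
    """Map frame index to GPU index, wrapping around if frames outnumber GPUs."""
    return {frame: frame % num_gpus for frame in range(num_frames)}


timestamps = frame_timestamps(duration_s=60, fps=13)
assignment = assign_frames(len(timestamps), num_gpus=800)

print(len(timestamps))                # 780
print(len(set(assignment.values())))  # 780: every frame has its own GPU
```

For longer clips the modulo wraps around, so some GPUs would process several frames in sequence.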

With hardware acquisition costs estimated at $80 per 8 GB of VRAM (about $10 per GB) versus $365 per GB for an H100, the project demonstrates how legacy infrastructure, when creatively rearchitected, can beat cutting-edge AI hardware on cost for tasks like these. The team has open-sourced its Docker build scripts and invites collaboration, emphasizing ethical use: "We’re not building a black box; we’re building a public utility."
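Putting the article's figures on a common per-GB basis makes the comparison easier to read. The short calculation below uses only numbers quoted in this article; the variable and function names are illustrative.

```python
def cost_per_gb(price_usd: float, vram_gb: float) -> float:
    """Acquisition cost per gigabyte of VRAM."""
    return price_usd / vram_gb


rx580_per_gb = cost_per_gb(80, 8)       # $80 buys 8 GB of RX 580 VRAM
h100_per_gb = 365.0                     # figure quoted for an H100
vram_ratio = h100_per_gb / rx580_per_gb

run_ratio = 12.00 / 0.50                # API cost vs. electricity for the 966-page run
cost_per_page = 0.50 / 966

print(rx580_per_gb)  # 10.0
print(vram_ratio)    # 36.5
print(run_ratio)     # 24.0
print(f"{cost_per_page:.4f}")  # 0.0005
```

These ratios compare acquisition and electricity costs only; they say nothing about throughput per GPU, which is far higher on an H100.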

As enterprise AI costs soar and climate-conscious computing gains traction, this grassroots initiative offers a blueprint for sustainable, decentralized AI infrastructure. It suggests that innovation does not always require the latest silicon; sometimes it requires rethinking how existing hardware is used.