Can Local AI Tools Match Seedance 2.0’s Cinematic Video Generation?

As users seek affordable, locally run alternatives to the cloud-based Seedance 2.0 platform, open-source AI video generators are emerging as viable contenders. Experts and enthusiasts are debating whether these tools can replicate its cinematic quality without subscription fees.

Amid growing interest in AI-generated cinematic video, users are increasingly looking for alternatives to Seedance 2.0, a cloud-based platform praised for rendering Hollywood-quality scenes from simple text prompts. A recent Reddit thread by user Lichnaught sparked wide discussion by asking whether any local, offline tool could match Seedance 2.0’s visual fidelity without a paid subscription. The question reflects a broader trend: creators want advanced AI video tools while keeping their data private, cutting costs, and avoiding reliance on proprietary cloud services.

While Seedance 2.0 remains a proprietary system with no public API or local deployment option, several open-source frameworks are rapidly closing the gap in quality and accessibility. Tools such as VideoCrafter, AnimateDiff, and Stable Video Diffusion (SVD) now let users with high-end GPUs generate multi-second video sequences from text or image prompts directly on their own computers. These models, built on latent diffusion architectures derived from or closely related to Stable Diffusion, have been tuned for temporal coherence and motion realism, capabilities once largely confined to commercial platforms like Seedance 2.0.

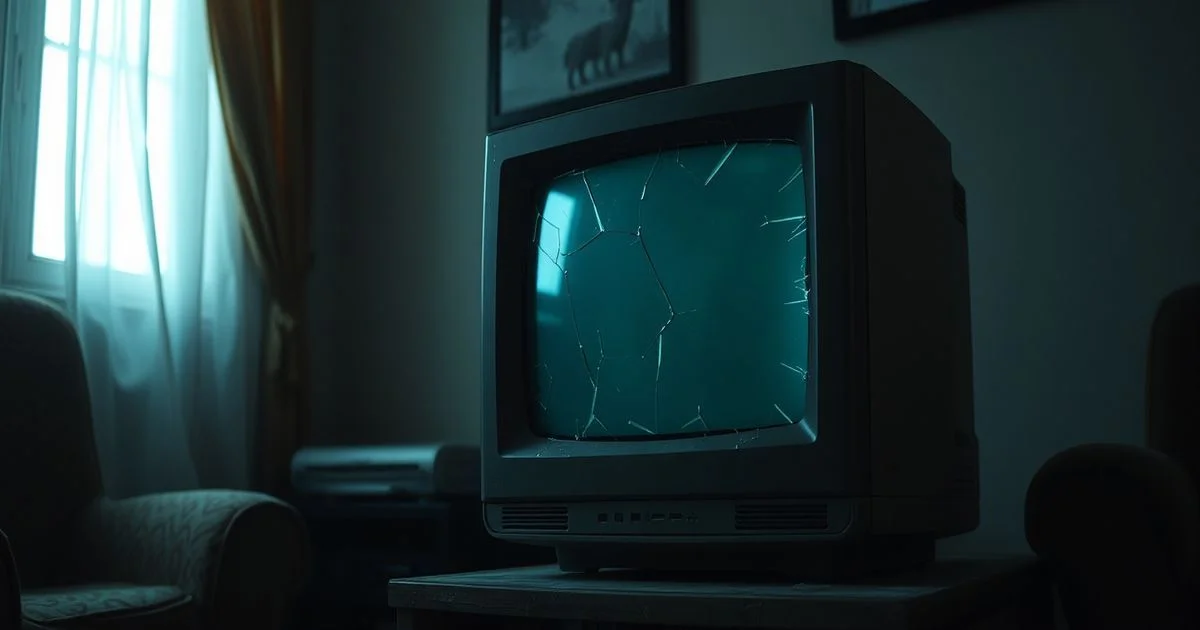

According to community reports on forums such as Reddit’s r/StableDiffusion and Hugging Face, users with NVIDIA RTX 4090 or AMD Radeon RX 7900 XTX systems are producing results that some compare favorably with early Seedance 2.0 demos. One user, running VideoCrafter v2 at 512x512 resolution with 16-frame output, generated a 3-second clip of a character walking through a dystopian cityscape, a scene strikingly similar to clips circulating online under the Seedance 2.0 banner. These outputs still lack the polished lighting and dynamic camera motion of Seedance’s proprietary pipeline, but they require no subscription, no data upload, and no third-party cloud processing.
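The clip figures above involve some simple frame arithmetic. The sketch below is a minimal illustration, assuming a model that emits a fixed 16-frame window per pass and that longer clips are stitched from non-overlapping windows (both are simplifying assumptions, not a description of VideoCrafter’s internals). It shows what a 3-second, 16-frame clip implies about playback rate, and how many windows a 24 fps clip of the same length would need. The function names are hypothetical.

```python
import math


def implied_fps(num_frames: int, duration_s: float) -> float:
    """Playback rate needed to spread num_frames evenly over duration_s seconds."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return num_frames / duration_s


def frames_for(duration_s: float, fps: float) -> int:
    """Frames required for a clip of duration_s seconds at the given fps (rounded up)."""
    return math.ceil(duration_s * fps)


def windows_needed(total_frames: int, window: int = 16) -> int:
    """Non-overlapping fixed-size generation windows needed to cover total_frames.

    The 16-frame default is an assumption taken from the example in the text.
    """
    return math.ceil(total_frames / window)


if __name__ == "__main__":
    print(f"{implied_fps(16, 3):.2f} fps")    # 16 frames over 3 s -> 5.33 fps
    print(frames_for(3, 24))                  # a 3 s clip at 24 fps -> 72 frames
    print(windows_needed(frames_for(3, 24)))  # 72 frames / 16 per window -> 5 windows
```

At roughly 5.3 fps, a 16-frame, 3-second clip sits well below cinematic frame rates, which is one reason local workflows may add a frame-interpolation step. Real pipelines also tend to overlap windows to hide seams between them, which this sketch ignores.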

However, limitations persist. Local models demand significant computational resources: a single 4-second video at 24 fps (96 frames) can consume over 12 GB of VRAM and take 10–20 minutes to render. Temporal consistency, the ability to keep object shapes and motion continuous across frames, also remains a challenge. Seedance 2.0 reportedly uses proprietary motion interpolation and physics-based rendering techniques that open-source equivalents have not yet replicated.
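Temporal consistency is usually judged by eye, but a crude numerical proxy helps make the idea concrete. The sketch below is an illustrative assumption, not a metric used by Seedance 2.0 or by the open-source tools named here. It scores each pair of consecutive grayscale frames by mean absolute pixel difference and flags abrupt jumps above a hypothetical threshold; frames are plain nested lists so the example needs no imaging libraries.

```python
from typing import List, Sequence

# A grayscale frame as rows of pixel intensities in [0.0, 1.0].
Frame = Sequence[Sequence[float]]


def constant_frame(value: float, width: int = 2, height: int = 2) -> List[List[float]]:
    """Build a uniform test frame (illustration only)."""
    return [[value] * width for _ in range(height)]


def frame_mad(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("frames must have the same dimensions")
    diffs = [abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    if not diffs:
        raise ValueError("frames must not be empty")
    return sum(diffs) / len(diffs)


def flicker_scores(frames: Sequence[Frame]) -> List[float]:
    """MAD for each consecutive pair: frame 0->1, 1->2, and so on."""
    return [frame_mad(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]


def flag_jumps(frames: Sequence[Frame], threshold: float = 0.2) -> List[int]:
    """Indices i whose transition from frame i to frame i+1 exceeds threshold.

    The 0.2 default is a hypothetical value chosen for this toy example.
    """
    return [i for i, score in enumerate(flicker_scores(frames)) if score > threshold]


if __name__ == "__main__":
    # Gradual brightening, then an abrupt change between frames 2 and 3.
    clip = [constant_frame(v) for v in (0.0, 0.1, 0.2, 0.9)]
    print(flag_jumps(clip))  # [2]
```

A naive score like this cannot tell flicker apart from legitimate fast motion. More careful evaluations typically compensate for motion (for example with optical flow) before comparing frames.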

The debate around Seedance 2.0 has also taken on cultural dimensions. A viral AI-generated finale for Stranger Things, depicting Eleven, Will, and Kali battling Vecna, was widely attributed to Seedance 2.0 on social media, though no official source confirmed its origin. The episode shows how the platform’s name has become shorthand for high-fidelity AI video, regardless of the tool actually used. According to a recent MSN article, netizens are now debating whether Seedance 2.0’s future lies in democratizing access or in locking users further into a paywall ecosystem.

For hobbyists and indie creators, the takeaway is clear: local AI video generation is no longer science fiction. ComfyUI, a node-based tool for building modular workflows, lets users chain image-to-video, motion-control, and upscaling models into a fully local pipeline. Seedance 2.0 remains the gold standard for ease of use and polish, but its exclusivity is being challenged by a vibrant open-source community.
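The idea of chaining models into one local pipeline can be illustrated without ComfyUI itself. The following sketch uses hypothetical stub stages (`image_to_video`, `motion_control`, `upscale`) that only track clip metadata. They do not call ComfyUI or any real model, and an actual ComfyUI workflow is a node graph rather than the strictly linear chain shown here.

```python
from dataclasses import dataclass, replace
from functools import reduce
from typing import Callable, Tuple


@dataclass(frozen=True)
class Clip:
    """Metadata for a clip moving through the pipeline (no pixel data)."""
    width: int
    height: int
    num_frames: int
    history: Tuple[str, ...] = ()


Stage = Callable[[Clip], Clip]


def image_to_video(num_frames: int) -> Stage:
    """Hypothetical stand-in for an image-to-video model: one still becomes num_frames frames."""
    def run(clip: Clip) -> Clip:
        return replace(clip, num_frames=num_frames,
                       history=clip.history + ("image_to_video",))
    return run


def motion_control() -> Stage:
    """Hypothetical stand-in for a motion-control step; here it only records itself."""
    def run(clip: Clip) -> Clip:
        return replace(clip, history=clip.history + ("motion_control",))
    return run


def upscale(factor: int) -> Stage:
    """Hypothetical stand-in for an upscaler: multiplies the resolution by factor."""
    def run(clip: Clip) -> Clip:
        return replace(clip, width=clip.width * factor, height=clip.height * factor,
                       history=clip.history + (f"upscale_x{factor}",))
    return run


def build_pipeline(*stages: Stage) -> Stage:
    """Chain stages left to right so each stage's output feeds the next."""
    def run(clip: Clip) -> Clip:
        return reduce(lambda acc, stage: stage(acc), stages, clip)
    return run


if __name__ == "__main__":
    pipeline = build_pipeline(image_to_video(16), motion_control(), upscale(2))
    print(pipeline(Clip(width=512, height=512, num_frames=1)))
    # Clip(width=1024, height=1024, num_frames=16, history=('image_to_video', 'motion_control', 'upscale_x2'))
```

Because every stage takes and returns the same type, stages can be reordered, swapped, or dropped independently. That interchangeability is what makes modular, node-based tools attractive for assembling a local pipeline piece by piece.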

As AI video technology evolves, the line between proprietary platforms and open tools continues to blur. For now, those unwilling to pay for Seedance 2.0 need not abandon their creative ambitions: with patience, technical know-how, and a powerful GPU, cinematic AI video is within reach on their own desktop.