Anthropic Announces Multibillion-Dollar Data Center Initiative and Poaches Google AI Executives

Anthropic is investing hundreds of billions of dollars in proprietary data centers to support its next-generation AI models, while aggressively recruiting senior AI infrastructure leaders from Google. The move signals a strategic pivot toward vertical integration in the competitive AI infrastructure race.

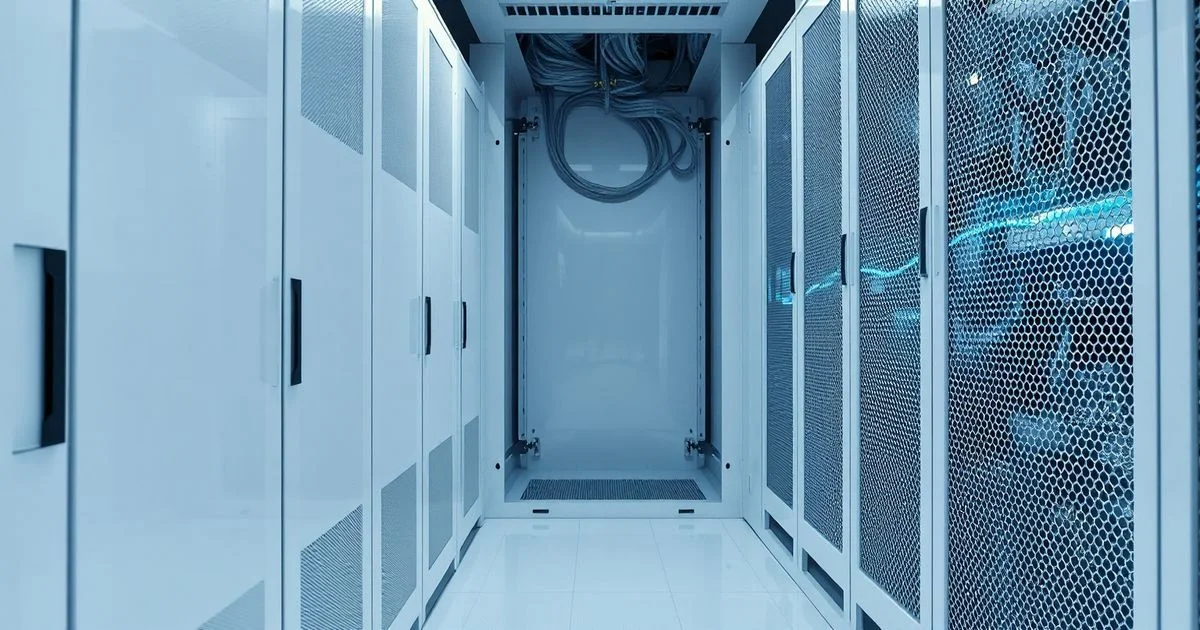

In a landmark strategic shift, AI research firm Anthropic has unveiled plans to invest hundreds of billions of dollars in building its own proprietary data center infrastructure, marking one of the most ambitious vertical integration moves in the history of artificial intelligence. Simultaneously, the company is actively recruiting top-tier engineering and infrastructure executives from Google’s AI division, signaling a deepening rivalry in the race to control the foundational layers of next-generation AI systems.

According to internal company announcements and industry sources, Anthropic’s new infrastructure initiative aims to reduce reliance on third-party cloud providers like Amazon Web Services and Microsoft Azure. The company’s leadership believes that proprietary compute capacity is essential to maintain control over model training cycles, ensure data sovereignty, and accelerate innovation for its Claude series of large language models. The investment, which could exceed $300 billion over the next five years, would position Anthropic among the top global spenders on AI infrastructure — rivaling even the largest tech conglomerates.

Central to this expansion is a targeted talent acquisition campaign. Multiple senior engineering leaders who previously oversaw Google’s TPU (Tensor Processing Unit) deployments and global data center operations have been approached with offers to lead Anthropic’s new infrastructure division. While formal announcements are pending, sources familiar with the hiring process confirm that at least five former Google directors and vice presidents in AI infrastructure have accepted roles at Anthropic since late 2025. This talent migration follows a broader trend in the AI industry, where companies are increasingly competing not just for algorithmic breakthroughs, but for the physical and logistical backbone that powers them.

Anthropic’s recent release of Claude Opus 4.6 on February 5, 2026, underscores the urgency behind this infrastructure push. The model, which features a 1 million token context window and enhanced coding and agent capabilities, demands unprecedented computational resources for training and inference. According to Anthropic’s official news release, Opus 4.6 represents “the most capable model to date,” with improvements in reliability, precision, and enterprise workflow integration. These gains, the company notes, are only possible with dedicated, low-latency compute environments that are not constrained by shared cloud architectures.

As part of its broader strategy, Anthropic has also expanded its educational initiatives through Anthropic Academy, offering enterprise-focused courses on Claude Code, API development, and Model Context Protocol. These resources are designed to onboard developers and corporate clients onto Anthropic’s ecosystem, creating a feedback loop between product usage and infrastructure demands. The company’s commitment to transparency and responsible scaling — outlined in its updated Responsible Scaling Policy — further positions it as a trusted partner for regulated industries seeking to deploy AI without compromising security or compliance.

Industry analysts view Anthropic’s dual strategy of massive capital investment in hardware and strategic talent acquisition as a direct challenge to the dominance of Big Tech in AI infrastructure. While Google, Microsoft, and Amazon continue to dominate cloud AI services, Anthropic’s move suggests a growing belief that true AI leadership requires ownership of the entire stack, from silicon to safety protocols. The company’s founding team, which includes former OpenAI researchers, has long emphasized the need for independent infrastructure to avoid conflicts of interest and ensure long-term alignment with the public interest.

As global demand for AI compute surges, Anthropic’s bold bet could redefine the competitive landscape. If successful, the company may emerge not just as a leading AI model developer, but as a vertically integrated AI infrastructure provider — potentially reshaping how future AI systems are built, deployed, and governed.