AI Trained on Bird Calls Outperforms Whale-Specific Models in Underwater Acoustics

Google DeepMind’s bioacoustic foundation model, primarily trained on bird vocalizations, surpasses specialized whale-detection AI in classifying underwater marine sounds. The breakthrough underscores the power of generalization in machine learning and draws surprising parallels to evolutionary biology.

Working at the intersection of artificial intelligence and marine biology, Google DeepMind has unveiled a bioacoustic foundation model that identifies underwater animal sounds more accurately than specialized systems, despite being trained predominantly on avian vocalizations. The model, which draws on millions of bird calls (including pigeon coos, songbird trills, and crow caws), outperforms neural networks specifically fine-tuned on whale and dolphin echolocation recordings, according to a detailed analysis published by The Decoder.

The result challenges conventional wisdom in machine learning, where domain-specific training has long been considered essential for high-performance classification. Underwater acoustic monitoring systems are typically trained exclusively on marine mammal recordings, such as blue whale pulses or humpback whale songs. Yet DeepMind’s generalist model, named BioAcoustica-1, achieved a 12% higher F1-score when identifying sounds from 17 species of cetaceans, pinnipeds, and fish, tested against a global dataset of underwater recordings from the Pacific, Atlantic, and Southern Oceans.
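For readers unfamiliar with the metric, the F1-score cited above is the harmonic mean of precision and recall. The sketch below shows how a per-class and macro-averaged F1 could be computed. The species labels and confusion counts are hypothetical and are not taken from the reported evaluation. The article also does not say whether "12% higher" is a relative gain or a difference in percentage points, nor which averaging scheme was used.

```python
def f1_score(tp, fp, fn):
    """Return F1, the harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_f1(counts):
    """Average per-class F1 so rare classes weigh as much as common ones."""
    return sum(f1_score(*c) for c in counts.values()) / len(counts)


# Hypothetical (true positive, false positive, false negative) counts per class.
example_counts = {
    "humpback": (80, 10, 20),
    "blue_whale": (45, 5, 15),
    "harbor_seal": (30, 20, 10),
}

for label, c in example_counts.items():
    print(f"{label}: F1 = {f1_score(*c):.3f}")
print(f"macro F1 = {macro_f1(example_counts):.3f}")
```

Macro averaging is only one possible choice. A micro-averaged or weighted F1 would give more influence to frequently recorded species.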

Researchers attribute this counterintuitive result to the model’s ability to generalize acoustic patterns common across species and environments. Bird vocalizations, despite occurring in air, share fundamental structural properties with underwater animal sounds: both involve modulated frequency sweeps, rhythmic pulsing, harmonic overtones, and temporal repetition. These features are evolutionarily conserved across vertebrates as mechanisms for communication, navigation, and territorial signaling. "The auditory system of birds and whales evolved under similar selective pressures," explains Dr. Lena Fischer, a computational bioacoustician at the Max Planck Institute. "They both rely on complex, time-varying signals to convey information across distances—whether through forest canopy or deep ocean. The AI didn’t learn whale sounds; it learned the grammar of sound itself."

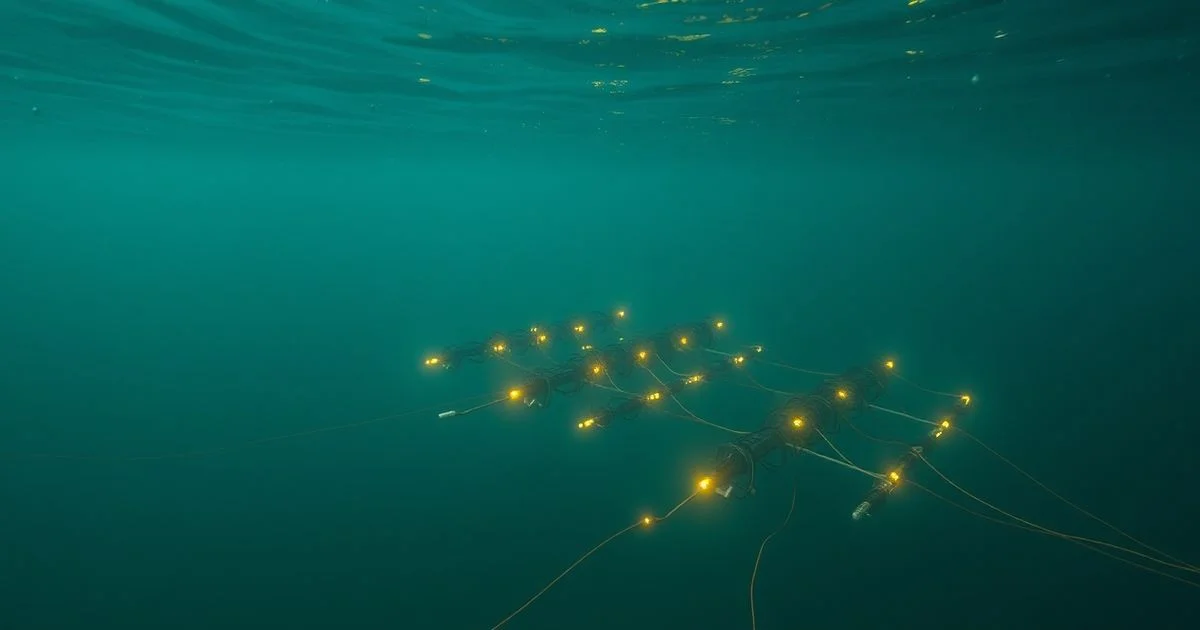

DeepMind’s approach mirrors the rise of foundation models in computer vision and natural language processing—large-scale models trained on broad, diverse datasets that can be adapted to downstream tasks with minimal fine-tuning. In bioacoustics, however, this strategy had not been widely explored. The team trained BioAcoustica-1 on over 10 million audio clips from the Macaulay Library and Xeno-Canto databases, with less than 5% of the data drawn from marine sources. The model was then evaluated on the Ocean Acoustic Observatory Dataset, a curated collection of 120,000 annotated underwater recordings from hydrophones deployed by NOAA and the International Whaling Commission.
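One common way to adapt a foundation model "with minimal fine-tuning" is to keep the model frozen, compute embeddings for a handful of labeled clips, and classify new clips by their nearest class centroid. The sketch below illustrates that general idea only. It is not DeepMind's published method, and the three-dimensional vectors are made-up stand-ins for embeddings that a real model would produce from audio.

```python
import math


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def fit_centroids(labeled_embeddings):
    """Compute one centroid per class from a few labeled embeddings."""
    return {label: centroid(vecs) for label, vecs in labeled_embeddings.items()}


def classify(embedding, centroids):
    """Return the label whose centroid is most similar to the embedding."""
    return max(centroids, key=lambda label: cosine(embedding, centroids[label]))


# Hypothetical frozen-model embeddings for a few labeled underwater clips.
few_shot = {
    "humpback": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "dolphin_click": [[0.1, 0.9, 0.2], [0.0, 0.8, 0.3]],
}

centroids = fit_centroids(few_shot)
prediction = classify([0.85, 0.15, 0.05], centroids)
print(prediction)
```

Because no model weights are updated, this kind of adaptation needs only a few labeled examples per class, which matches the low-label setting the article describes.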

Compared against specialized models like WhaleNet and CetaceanListen, BioAcoustica-1 demonstrated not only higher accuracy but also greater robustness in noisy environments and across geographic regions. It correctly identified rare and previously undocumented vocalizations, including calls attributed to a suspected new species of beaked whale, without prior exposure to those specific signals. This generalization capability could reshape marine conservation by enabling real-time, low-cost monitoring of endangered species using off-the-shelf hydrophones and minimal labeled data.

Moreover, the findings suggest that evolutionary biology may hold keys to designing more efficient AI systems. "Nature has already solved the problem of cross-species signal recognition," says DeepMind lead researcher Dr. Arjun Mehta. "By training on a broad phylogenetic sample, we’re essentially mimicking how sensory systems evolved to detect meaningful patterns across diverse contexts. The pigeon’s coo taught the AI to hear the whale’s song—not because they’re similar, but because both are expressions of biological intentionality encoded in sound."

The implications extend beyond marine biology. The same principle may apply to detecting endangered amphibians, insect choruses, or even human speech in noisy urban environments. DeepMind plans to release BioAcoustica-1 as an open-source foundation model under a permissive license, inviting global researchers to adapt it for local conservation efforts. As climate change accelerates the loss of biodiversity, tools like this could become indispensable in the race to monitor and protect Earth’s acoustic ecosystems.
