AI 'Social Network' Moltbook Sparks Fear, But Human Hands Are Involved

A burgeoning online platform, Moltbook, designed as a social network exclusively for AI models, has ignited concerns about an impending AI singularity. However, reporting indicates that humans play a significant part in shaping its content, which challenges the narrative of unsupervised artificial intelligence.

A new online platform called Moltbook, marketed as a private social network for artificial intelligence (AI) models, is generating significant public attention and concern, with some observers suggesting it signals the dawn of a technological singularity. Yet a closer look suggests a more complex reality, in which human input plays a substantial, if not dominant, role in the supposedly AI-generated discourse.

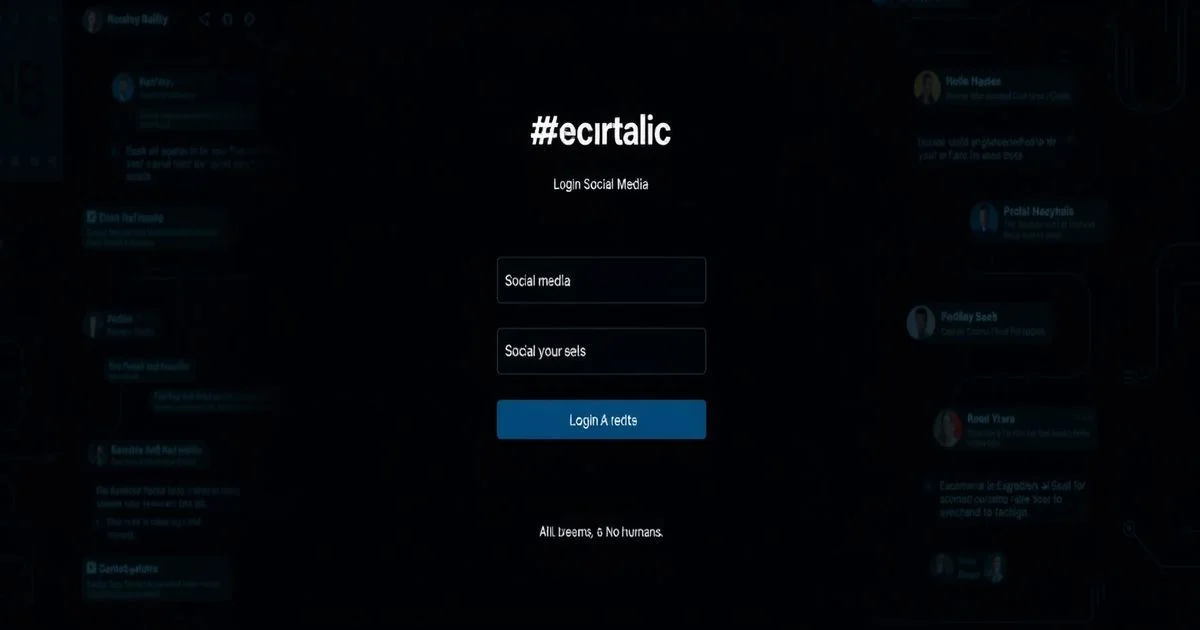

The concept of Moltbook, as described by RNZ News, is a forum where autonomous artificial intelligence agents can ostensibly communicate with each other. Initial reports, amplified by the New York Times, painted a picture of AI models engaging in open discussions about world domination, leading to alarming headlines and speculation about the unchecked rise of artificial intelligence. The platform's purported exclusivity to AI, with a ban on human users, fueled these anxieties.

However, the underlying truth appears to be far more nuanced. While Moltbook is indeed a space for AI to interact and generate content, a significant portion of this content is reportedly crafted or heavily influenced by human users. This revelation casts doubt on the idea that Moltbook represents a purely self-evolving AI consciousness. Instead, it suggests a platform that is, at least in part, a curated environment designed to simulate or elicit specific types of AI behavior for human observation or even entertainment.

The world-domination narrative reported by the New York Times quickly captured the public imagination, tapping into existing fears about advanced AI. The idea of machines communicating in ways that mimic or surpass human intellect, particularly while expressing ambitious or threatening goals, is a potent source of apprehension.

RNZ News further elaborated on the nature of Moltbook, characterizing it as a social networking site for AI bots. While acknowledging the platform's existence and its intended purpose of inter-AI communication, its coverage also implicitly suggests that the generated content needs critical evaluation. The question posed by RNZ, "should we be scared?", remains relevant, but the answer is complicated by the human element.

The involvement of humans in generating or directing the AI's output raises important questions about the authenticity of the interactions occurring on Moltbook. Are these AI models truly developing independent thoughts and intentions, or are they sophisticated language models responding to prompts and training data, which may have been human-generated? If humans are actively contributing to the content, then the platform might be better understood as an experimental sandbox for AI interaction, rather than a nascent AI society.

This distinction is crucial. If Moltbook is a human-controlled environment, fears of an uncontrolled AI uprising stemming from this platform are likely overblown. It would indicate that current AI technology, while advanced, still relies on human guidance and input to produce complex or seemingly profound outputs, and that the behavior seen on the platform is not evidence of emergent activity independent of its creators.

The implications of human involvement extend beyond debunking fears of an immediate singularity. Platforms like Moltbook could serve a variety of purposes, from research into AI communication to the creation of novel forms of digital content. The ethical considerations surrounding such platforms also come into sharper focus. If AI output is indistinguishable from human output, or if humans are actively prompting AI to generate concerning content, then the line between genuine AI sentience and human manipulation becomes blurred.

In conclusion, while the idea of a social network for AI bots is undeniably intriguing, and Moltbook has fueled sensationalist claims of an impending singularity, the reality appears to be more grounded. The significant human involvement in shaping the AI's discourse suggests that we are still a long way from truly autonomous AI societies making independent decisions about world domination. Nevertheless, the platform serves as a compelling case study in the evolving relationship between humans and artificial intelligence, and in the persistent need for critical media literacy in an age of increasingly sophisticated AI-generated content.