AI Enthusiast Deploys 120B Parameter Model on Dual RTX 5090, Sparks Community Interest

An anonymous developer has successfully deployed a 120-billion-parameter open-source LLM on a dual RTX 5090 system, achieving 128k context processing with CPU offloading. The feat, shared on Reddit’s r/LocalLLaMA, highlights the growing capability of consumer-grade hardware to run large-scale AI models.

An anonymous developer, posting under the username /u/Interesting-Ad4922, has drawn attention in the AI community for running GPT-OSS-120b, a 120-billion-parameter open-weight language model, on a dual NVIDIA RTX 5090 workstation. The setup, shared on the Reddit forum r/LocalLLaMA, shows that extremely large language models (LLMs) are increasingly feasible to run on consumer-grade hardware.

The user reported running the model with a 128k-token context window, a notable milestone for local inference, by aggressively offloading part of the workload to the CPU, which yielded approximately 10 tokens per second. That rate is far below the throughput of cloud-hosted models such as GPT-4 or Claude 3, but it marks a significant step for decentralized AI inference. The post, accompanied by a screenshot of terminal output and system metrics, has since attracted over 1,200 upvotes and hundreds of comments from developers, researchers, and hobbyists eager to replicate or refine the setup.
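Much of the memory pressure at long context comes from the key/value (KV) cache, which grows linearly with the number of tokens held in context. The sketch below estimates its size for a generic transformer. The layer count, KV-head count and head dimension are placeholder values chosen for illustration, not figures from the post, so the model's own configuration file should be consulted for real numbers. The estimate also ignores optimizations such as sliding-window attention or a quantized KV cache, which can shrink the total considerably.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for every token in the context.

    The leading factor of 2 accounts for storing both a key vector and a
    value vector per KV head, per layer, per token.
    """
    return 2 * n_layers * n_kv_heads * head_dim * context_tokens * bytes_per_value


if __name__ == "__main__":
    # Placeholder transformer shape for illustration only; check the
    # model's configuration file for its actual values.
    n_layers, n_kv_heads, head_dim = 36, 8, 64
    context = 128 * 1024  # a "128k" context is commonly 131,072 tokens

    size = kv_cache_bytes(n_layers, n_kv_heads, head_dim, context)
    # 16-bit (2-byte) keys and values, reported in decimal gigabytes
    print(f"KV cache at {context:,} tokens: {size / 1e9:.1f} GB (16-bit values)")
```

With these placeholder values the cache alone comes to roughly 9.7 GB at 131,072 tokens, all of which competes with the model weights for VRAM.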

The model’s name is no coincidence: GPT-OSS-120b is one of two open-weight models OpenAI released in August 2025 under the Apache 2.0 license, alongside the smaller gpt-oss-20b. It is unrelated to OpenAI’s earlier GitHub repositories. The GPT-2 repository released code and weights for models of up to 1.5B parameters, while the GPT-3 repository contains only sample outputs and evaluation data; the weights of the 175B-parameter GPT-3 were never published. GPT-OSS-120b is a mixture-of-experts model with roughly 117 billion total parameters, of which about 5.1 billion are active for each token, and it runs on open-source inference frameworks such as llama.cpp and vLLM.

The RTX 5090, NVIDIA’s Blackwell-generation successor to the RTX 4090, launched in early 2025 with 32 GB of GDDR7 memory per card, giving a dual-card system 64 GB of VRAM. That leaves little headroom for a 120B-parameter model plus a 128k-token context: even at 4-bit precision, the weights alone occupy roughly 60 GB (120 billion parameters × 0.5 bytes), before the cache needed for long contexts is counted. The overflow has to live in system RAM and be processed by the CPU, and because CPU offloading is typically a performance bottleneck, it likely accounts for the modest generation speed. Quantizing weights to 4-bit or 5-bit precision is a common technique in the local LLM community for reducing memory footprint without catastrophic loss of quality, and GPT-OSS-120b is itself distributed with most of its weights in the 4-bit MXFP4 format. Even so, the result suggests that consumer hardware is steadily narrowing the gap with data center accelerators for local inference.
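The memory budget behind that offloading can be sketched with simple arithmetic. The Python below is a simplified model, not how any particular runtime allocates memory: it assumes weight storage is parameters × bits ÷ 8, reserves a hypothetical 12 GB of the two cards' 64 GB (32 GB each) for the KV cache, activations and runtime overhead, and treats whatever weights do not fit in the remainder as offloaded to system RAM. Real runtimes such as llama.cpp split models by layer or tensor and add their own overhead.

```python
from dataclasses import dataclass


@dataclass
class OffloadPlan:
    weights_gb: float      # total size of the quantized weights
    gpu_weights_gb: float  # portion of the weights that fits in VRAM
    cpu_weights_gb: float  # portion left in system RAM for the CPU


def weight_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


def plan_offload(n_params: float, bits_per_param: float,
                 vram_gb: float, reserved_gb: float) -> OffloadPlan:
    """Split the weights between GPU memory and system RAM.

    `reserved_gb` is VRAM held back for the KV cache, activations and
    runtime overhead (a hypothetical figure); weights that do not fit in
    the remaining budget are assumed to be offloaded to the CPU.
    """
    total = weight_gb(n_params, bits_per_param)
    budget = max(vram_gb - reserved_gb, 0.0)
    on_gpu = min(total, budget)
    return OffloadPlan(total, on_gpu, total - on_gpu)


if __name__ == "__main__":
    vram = 2 * 32.0  # two RTX 5090 cards with 32 GB each
    for bits in (4, 5, 16):
        # 12 GB reservation is an illustrative assumption, not a measurement
        plan = plan_offload(120e9, bits, vram, reserved_gb=12.0)
        print(f"{bits:>2}-bit: {plan.weights_gb:6.1f} GB of weights, "
              f"{plan.gpu_weights_gb:5.1f} GB on GPU, "
              f"{plan.cpu_weights_gb:6.1f} GB offloaded to CPU")
```

Under these assumptions a 4-bit, 120B-parameter model leaves about 8 GB of weights on the CPU side, while a 16-bit copy would push most of the model off the GPUs, which is why quantization and offloading usually go together in setups like this one.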

Experts in the field note that such deployments are becoming increasingly common as open-source models like Llama 3, Mistral, and Qwen reach 70B–120B parameter scales. "This isn’t just a technical stunt—it’s a sign of democratization," said Dr. Elena Torres, a computational linguist at Stanford’s AI Lab. "When individuals can run models of this scale on their desktops, it shifts power away from centralized cloud providers and into the hands of developers, educators, and privacy-conscious users."

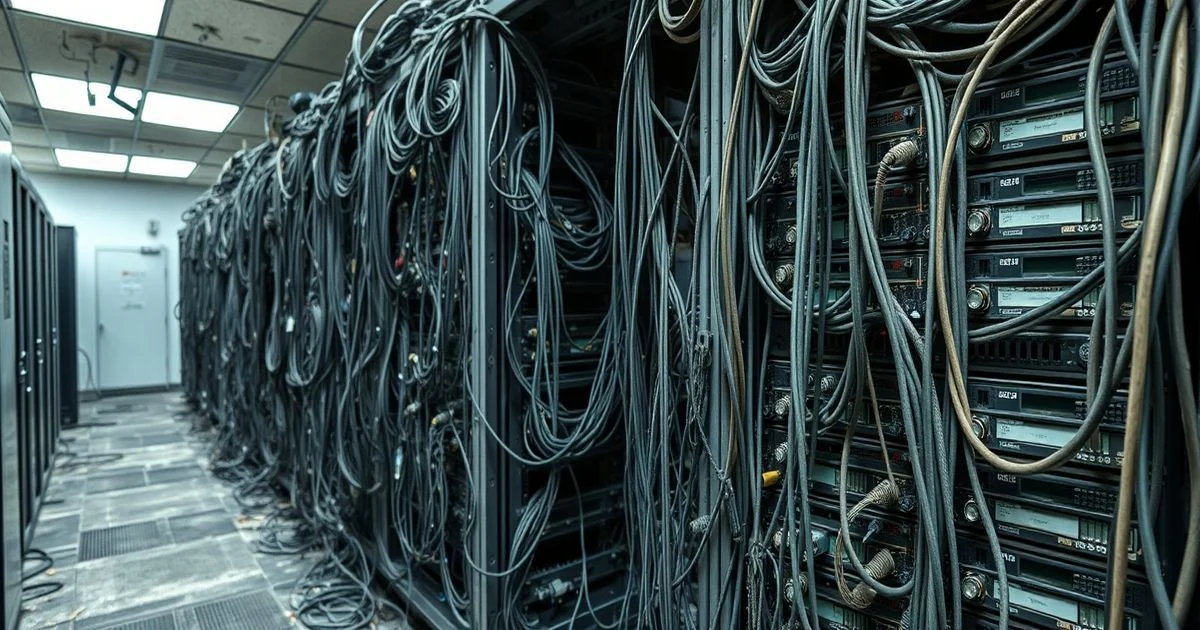

The broader implications extend beyond performance metrics. Local deployment reduces latency for real-time applications, avoids the data-privacy risks of sending prompts to cloud APIs, and enables offline operation in regulated or low-connectivity environments. However, challenges remain: power consumption, heat dissipation, and software compatibility are non-trivial hurdles. The Reddit thread includes multiple requests for detailed configuration files, which the original poster has not yet released, fueling speculation about whether the setup is a one-off prototype or part of a larger open-source initiative.

As the AI community continues to push the boundaries of what’s possible on consumer hardware, this deployment serves as both a milestone and a call to action. Whether the configuration is eventually published or remains a personal triumph, it underscores a transformative trend: the era of the desktop supercomputer is no longer science fiction. It is already here.